Search Results for author: Ying WEI

Found 54 papers, 22 papers with code

Self-Supervised Graph Transformer on Large-Scale Molecular Data

3 code implementations • NeurIPS 2020 • Yu Rong, Yatao Bian, Tingyang Xu, Weiyang Xie, Ying WEI, Wenbing Huang, Junzhou Huang

We pre-train GROVER with 100 million parameters on 10 million unlabelled molecules -- the biggest GNN and the largest training dataset in molecular representation learning.

Ranked #4 on Molecular Property Prediction on QM7

Adversarial Sparse Transformer for Time Series Forecasting

1 code implementation • NeurIPS 2020 • Sifan Wu, Xi Xiao, Qianggang Ding, Peilin Zhao, Ying WEI, Junzhou Huang

Specifically, AST adopts a Sparse Transformer as the generator to learn a sparse attention map for time series forecasting, and uses a discriminator to improve the prediction performance at the sequence level.

Multivariate Time Series Forecasting • Probabilistic Time Series Forecasting

Blind Image Quality Assessment via Vision-Language Correspondence: A Multitask Learning Perspective

1 code implementation • CVPR 2023 • Weixia Zhang, Guangtao Zhai, Ying WEI, Xiaokang Yang, Kede Ma

We aim at advancing blind image quality assessment (BIQA), which predicts the human perception of image quality without any reference information.

Hierarchical Attention Transfer Network for Cross-Domain Sentiment Classification

1 code implementation • Thirty-Second AAAI Conference on Artificial Intelligence 2018 • Zheng Li, Ying WEI, Yu Zhang, Qiang Yang

Existing cross-domain sentiment classification methods cannot automatically capture non-pivots, i.e., the domain-specific sentiment words, and pivots, i.e., the domain-shared sentiment words, simultaneously.

Transferable End-to-End Aspect-based Sentiment Analysis with Selective Adversarial Learning

1 code implementation • IJCNLP 2019 • Zheng Li, Xin Li, Ying WEI, Lidong Bing, Yu Zhang, Qiang Yang

Joint extraction of aspects and sentiments can be effectively formulated as a sequence labeling problem.

Aspect-Based Sentiment Analysis • Aspect-Based Sentiment Analysis (ABSA)

Hierarchically Structured Meta-learning

1 code implementation • 13 May 2019 • Huaxiu Yao, Ying WEI, Junzhou Huang, Zhenhui Li

In order to learn quickly with few samples, meta-learning utilizes prior knowledge learned from previous tasks.

Learning from Multiple Cities: A Meta-Learning Approach for Spatial-Temporal Prediction

1 code implementation • 24 Jan 2019 • Huaxiu Yao, Yiding Liu, Ying WEI, Xianfeng Tang, Zhenhui Li

Specifically, our proposed model is designed as a spatial-temporal network with a meta-learning paradigm.

Exploiting Coarse-to-Fine Task Transfer for Aspect-level Sentiment Classification

1 code implementation • AAAI 2019 • Zheng Li, Ying WEI, Yu Zhang, Xiang Zhang, Xin Li, Qiang Yang

Aspect-level sentiment classification (ASC) aims at identifying sentiment polarities towards aspects in a sentence, where the aspect can behave as a general Aspect Category (AC) or a specific Aspect Term (AT).

Graph Few-shot Learning via Knowledge Transfer

1 code implementation • 7 Oct 2019 • Huaxiu Yao, Chuxu Zhang, Ying WEI, Meng Jiang, Suhang Wang, Junzhou Huang, Nitesh V. Chawla, Zhenhui Li

The challenging problem of semi-supervised node classification has been studied extensively.

FGAHOI: Fine-Grained Anchors for Human-Object Interaction Detection

1 code implementation • 8 Jan 2023 • Shuailei Ma, Yuefeng Wang, Shanze Wang, Ying WEI

HSAM and TAM semantically align and merge the extracted features and query embeddings in the hierarchical spatial and task perspectives in turn.

Ranked #6 on Human-Object Interaction Detection on HICO-DET

Improving Generalization in Meta-learning via Task Augmentation

1 code implementation • 26 Jul 2020 • Huaxiu Yao, Long-Kai Huang, Linjun Zhang, Ying WEI, Li Tian, James Zou, Junzhou Huang, Zhenhui Li

Moreover, both MetaMix and Channel Shuffle outperform state-of-the-art results by a large margin across many datasets and are compatible with existing meta-learning algorithms.

Meta-learning with an Adaptive Task Scheduler

2 code implementations • NeurIPS 2021 • Huaxiu Yao, Yu Wang, Ying WEI, Peilin Zhao, Mehrdad Mahdavi, Defu Lian, Chelsea Finn

In ATS, for the first time, we design a neural scheduler that decides which meta-training tasks to use next by predicting the probability of each candidate task being sampled, and we train the scheduler to optimize the generalization capacity of the meta-model to unseen tasks.

Collaborative Unsupervised Domain Adaptation for Medical Image Diagnosis

1 code implementation • 17 Nov 2019 • Yifan Zhang, Ying WEI, Peilin Zhao, Shuaicheng Niu, Qingyao Wu, Mingkui Tan, Junzhou Huang

In this paper, we seek to exploit rich labeled data from relevant domains to help the learning in the target task with unsupervised domain adaptation (UDA).

COVID-DA: Deep Domain Adaptation from Typical Pneumonia to COVID-19

1 code implementation • 30 Apr 2020 • Yifan Zhang, Shuaicheng Niu, Zhen Qiu, Ying WEI, Peilin Zhao, Jianhua Yao, Junzhou Huang, Qingyao Wu, Mingkui Tan

There are two main challenges: 1) the discrepancy of data distributions between domains; 2) the task difference between the diagnosis of typical pneumonia and COVID-19.

Collaborative Unsupervised Domain Adaptation for Medical Image Diagnosis

1 code implementation • 5 Jul 2020 • Yifan Zhang, Ying WEI, Qingyao Wu, Peilin Zhao, Shuaicheng Niu, Junzhou Huang, Mingkui Tan

Deep learning based medical image diagnosis has shown great potential in clinical medicine.

Fisher Deep Domain Adaptation

1 code implementation • 12 Mar 2020 • Yinghua Zhang, Yu Zhang, Ying WEI, Kun Bai, Yangqiu Song, Qiang Yang

Though the learned representations are separable in the source domain, they usually have a large variance and samples with different class labels tend to overlap in the target domain, which yields suboptimal adaptation performance.

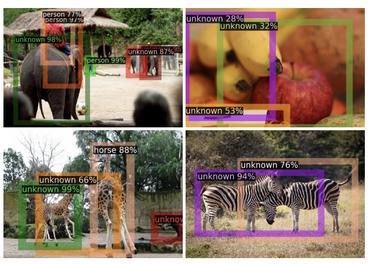

Detecting the open-world objects with the help of the Brain

1 code implementation • 21 Mar 2023 • Shuailei Ma, Yuefeng Wang, Ying WEI, Peihao Chen, Zhixiang Ye, Jiaqi Fan, Enming Zhang, Thomas H. Li

We propose leveraging the VL as the "Brain" of the open-world detector by simply generating unknown labels.

SKDF: A Simple Knowledge Distillation Framework for Distilling Open-Vocabulary Knowledge to Open-world Object Detector

1 code implementation • 14 Dec 2023 • Shuailei Ma, Yuefeng Wang, Ying WEI, Jiaqi Fan, Enming Zhang, Xinyu Sun, Peihao Chen

Ablation experiments demonstrate that both of them are effective in mitigating the impact of open-world knowledge distillation on the learning of known objects.

Learning to Substitute Spans towards Improving Compositional Generalization

1 code implementation • 5 Jun 2023 • Zhaoyi Li, Ying WEI, Defu Lian

Despite the rising prevalence of neural sequence models, recent empirical evidence suggests their deficiency in compositional generalization.

Towards Anytime Fine-tuning: Continually Pre-trained Language Models with Hypernetwork Prompt

1 code implementation • 19 Oct 2023 • Gangwei Jiang, Caigao Jiang, Siqiao Xue, James Y. Zhang, Jun Zhou, Defu Lian, Ying WEI

In this work, we first investigate the anytime fine-tuning effectiveness of existing continual pre-training approaches and find that all of them show decreased performance on unseen domains.

Unleashing the Power of Meta-tuning for Few-shot Generalization Through Sparse Interpolated Experts

1 code implementation • 13 Mar 2024 • Shengzhuang Chen, Jihoon Tack, Yunqiao Yang, Yee Whye Teh, Jonathan Richard Schwarz, Ying WEI

Conventional wisdom suggests parameter-efficient fine-tuning of foundation models as the state-of-the-art method for transfer learning in vision, replacing the rich literature of alternatives such as meta-learning.

Benchmarking and Improving Compositional Generalization of Multi-aspect Controllable Text Generation

1 code implementation • 5 Apr 2024 • Tianqi Zhong, Zhaoyi Li, Quan Wang, Linqi Song, Ying WEI, Defu Lian, Zhendong Mao

Compositional generalization, representing the model's ability to generate text with new attribute combinations obtained by recombining single attributes from the training data, is a crucial property for multi-aspect controllable text generation (MCTG) methods.

Learning to Multitask

no code implementations • NeurIPS 2018 • Yu Zhang, Ying WEI, Qiang Yang

Based on such a training set, L2MT first uses a proposed layerwise graph neural network to learn task embeddings for all the tasks in a multitask problem, and then learns an estimation function to estimate the relative test error based on the task embeddings and the representation of the multitask model based on a unified formulation.

Learning to Transfer

no code implementations • 18 Aug 2017 • Ying Wei, Yu Zhang, Qiang Yang

We establish the L2T framework in two stages: 1) we first learn a reflection function encoding transfer learning skills from experiences; and 2) we infer what and how to transfer for a newly arrived pair of domains by optimizing the reflection function.

Transfer Learning via Learning to Transfer

no code implementations • ICML 2018 • Ying WEI, Yu Zhang, Junzhou Huang, Qiang Yang

In transfer learning, what and how to transfer are two primary issues to be addressed, as different transfer learning algorithms applied between a source and a target domain result in different knowledge transferred and thereby the performance improvement in the target domain.

Transferable Neural Processes for Hyperparameter Optimization

no code implementations • 7 Sep 2019 • Ying Wei, Peilin Zhao, Huaxiu Yao, Junzhou Huang

Automated machine learning aims to automate the whole process of machine learning, including model configuration.

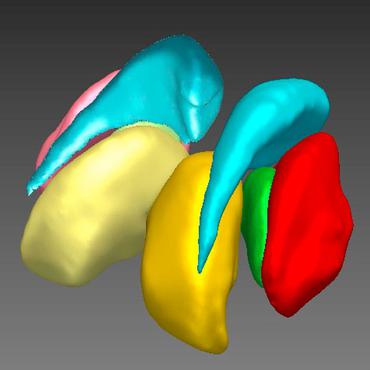

Infant brain MRI segmentation with dilated convolution pyramid downsampling and self-attention

no code implementations • 29 Dec 2019 • Zhihao Lei, Lin Qi, Ying WEI, Yunlong Zhou

In this paper, we propose a dual aggregation network to adaptively aggregate different information in infant brain MRI segmentation.

Large-Scale Screening of COVID-19 from Community Acquired Pneumonia using Infection Size-Aware Classification

no code implementations • 22 Mar 2020 • Feng Shi, Liming Xia, Fei Shan, Dijia Wu, Ying WEI, Huan Yuan, Huiting Jiang, Yaozong Gao, He Sui, Dinggang Shen

The worldwide spread of coronavirus disease (COVID-19) has become a threatening risk for global public health.

Dual-Sampling Attention Network for Diagnosis of COVID-19 from Community Acquired Pneumonia

no code implementations • 6 May 2020 • Xi Ouyang, Jiayu Huo, Liming Xia, Fei Shan, Jun Liu, Zhanhao Mo, Fuhua Yan, Zhongxiang Ding, Qi Yang, Bin Song, Feng Shi, Huan Yuan, Ying WEI, Xiaohuan Cao, Yaozong Gao, Dijia Wu, Qian Wang, Dinggang Shen

To this end, we develop a dual-sampling attention network to automatically diagnose COVID-19 from the community acquired pneumonia (CAP) in chest computed tomography (CT).

Adaptive Feature Selection Guided Deep Forest for COVID-19 Classification with Chest CT

no code implementations • 7 May 2020 • Liang Sun, Zhanhao Mo, Fuhua Yan, Liming Xia, Fei Shan, Zhongxiang Ding, Wei Shao, Feng Shi, Huan Yuan, Huiting Jiang, Dijia Wu, Ying WEI, Yaozong Gao, Wanchun Gao, He Sui, Daoqiang Zhang, Dinggang Shen

We evaluated our proposed AFS-DF on COVID-19 dataset with 1495 patients of COVID-19 and 1027 patients of community acquired pneumonia (CAP).

Hypergraph Learning for Identification of COVID-19 with CT Imaging

no code implementations • 7 May 2020 • Donglin Di, Feng Shi, Fuhua Yan, Liming Xia, Zhanhao Mo, Zhongxiang Ding, Fei Shan, Shengrui Li, Ying WEI, Ying Shao, Miaofei Han, Yaozong Gao, He Sui, Yue Gao, Dinggang Shen

The main challenge in early screening is how to model the confusing cases in the COVID-19 and CAP groups, with very similar clinical manifestations and imaging features.

Multi-Site Infant Brain Segmentation Algorithms: The iSeg-2019 Challenge

no code implementations • 4 Jul 2020 • Yue Sun, Kun Gao, Zhengwang Wu, Zhihao Lei, Ying WEI, Jun Ma, Xiaoping Yang, Xue Feng, Li Zhao, Trung Le Phan, Jitae Shin, Tao Zhong, Yu Zhang, Lequan Yu, Caizi Li, Ramesh Basnet, M. Omair Ahmad, M. N. S. Swamy, Wenao Ma, Qi Dou, Toan Duc Bui, Camilo Bermudez Noguera, Bennett Landman, Ian H. Gotlib, Kathryn L. Humphreys, Sarah Shultz, Longchuan Li, Sijie Niu, Weili Lin, Valerie Jewells, Gang Li, Dinggang Shen, Li Wang

Deep learning-based methods have achieved state-of-the-art performance; however, one of the major limitations is that learning-based methods may suffer from the multi-site issue, that is, models trained on a dataset from one site may not be applicable to datasets acquired from other sites with different imaging protocols/scanners.

Learn to Cross-lingual Transfer with Meta Graph Learning Across Heterogeneous Languages

no code implementations • EMNLP 2020 • Zheng Li, Mukul Kumar, William Headden, Bing Yin, Ying WEI, Yu Zhang, Qiang Yang

The recent emergence of multilingual pre-trained language models (mPLMs) has enabled breakthroughs on various downstream cross-lingual transfer (CLT) tasks.

A novel multiple instance learning framework for COVID-19 severity assessment via data augmentation and self-supervised learning

no code implementations • 7 Feb 2021 • Zekun Li, Wei Zhao, Feng Shi, Lei Qi, Xingzhi Xie, Ying WEI, Zhongxiang Ding, Yang Gao, Shangjie Wu, Jun Liu, Yinghuan Shi, Dinggang Shen

How to assess the severity level of COVID-19 quickly and accurately is an essential problem when millions of people around the world are suffering from the pandemic.

Frustratingly Easy Transferability Estimation

no code implementations • 17 Jun 2021 • Long-Kai Huang, Ying WEI, Yu Rong, Qiang Yang, Junzhou Huang

Transferability estimation has been an essential tool in selecting, for transfer learning, a pre-trained model and the layers in it to transfer, so as to maximize the performance on a target task and prevent negative transfer.

Cross-Site Severity Assessment of COVID-19 from CT Images via Domain Adaptation

no code implementations • 8 Sep 2021 • Geng-Xin Xu, Chen Liu, Jun Liu, Zhongxiang Ding, Feng Shi, Man Guo, Wei Zhao, Xiaoming Li, Ying WEI, Yaozong Gao, Chuan-Xian Ren, Dinggang Shen

In particular, we propose a domain translator and align the heterogeneous data to the estimated class prototypes (i.e., class centers) on a hypersphere manifold.

Minimizing Memorization in Meta-learning: A Causal Perspective

no code implementations • 29 Sep 2021 • Yinjie Jiang, Zhengyu Chen, Luotian Yuan, Ying WEI, Kun Kuang, Xinhai Ye, Zhihua Wang, Fei Wu

Meta-learning has emerged as a potent paradigm for quick learning of few-shot tasks, by leveraging the meta-knowledge learned from meta-training tasks.

MetaTS: Meta Teacher-Student Network for Multilingual Sequence Labeling with Minimal Supervision

no code implementations • EMNLP 2021 • Zheng Li, Danqing Zhang, Tianyu Cao, Ying WEI, Yiwei Song, Bing Yin

In this work, we explore multilingual sequence labeling with minimal supervision using a single unified model for multiple languages.

Functionally Regionalized Knowledge Transfer for Low-resource Drug Discovery

no code implementations • NeurIPS 2021 • Huaxiu Yao, Ying WEI, Long-Kai Huang, Ding Xue, Junzhou Huang, Zhenhui (Jessie) Li

More recently, there has been a surge of interest in employing machine learning approaches to expedite the drug discovery process where virtual screening for hit discovery and ADMET prediction for lead optimization play essential roles.

Self-Supervised Text Erasing with Controllable Image Synthesis

no code implementations • 27 Apr 2022 • Gangwei Jiang, Shiyao Wang, Tiezheng Ge, Yuning Jiang, Ying WEI, Defu Lian

The synthetic training images with erasure ground-truth are then fed to train a coarse-to-fine erasing network.

Discriminative-Region Attention and Orthogonal-View Generation Model for Vehicle Re-Identification

no code implementations • 28 Apr 2022 • Huadong Li, Yuefeng Wang, Ying WEI, Lin Wang, Li Ge

Finally, the distance between vehicle appearances is represented by the discriminative region features and multi-view features together.

Learning to generate imaginary tasks for improving generalization in meta-learning

no code implementations • 9 Jun 2022 • Yichen Wu, Long-Kai Huang, Ying WEI

The success of meta-learning on existing benchmarks is predicated on the assumption that the distribution of meta-training tasks covers meta-testing tasks.

Wasserstein Distributional Learning

no code implementations • 12 Sep 2022 • Chengliang Tang, Nathan Lenssen, Ying WEI, Tian Zheng

To overcome this fundamental issue, we propose Wasserstein Distributional Learning (WDL), a flexible density-on-scalar regression modeling framework that starts with the Wasserstein distance $W_2$ as a proper metric for the space of density outcomes.

Disentangling Task Relations for Few-shot Text Classification via Self-Supervised Hierarchical Task Clustering

no code implementations • 16 Nov 2022 • Juan Zha, Zheng Li, Ying WEI, Yu Zhang

However, most prior works assume that all the tasks are sampled from a single data source, which cannot adapt to real-world scenarios where tasks are heterogeneous and lie in different distributions.

Hybrid Censored Quantile Regression Forest to Assess the Heterogeneous Effects

no code implementations • 12 Dec 2022 • Huichen Zhu, Yifei Sun, Ying WEI

We propose a variable importance decomposition to measure the impact of a variable on the treatment effect function.

CAT: LoCalization and IdentificAtion Cascade Detection Transformer for Open-World Object Detection

no code implementations • CVPR 2023 • Shuailei Ma, Yuefeng Wang, Jiaqi Fan, Ying WEI, Thomas H. Li, Hongli Liu, Fanbing Lv

Open-world object detection (OWOD), as a more general and challenging goal, requires the model trained from data on known objects to detect both known and unknown objects and incrementally learn to identify these unknown objects.

On the Opportunities of Green Computing: A Survey

no code implementations • 1 Nov 2023 • You Zhou, Xiujing Lin, Xiang Zhang, Maolin Wang, Gangwei Jiang, Huakang Lu, Yupeng Wu, Kai Zhang, Zhe Yang, Kehang Wang, Yongduo Sui, Fengwei Jia, Zuoli Tang, Yao Zhao, Hongxuan Zhang, Tiannuo Yang, Weibo Chen, Yunong Mao, Yi Li, De Bao, Yu Li, Hongrui Liao, Ting Liu, Jingwen Liu, Jinchi Guo, Xiangyu Zhao, Ying WEI, Hong Qian, Qi Liu, Xiang Wang, Wai Kin Chan, Chenliang Li, Yusen Li, Shiyu Yang, Jining Yan, Chao Mou, Shuai Han, Wuxia Jin, Guannan Zhang, Xiaodong Zeng

To tackle the challenges of computing resources and environmental impact of AI, Green Computing has become a hot research topic.

High-fidelity 3D Reconstruction of Plants using Neural Radiance Field

no code implementations • 7 Nov 2023 • Kewei Hu, Ying WEI, Yaoqiang Pan, Hanwen Kang, Chao Chen

Recently, a promising development has emerged in the form of Neural Radiance Field (NeRF), a novel method that utilises neural density fields.

Concept-wise Fine-tuning Matters in Preventing Negative Transfer

no code implementations • ICCV 2023 • Yunqiao Yang, Long-Kai Huang, Ying WEI

A multitude of prevalent pre-trained models mark a major milestone in the development of artificial intelligence, while fine-tuning has been a common practice that enables pretrained models to figure prominently in a wide array of target datasets.

RetroOOD: Understanding Out-of-Distribution Generalization in Retrosynthesis Prediction

no code implementations • 18 Dec 2023 • Yemin Yu, Luotian Yuan, Ying WEI, Hanyu Gao, Xinhai Ye, Zhihua Wang, Fei Wu

Machine learning-assisted retrosynthesis prediction models have been gaining widespread adoption, though their performance often degrades significantly when they are deployed in real-world applications involving out-of-distribution (OOD) molecules or reactions.

Understanding the Multi-modal Prompts of the Pre-trained Vision-Language Model

no code implementations • 18 Dec 2023 • Shuailei Ma, Chen-Wei Xie, Ying WEI, Siyang Sun, Jiaqi Fan, Xiaoyi Bao, Yuxin Guo, Yun Zheng

In this paper, we conduct a direct analysis of the multi-modal prompts by asking the following questions: $(i)$ How do the learned multi-modal prompts improve the recognition performance?

From Words to Molecules: A Survey of Large Language Models in Chemistry

no code implementations • 2 Feb 2024 • Chang Liao, Yemin Yu, Yu Mei, Ying WEI

After that, we explore the diverse applications of LLMs in chemistry, including novel paradigms for their application in chemistry tasks.

Understanding and Patching Compositional Reasoning in LLMs

no code implementations • 22 Feb 2024 • Zhaoyi Li, Gangwei Jiang, Hong Xie, Linqi Song, Defu Lian, Ying WEI

LLMs have marked a revolutionary shift, yet they falter when faced with compositional reasoning tasks.

MoPE-CLIP: Structured Pruning for Efficient Vision-Language Models with Module-wise Pruning Error Metric

no code implementations • 12 Mar 2024 • Haokun Lin, Haoli Bai, Zhili Liu, Lu Hou, Muyi Sun, Linqi Song, Ying WEI, Zhenan Sun

We find that directly using smaller pre-trained models and applying magnitude-based pruning on CLIP models leads to inflexibility and inferior performance.