Search Results for author: Yogish Sabharwal

Found 8 papers, 2 papers with code

PoWER-BERT: Accelerating BERT Inference via Progressive Word-vector Elimination

1 code implementation • ICML 2020 • Saurabh Goyal, Anamitra R. Choudhury, Saurabh M. Raje, Venkatesan T. Chakaravarthy, Yogish Sabharwal, Ashish Verma

We demonstrate that our method attains up to 6.8x reduction in inference time with <1% loss in accuracy when applied over ALBERT, a highly compressed version of BERT.

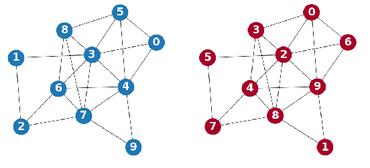

GREED: A Neural Framework for Learning Graph Distance Functions

2 code implementations • 24 Dec 2021 • Rishabh Ranjan, Siddharth Grover, Sourav Medya, Venkatesan Chakaravarthy, Yogish Sabharwal, Sayan Ranu

To elaborate, although GED is a metric, its neural approximations do not provide such a guarantee.

Efficient Inferencing of Compressed Deep Neural Networks

no code implementations • 1 Nov 2017 • Dharma Teja Vooturi, Saurabh Goyal, Anamitra R. Choudhury, Yogish Sabharwal, Ashish Verma

The large number of weights in deep neural networks makes the models difficult to deploy in low-memory environments such as mobile phones and IoT edge devices, as well as "inferencing as a service" environments on the cloud.

Effective Elastic Scaling of Deep Learning Workloads

no code implementations • 24 Jun 2020 • Vaibhav Saxena, K. R. Jayaram, Saurav Basu, Yogish Sabharwal, Ashish Verma

We design a fast dynamic programming based optimizer to solve this problem in real-time to determine jobs that can be scaled up/down, and use this optimizer in an autoscaler to dynamically change the allocated resources and batch sizes of individual DL jobs.

Scheduling Resources for Executing a Partial Set of Jobs

no code implementations • 10 Oct 2012 • Venkatesan Chakaravarthy, Arindam Pal, Sambuddha Roy, Yogish Sabharwal

In this paper, we consider the problem of choosing a minimum cost set of resources for executing a specified set of jobs.

Data Structures and Algorithms

Efficient Scaling of Dynamic Graph Neural Networks

no code implementations • 16 Sep 2021 • Venkatesan T. Chakaravarthy, Shivmaran S. Pandian, Saurabh Raje, Yogish Sabharwal, Toyotaro Suzumura, Shashanka Ubaru

We present distributed algorithms for training dynamic Graph Neural Networks (GNN) on large scale graphs spanning multi-node, multi-GPU systems.

NeuroSED: Learning Subgraph Similarity via Graph Neural Networks

no code implementations • 29 Sep 2021 • Rishabh Ranjan, Siddharth Grover, Sourav Medya, Venkatesan Chakaravarthy, Yogish Sabharwal, Sayan Ranu

Subgraph edit distance (SED) is one of the most expressive measures of subgraph similarity.

Compression of Deep Neural Networks by combining pruning and low rank decomposition

no code implementations • 20 Oct 2018 • Saurabh Goyal, Anamitra R. Choudhury, Vivek Sharma, Yogish Sabharwal, Ashish Verma

The large number of weights in deep neural networks makes the models difficult to deploy in low-memory environments such as mobile phones and IoT edge devices, as well as "inferencing as a service" environments on the cloud.