Search Results for author: Yuefeng Chen

Found 37 papers, 18 papers with code

Bilinear Representation for Language-based Image Editing Using Conditional Generative Adversarial Networks

1 code implementation • 18 Mar 2019 • Xiaofeng Mao, Yuefeng Chen, Yuhong Li, Tao Xiong, Yuan He, Hui Xue

The task of Language-Based Image Editing (LBIE) aims to generate a target image by editing a source image according to a given language description.

Robust Visual Tracking Using Dynamic Classifier Selection with Sparse Representation of Label Noise

no code implementations • 19 Mar 2019 • Yuefeng Chen, Qing Wang

However, the self-updating scheme makes these methods suffer from the drifting problem, because weak classifiers assign incorrect labels to training samples.

Self-Supervised Learning For Few-Shot Image Classification

2 code implementations • 14 Nov 2019 • Da Chen, Yuefeng Chen, Yuhong Li, Feng Mao, Yuan He, Hui Xue

In this paper, we propose to train a more generalized embedding network with self-supervised learning (SSL), which can provide robust representations for downstream tasks by learning from the data itself.

Ranked #4 on Few-Shot Image Classification on Mini-ImageNet - 1-Shot Learning (using extra training data)

Learning To Characterize Adversarial Subspaces

no code implementations • 15 Nov 2019 • Xiaofeng Mao, Yuefeng Chen, Yuhong Li, Yuan He, Hui Xue

To detect these adversarial examples, previous methods use manually designed metrics to characterize the properties of adversarial subspaces, where adversarial examples lie.

Self-supervised Adversarial Training

1 code implementation • 15 Nov 2019 • Kejiang Chen, Hang Zhou, Yuefeng Chen, Xiaofeng Mao, Yuhong Li, Yuan He, Hui Xue, Weiming Zhang, Nenghai Yu

Recent work has demonstrated that neural networks are vulnerable to adversarial examples.

AdvKnn: Adversarial Attacks On K-Nearest Neighbor Classifiers With Approximate Gradients

1 code implementation • 15 Nov 2019 • Xiaodan Li, Yuefeng Chen, Yuan He, Hui Xue

Deep neural networks have been shown to be vulnerable to adversarial examples, i.e., maliciously crafted inputs that cause the target model to misbehave once imperceptible perturbations are added.

Towards Face Encryption by Generating Adversarial Identity Masks

1 code implementation • ICCV 2021 • Xiao Yang, Yinpeng Dong, Tianyu Pang, Hang Su, Jun Zhu, Yuefeng Chen, Hui Xue

As billions of items of personal data are shared through social media and networks, data privacy and security have drawn increasing attention.

GAP++: Learning to generate target-conditioned adversarial examples

no code implementations • 9 Jun 2020 • Xiaofeng Mao, Yuefeng Chen, Yuhong Li, Yuan He, Hui Xue

Unlike previous single-target attack models, our model can conduct target-conditioned attacks by learning the relations between the attack target and the semantics of the image.

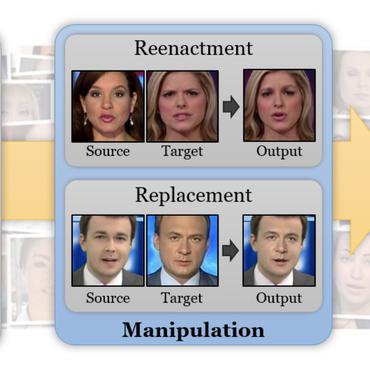

Sharp Multiple Instance Learning for DeepFake Video Detection

no code implementations • 11 Aug 2020 • Xiaodan Li, Yining Lang, Yuefeng Chen, Xiaofeng Mao, Yuan He, Shuhui Wang, Hui Xue, Quan Lu

A sharp MIL (S-MIL) is proposed, which builds a direct mapping from instance embeddings to the bag prediction, rather than mapping instance embeddings to instance predictions and then to the bag prediction as in traditional MIL.
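To make the contrast between the two mappings concrete, here is a minimal NumPy sketch. It is a deliberately simplified illustration under our own assumptions, not the S-MIL formulation from the paper: the "traditional" path scores each instance (e.g., a video frame) with a hypothetical linear classifier and then max-pools the instance scores, while the "direct" path pools the instance embeddings first and applies the classifier once. The weights `w`, `b` and the choice of max and mean pooling are hypothetical stand-ins.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def traditional_mil(instances, w, b):
    """Instance embeddings -> instance predictions -> bag prediction (max over instances)."""
    instance_probs = sigmoid(instances @ w + b)  # one probability per instance
    return float(instance_probs.max())

def direct_mil(instances, w, b):
    """Instance embeddings -> bag prediction directly (pool embeddings, classify once)."""
    bag_embedding = instances.mean(axis=0)  # aggregate in embedding space (assumed mean pooling)
    return float(sigmoid(bag_embedding @ w + b))

rng = np.random.default_rng(0)
video = rng.normal(size=(8, 16))  # hypothetical bag: 8 frames, 16-dim embedding each
w = rng.normal(size=16)           # hypothetical shared linear classifier
b = 0.0
print(traditional_mil(video, w, b), direct_mil(video, w, b))
```

With these particular pooling choices the max-based score is never lower than the pooled-embedding score, since the mean of the instance logits cannot exceed their maximum and the sigmoid is monotonic.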

Composite Adversarial Attacks

1 code implementation • 10 Dec 2020 • Xiaofeng Mao, Yuefeng Chen, Shuhui Wang, Hang Su, Yuan He, Hui Xue

Adversarial attacks are techniques for deceiving machine learning (ML) models, and they provide a way to evaluate adversarial robustness.

Adversarial Examples Detection beyond Image Space

1 code implementation • 23 Feb 2021 • Kejiang Chen, Yuefeng Chen, Hang Zhou, Chuan Qin, Xiaofeng Mao, Weiming Zhang, Nenghai Yu

To detect both few-perturbation attacks and large-perturbation attacks, we propose a method that goes beyond the image space using a two-stream architecture, in which the image stream focuses on pixel artifacts and the gradient stream handles confidence artifacts.

Spatial-Phase Shallow Learning: Rethinking Face Forgery Detection in Frequency Domain

no code implementations • CVPR 2021 • Honggu Liu, Xiaodan Li, Wenbo Zhou, Yuefeng Chen, Yuan He, Hui Xue, Weiming Zhang, Nenghai Yu

The remarkable success of face forgery techniques has received considerable attention in computer vision due to security concerns.

QAIR: Practical Query-efficient Black-Box Attacks for Image Retrieval

no code implementations • CVPR 2021 • Xiaodan Li, Jinfeng Li, Yuefeng Chen, Shaokai Ye, Yuan He, Shuhui Wang, Hang Su, Hui Xue

Comprehensive experiments show that the proposed attack achieves a high attack success rate with few queries against the image retrieval systems under the black-box setting.

Adversarial Laser Beam: Effective Physical-World Attack to DNNs in a Blink

1 code implementation • CVPR 2021 • Ranjie Duan, Xiaofeng Mao, A. K. Qin, Yun Yang, Yuefeng Chen, Shaokai Ye, Yuan He

Though it is well known that the performance of deep neural networks (DNNs) degrades under certain light conditions, there has been no study of the threat posed by light beams emitted from a physical source acting as adversarial attackers against DNNs in real-world scenarios.

Towards Robust Vision Transformer

2 code implementations • CVPR 2022 • Xiaofeng Mao, Gege Qi, Yuefeng Chen, Xiaodan Li, Ranjie Duan, Shaokai Ye, Yuan He, Hui Xue

By using and combining robust components as building blocks of ViTs, we propose the Robust Vision Transformer (RVT), a new vision transformer with superior performance and strong robustness.

Ranked #24 on Domain Generalization on ImageNet-C

AdvDrop: Adversarial Attack to DNNs by Dropping Information

1 code implementation • ICCV 2021 • Ranjie Duan, Yuefeng Chen, Dantong Niu, Yun Yang, A. K. Qin, Yuan He

Humans can easily recognize visual objects even when information is lost, e.g., when most details are removed and only the contour is preserved, as in a cartoon.

D²ETR: Decoder-Only DETR with Computationally Efficient Cross-Scale Attention

no code implementations • 29 Sep 2021 • Junyu Lin, Xiaofeng Mao, Yuefeng Chen, Lei Xu, Yuan He, Hui Xue

DETR is the first fully end-to-end detector that predicts a final set of predictions without post-processing.

Adversarial Attacks on ML Defense Models Competition

1 code implementation • 15 Oct 2021 • Yinpeng Dong, Qi-An Fu, Xiao Yang, Wenzhao Xiang, Tianyu Pang, Hang Su, Jun Zhu, Jiayu Tang, Yuefeng Chen, Xiaofeng Mao, Yuan He, Hui Xue, Chao Li, Ye Liu, Qilong Zhang, Lianli Gao, Yunrui Yu, Xitong Gao, Zhe Zhao, Daquan Lin, Jiadong Lin, Chuanbiao Song, ZiHao Wang, Zhennan Wu, Yang Guo, Jiequan Cui, Xiaogang Xu, Pengguang Chen

Due to the vulnerability of deep neural networks (DNNs) to adversarial examples, a large number of defense techniques have been proposed to alleviate this problem in recent years.

Unrestricted Adversarial Attacks on ImageNet Competition

1 code implementation • 17 Oct 2021 • Yuefeng Chen, Xiaofeng Mao, Yuan He, Hui Xue, Chao Li, Yinpeng Dong, Qi-An Fu, Xiao Yang, Tianyu Pang, Hang Su, Jun Zhu, Fangcheng Liu, Chao Zhang, Hongyang Zhang, Yichi Zhang, Shilong Liu, Chang Liu, Wenzhao Xiang, Yajie Wang, Huipeng Zhou, Haoran Lyu, Yidan Xu, Zixuan Xu, Taoyu Zhu, Wenjun Li, Xianfeng Gao, Guoqiu Wang, Huanqian Yan, Ying Guo, Chaoning Zhang, Zheng Fang, Yang Wang, Bingyang Fu, Yunfei Zheng, Yekui Wang, Haorong Luo, Zhen Yang

Many works have investigated adversarial attacks or defenses in settings where a bounded and imperceptible perturbation can be added to the input.

Beyond ImageNet Attack: Towards Crafting Adversarial Examples for Black-box Domains

2 code implementations • ICLR 2022 • Qilong Zhang, Xiaodan Li, Yuefeng Chen, Jingkuan Song, Lianli Gao, Yuan He, Hui Xue

Notably, our methods outperform state-of-the-art approaches by up to 7.71% (towards coarse-grained domains) and 25.91% (towards fine-grained domains) on average.

D²ETR: Decoder-Only DETR with Computationally Efficient Cross-Scale Attention

no code implementations • 2 Mar 2022 • Junyu Lin, Xiaofeng Mao, Yuefeng Chen, Lei Xu, Yuan He, Hui Xue

DETR is the first fully end-to-end detector that predicts a final set of predictions without post-processing.

Enhance the Visual Representation via Discrete Adversarial Training

1 code implementation • 16 Sep 2022 • Xiaofeng Mao, Yuefeng Chen, Ranjie Duan, Yao Zhu, Gege Qi, Shaokai Ye, Xiaodan Li, Rong Zhang, Hui Xue

To borrow the advantages of NLP-style adversarial training (AT), we propose Discrete Adversarial Training (DAT).

Ranked #1 on Domain Generalization on Stylized-ImageNet

MaxMatch: Semi-Supervised Learning with Worst-Case Consistency

no code implementations • 26 Sep 2022 • Yangbangyan Jiang, Xiaodan Li, Yuefeng Chen, Yuan He, Qianqian Xu, Zhiyong Yang, Xiaochun Cao, Qingming Huang

In recent years, great progress has been made in incorporating unlabeled data via semi-supervised learning (SSL) to overcome the problem of insufficient supervision.

Towards Understanding and Boosting Adversarial Transferability from a Distribution Perspective

2 code implementations • 9 Oct 2022 • Yao Zhu, Yuefeng Chen, Xiaodan Li, Kejiang Chen, Yuan He, Xiang Tian, Bolun Zheng, Yaowu Chen, Qingming Huang

We conduct comprehensive transfer attacks against multiple DNNs to demonstrate the effectiveness of the proposed method.

Boosting Out-of-distribution Detection with Typical Features

no code implementations • 9 Oct 2022 • Yao Zhu, Yuefeng Chen, Chuanlong Xie, Xiaodan Li, Rong Zhang, Hui Xue, Xiang Tian, Bolun Zheng, Yaowu Chen

Out-of-distribution (OOD) detection is a critical task for ensuring the reliability and safety of deep neural networks in real-world scenarios.

Prompt-based Connective Prediction Method for Fine-grained Implicit Discourse Relation Recognition

1 code implementation • 13 Oct 2022 • Hao Zhou, Man Lan, Yuanbin Wu, Yuefeng Chen, Meirong Ma

Due to the absence of connectives, implicit discourse relation recognition (IDRR) is still a challenging and crucial task in discourse analysis.

Defects of Convolutional Decoder Networks in Frequency Representation

no code implementations • 17 Oct 2022 • Ling Tang, Wen Shen, Zhanpeng Zhou, Yuefeng Chen, Quanshi Zhang

In this paper, we prove representation defects of cascaded convolutional decoder networks in terms of their capacity to represent different frequency components of an input sample.

Context-Aware Robust Fine-Tuning

no code implementations • 29 Nov 2022 • Xiaofeng Mao, Yuefeng Chen, Xiaojun Jia, Rong Zhang, Hui Xue, Zhao Li

Contrastive Language-Image Pre-trained (CLIP) models have the zero-shot ability to classify whether an image belongs to "[CLASS]" by using the similarity between the image and the prompt sentence "a [CONTEXT] of [CLASS]".

Ranked #1 on Domain Generalization on VLCS
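The zero-shot mechanism described in the Context-Aware Robust Fine-Tuning snippet (scoring an image against prompts of the form "a [CONTEXT] of [CLASS]") can be sketched as follows. This is a minimal NumPy illustration, not CLIP's actual API: the text and image encoders are replaced by hypothetical fixed embedding vectors, and the context word "photo" and the temperature of 100 are assumptions chosen for the example.

```python
import numpy as np

def normalize(x):
    # Scale vectors to unit length so that dot products become cosine similarities.
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def build_prompts(classes, context="photo"):
    # Fill the template "a [CONTEXT] of [CLASS]" for every class name.
    return [f"a {context} of {c}" for c in classes]

def zero_shot_classify(image_emb, prompt_embs, temperature=100.0):
    """Cosine similarity between one image and each prompt, softmax over classes."""
    sims = normalize(prompt_embs) @ normalize(image_emb)
    logits = temperature * sims
    probs = np.exp(logits - logits.max())  # numerically stable softmax
    return probs / probs.sum()

classes = ["dog", "cat", "car"]
prompts = build_prompts(classes)
# Hypothetical embeddings standing in for the outputs of CLIP's text and image encoders.
prompt_embs = np.array([[1.0, 0.0, 0.0],
                        [0.0, 1.0, 0.0],
                        [0.0, 0.0, 1.0]])
image_emb = np.array([0.9, 0.2, 0.1])
probs = zero_shot_classify(image_emb, prompt_embs)
print(prompts[int(np.argmax(probs))])  # prints "a photo of dog"
```

Because the class set is defined only by the prompt strings, new classes can be added at inference time by encoding additional prompts, which is what makes the classification zero-shot.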

Rethinking Out-of-Distribution Detection From a Human-Centric Perspective

no code implementations • 30 Nov 2022 • Yao Zhu, Yuefeng Chen, Xiaodan Li, Rong Zhang, Hui Xue, Xiang Tian, Rongxin Jiang, Bolun Zheng, Yaowu Chen

Additionally, our experiments demonstrate that model selection is non-trivial for OOD detection and should be considered an integral part of the proposed method, which differs from the claim in existing works that proposed methods are universal across different models.

A Comprehensive Study on Robustness of Image Classification Models: Benchmarking and Rethinking

no code implementations • 28 Feb 2023 • Chang Liu, Yinpeng Dong, Wenzhao Xiang, Xiao Yang, Hang Su, Jun Zhu, Yuefeng Chen, Yuan He, Hui Xue, Shibao Zheng

In our benchmark, we evaluate the robustness of 55 typical deep learning models on ImageNet with diverse architectures (e.g., CNNs, Transformers) and learning algorithms (e.g., normal supervised training, pre-training, adversarial training) under numerous adversarial attacks and out-of-distribution (OOD) datasets.

Information-containing Adversarial Perturbation for Combating Facial Manipulation Systems

no code implementations • 21 Mar 2023 • Yao Zhu, Yuefeng Chen, Xiaodan Li, Rong Zhang, Xiang Tian, Bolun Zheng, Yaowu Chen

We use an encoder to map a facial image and its identity message to a cross-model adversarial example, which can disrupt multiple facial manipulation systems to achieve proactive protection.

ImageNet-E: Benchmarking Neural Network Robustness via Attribute Editing

2 code implementations • CVPR 2023 • Xiaodan Li, Yuefeng Chen, Yao Zhu, Shuhui Wang, Rong Zhang, Hui Xue

We also evaluate some robust models including both adversarially trained models and other robust trained models and find that some models show worse robustness against attribute changes than vanilla models.

COCO-O: A Benchmark for Object Detectors under Natural Distribution Shifts

1 code implementation • ICCV 2023 • Xiaofeng Mao, Yuefeng Chen, Yao Zhu, Da Chen, Hang Su, Rong Zhang, Hui Xue

To give a more comprehensive robustness assessment, we introduce COCO-O(ut-of-distribution), a test dataset based on COCO with 6 types of natural distribution shifts.

Robust Automatic Speech Recognition via WavAugment Guided Phoneme Adversarial Training

no code implementations • 24 Jul 2023 • Gege Qi, Yuefeng Chen, Xiaofeng Mao, Xiaojun Jia, Ranjie Duan, Rong Zhang, Hui Xue

Developing a practically robust automatic speech recognition (ASR) system is challenging, since the model should not only maintain its original performance on clean samples but also remain consistently effective under small volume perturbations and large domain shifts.

Revisiting and Exploring Efficient Fast Adversarial Training via LAW: Lipschitz Regularization and Auto Weight Averaging

no code implementations • 22 Aug 2023 • Xiaojun Jia, Yuefeng Chen, Xiaofeng Mao, Ranjie Duan, Jindong Gu, Rong Zhang, Hui Xue, Xiaochun Cao

In this paper, we conduct a comprehensive study of over 10 fast adversarial training methods in terms of adversarial robustness and training costs.

Model Inversion Attack via Dynamic Memory Learning

no code implementations • 24 Aug 2023 • Gege Qi, Yuefeng Chen, Xiaofeng Mao, Binyuan Hui, Xiaodan Li, Rong Zhang, Hui Xue

Model Inversion (MI) attacks aim to recover the private training data from the target model, which has raised security concerns about the deployment of DNNs in practice.

Enhancing Few-shot CLIP with Semantic-Aware Fine-Tuning

no code implementations • 8 Nov 2023 • Yao Zhu, Yuefeng Chen, Wei Wang, Xiaofeng Mao, Xiu Yan, Yue Wang, Zhigang Li, Wang Lu, Jindong Wang, Xiangyang Ji

Hence, we propose fine-tuning the parameters of the attention pooling layer during the training process to encourage the model to focus on task-specific semantics.