Search Results for author: Zhiwen Fan

Found 39 papers, 17 papers with code

Cascade Cost Volume for High-Resolution Multi-View Stereo and Stereo Matching

4 code implementations • CVPR 2020 • Xiaodong Gu, Zhiwen Fan, Zuozhuo Dai, Siyu Zhu, Feitong Tan, Ping Tan

Deep multi-view stereo (MVS) and stereo matching approaches generally construct 3D cost volumes to regularize and regress the output depth or disparity.

Ranked #12 on Point Clouds on Tanks and Temples

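To make the cost-volume idea concrete, here is a toy sketch on a single 1D scanline. This is an assumption for brevity: real MVS and stereo networks build H×W×D volumes from learned features and regularize them with 3D convolutions, which this sketch omits. The matching cost is the absolute intensity difference for each candidate disparity, and the disparity is regressed with a soft argmin, a common differentiable choice in deep stereo. The function names are hypothetical, and the cascade stage that gives the paper its name is not implemented.

```python
import math

LARGE_COST = 1e6  # cost assigned when the candidate match falls outside the image


def build_cost_volume(left, right, max_disp):
    """Toy cost volume for one scanline: cost[x][d] = |left[x] - right[x - d]|."""
    volume = []
    for x in range(len(left)):
        row = []
        for d in range(max_disp + 1):
            row.append(abs(left[x] - right[x - d]) if x - d >= 0 else LARGE_COST)
        volume.append(row)
    return volume


def soft_argmin(costs, temperature=0.1):
    """Differentiable disparity: expected d under softmax(-cost / temperature)."""
    best = min(costs)
    # Subtract the minimum before exponentiating for numerical stability.
    weights = [math.exp(-(c - best) / temperature) for c in costs]
    total = sum(weights)
    return sum(d * w for d, w in enumerate(weights)) / total


# Usage: the left scanline is the right one shifted by a true disparity of 2.
right = [0, 7, 2, 9, 4, 11, 6, 13, 8, 15]
left = [0, 0] + right[:-2]
volume = build_cost_volume(left, right, max_disp=4)
disparities = [soft_argmin(row) for row in volume]
```

Wherever a valid match exists at the true shift, the soft argmin lands very close to 2; a lower temperature sharpens the estimate toward a hard argmin, at the cost of less informative gradients during training.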

LightGaussian: Unbounded 3D Gaussian Compression with 15x Reduction and 200+ FPS

1 code implementation • 28 Nov 2023 • Zhiwen Fan, Kevin Wang, Kairun Wen, Zehao Zhu, Dejia Xu, Zhangyang Wang

Recent advancements in real-time neural rendering using point-based techniques have paved the way for the widespread adoption of 3D representations.

SinNeRF: Training Neural Radiance Fields on Complex Scenes from a Single Image

1 code implementation • 2 Apr 2022 • Dejia Xu, Yifan Jiang, Peihao Wang, Zhiwen Fan, Humphrey Shi, Zhangyang Wang

Despite the rapid development of Neural Radiance Fields (NeRF), the need for dense view coverage largely prevents their wider application.

NeuralLift-360: Lifting An In-the-wild 2D Photo to A 3D Object with 360° Views

1 code implementation • 29 Nov 2022 • Dejia Xu, Yifan Jiang, Peihao Wang, Zhiwen Fan, Yi Wang, Zhangyang Wang

In this work, we study the challenging task of lifting a single image to a 3D object and, for the first time, demonstrate the ability to generate a plausible 3D object with 360° views that correspond well with the given reference image.

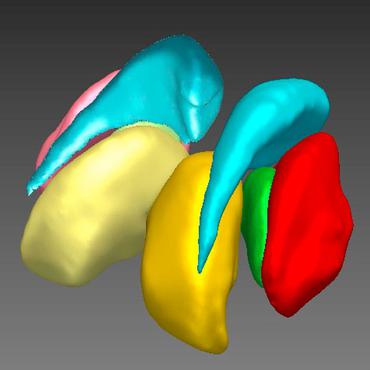

NeRF-SOS: Any-View Self-supervised Object Segmentation on Complex Scenes

1 code implementation • 19 Sep 2022 • Zhiwen Fan, Peihao Wang, Yifan Jiang, Xinyu Gong, Dejia Xu, Zhangyang Wang

Our framework, called NeRF with Self-supervised Object Segmentation (NeRF-SOS), couples object segmentation with a neural radiance field to segment objects in any view of a scene.

Aug-NeRF: Training Stronger Neural Radiance Fields with Triple-Level Physically-Grounded Augmentations

1 code implementation • CVPR 2022 • Tianlong Chen, Peihao Wang, Zhiwen Fan, Zhangyang Wang

Inspired by that, we propose Augmented NeRF (Aug-NeRF), which for the first time brings robust data augmentation to the regularization of NeRF training.

POPE: 6-DoF Promptable Pose Estimation of Any Object, in Any Scene, with One Reference

1 code implementation • 25 May 2023 • Zhiwen Fan, Panwang Pan, Peihao Wang, Yifan Jiang, Dejia Xu, Hanwen Jiang, Zhangyang Wang

To mitigate this issue, we propose a general paradigm for object pose estimation, called Promptable Object Pose Estimation (POPE).

Unified Implicit Neural Stylization

1 code implementation • 5 Apr 2022 • Zhiwen Fan, Yifan Jiang, Peihao Wang, Xinyu Gong, Dejia Xu, Zhangyang Wang

Representing visual signals by implicit representations (e.g., a coordinate-based deep network) has become prevalent across many vision tasks.

M³ViT: Mixture-of-Experts Vision Transformer for Efficient Multi-task Learning with Model-Accelerator Co-design

1 code implementation • 26 Oct 2022 • Hanxue Liang, Zhiwen Fan, Rishov Sarkar, Ziyu Jiang, Tianlong Chen, Kai Zou, Yu Cheng, Cong Hao, Zhangyang Wang

However, when deploying MTL onto those real-world systems that are often resource-constrained or latency-sensitive, two prominent challenges arise: (i) during training, simultaneously optimizing all tasks is often difficult due to gradient conflicts across tasks; (ii) at inference, current MTL regimes have to activate nearly the entire model even to just execute a single task.
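Challenge (ii) is what a task-conditioned mixture of experts targets: only a few experts run for a given task. The sketch below shows that routing pattern in its simplest form. It assumes a hypothetical fixed table of gate logits per task and scalar toy experts; in a real MoE ViT the gate is a learned network and the experts are feed-forward blocks, so this is an illustration of sparse activation, not M³ViT's implementation.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a short list of floats."""
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def task_moe_forward(x, task, experts, gate_logits, k=2):
    """Run input x through only the top-k experts selected for `task`.

    gate_logits[task] is a hypothetical fixed score per expert; in a real
    task-conditioned MoE the gate is a learned network.
    """
    logits = gate_logits[task]
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    weights = softmax([logits[i] for i in chosen])
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen


# Usage: four toy experts that record when they are called.
calls = []


def make_expert(index):
    def expert(x):
        calls.append(index)
        return (index + 1) * x
    return expert


experts = [make_expert(i) for i in range(4)]
gate_logits = {"depth": [0.1, 2.0, -1.0, 1.5], "segmentation": [1.0, -0.5, 3.0, 0.0]}
y, chosen = task_moe_forward(1.0, "depth", experts, gate_logits, k=2)
```

For the "depth" task only experts 1 and 3 are ever invoked, so the compute per task scales with k rather than with the total number of experts.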

Edge-MoE: Memory-Efficient Multi-Task Vision Transformer Architecture with Task-level Sparsity via Mixture-of-Experts

1 code implementation • 30 May 2023 • Rishov Sarkar, Hanxue Liang, Zhiwen Fan, Zhangyang Wang, Cong Hao

Computer vision researchers are embracing two promising paradigms: Vision Transformers (ViTs) and Multi-task Learning (MTL), which both show great performance but are computation-intensive, given the quadratic complexity of self-attention in ViT and the need to activate an entire large MTL model for one task.
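The quadratic cost of self-attention is easy to see with a little arithmetic. This sketch assumes the common ViT setting of a 224×224 input split into 16×16 patches (illustrative values, not taken from the paper) and ignores the class token: 196 tokens give 38,416 pairwise scores per head per layer, and doubling the image side to 448 gives 784 tokens and 614,656 scores, a 16× increase.

```python
def attention_cost(image_size, patch_size, heads=1):
    """Token count and pairwise attention scores per layer for a square ViT input.

    The class token is ignored for simplicity.
    """
    tokens = (image_size // patch_size) ** 2
    scores = heads * tokens * tokens  # every token attends to every token
    return tokens, scores


base_tokens, base_scores = attention_cost(224, 16)
hi_tokens, hi_scores = attention_cost(448, 16)
```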

Can We Solve 3D Vision Tasks Starting from A 2D Vision Transformer?

2 code implementations • 15 Sep 2022 • Yi Wang, Zhiwen Fan, Tianlong Chen, Hehe Fan, Zhangyang Wang

Vision Transformers (ViTs) have proven effective at solving 2D image understanding tasks when trained on large-scale image datasets and, on a largely separate track, at modeling the 3D visual world, such as voxels or point clouds.

CADTransformer: Panoptic Symbol Spotting Transformer for CAD Drawings

1 code implementation • CVPR 2022 • Zhiwen Fan, Tianlong Chen, Peihao Wang, Zhangyang Wang

CADTransformer tokenizes directly from the set of graphical primitives in CAD drawings and jointly optimizes line-grained semantic and instance symbol spotting with a pair of prediction heads.

Enhancing NeRF akin to Enhancing LLMs: Generalizable NeRF Transformer with Mixture-of-View-Experts

1 code implementation • ICCV 2023 • Wenyan Cong, Hanxue Liang, Peihao Wang, Zhiwen Fan, Tianlong Chen, Mukund Varma, Yi Wang, Zhangyang Wang

Cross-scene generalizable NeRF models, which can directly synthesize novel views of unseen scenes, have become a new focus of NeRF research.

Neural Implicit Dictionary via Mixture-of-Expert Training

1 code implementation • 8 Jul 2022 • Peihao Wang, Zhiwen Fan, Tianlong Chen, Zhangyang Wang

In this paper, we present a generic INR framework that achieves both data and training efficiency by learning a Neural Implicit Dictionary (NID) from a data collection and representing an INR as a functional combination of basis functions sampled from the dictionary.
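The phrase "functional combination of basis functions sampled from the dictionary" can be read as f(x) = Σ_j c_j φ_{i_j}(x), where only a few dictionary entries are selected per signal. The sketch below assumes fixed sinusoids as a hypothetical stand-in for the learned basis networks, and hand-picked gate scores and coefficients; it shows the sparse selection and combination step only, not how NID trains its dictionary with mixture-of-experts.

```python
import math


def make_dictionary(num_basis):
    """Hypothetical dictionary of fixed sinusoid bases: phi_k(x) = sin((k + 1) * pi * x)."""
    return [lambda x, k=k: math.sin((k + 1) * math.pi * x) for k in range(num_basis)]


def select_basis(gate_scores, k):
    """Sparse selection: indices of the k highest-scoring basis functions."""
    return sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)[:k]


def evaluate_signal(x, dictionary, indices, coeffs):
    """f(x) = sum_j coeffs[j] * phi_{indices[j]}(x)."""
    return sum(c * dictionary[i](x) for i, c in zip(indices, coeffs))


dictionary = make_dictionary(8)
gate_scores = [0.1, 0.9, 0.3, 0.8, 0.0, 0.7, 0.2, 0.05]  # hypothetical per-signal gate output
indices = select_basis(gate_scores, k=3)
coeffs = [1.0, 0.5, -0.25]  # hypothetical per-signal coefficients
value = evaluate_signal(0.25, dictionary, indices, coeffs)
```

Here the gate picks bases 1, 3, and 5, and at x = 0.25 the signal evaluates to 1·1 + 0.5·0 + (-0.25)·(-1) = 1.25. Because the dictionary is shared, fitting a new signal only requires choosing indices and coefficients.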

Learning to Estimate 6DoF Pose from Limited Data: A Few-Shot, Generalizable Approach using RGB Images

1 code implementation • 13 Jun 2023 • Panwang Pan, Zhiwen Fan, Brandon Y. Feng, Peihao Wang, Chenxin Li, Zhangyang Wang

The accurate estimation of six degrees-of-freedom (6DoF) object poses is essential for many applications in robotics and augmented reality.

Symbolic Visual Reinforcement Learning: A Scalable Framework with Object-Level Abstraction and Differentiable Expression Search

1 code implementation • 30 Dec 2022 • Wenqing Zheng, S P Sharan, Zhiwen Fan, Kevin Wang, Yihan Xi, Zhangyang Wang

Learning efficient and interpretable policies has been a challenging task in reinforcement learning (RL), particularly in the visual RL setting with complex scenes.

INR-Arch: A Dataflow Architecture and Compiler for Arbitrary-Order Gradient Computations in Implicit Neural Representation Processing

1 code implementation • 11 Aug 2023 • Stefan Abi-Karam, Rishov Sarkar, Dejia Xu, Zhiwen Fan, Zhangyang Wang, Cong Hao

In this work, we introduce INR-Arch, a framework that transforms the computation graph of an nth-order gradient into a hardware-optimized dataflow architecture.

Joint CS-MRI Reconstruction and Segmentation with a Unified Deep Network

no code implementations • 6 May 2018 • Liyan Sun, Zhiwen Fan, Yue Huang, Xinghao Ding, John Paisley

The need for fast acquisition and automatic analysis of MRI data is growing in the age of big data.

Residual-Guide Feature Fusion Network for Single Image Deraining

no code implementations • 20 Apr 2018 • Zhiwen Fan, Huafeng Wu, Xueyang Fu, Yue Huang, Xinghao Ding

Single-image rain streak removal is extremely important, since rainy images adversely affect many computer vision systems.

A Deep Information Sharing Network for Multi-contrast Compressed Sensing MRI Reconstruction

no code implementations • 10 Apr 2018 • Liyan Sun, Zhiwen Fan, Yue Huang, Xinghao Ding, John Paisley

In multi-contrast magnetic resonance imaging (MRI), compressed sensing theory can accelerate imaging by sampling fewer measurements within each contrast.

A Segmentation-aware Deep Fusion Network for Compressed Sensing MRI

no code implementations • ECCV 2018 • Zhiwen Fan, Liyan Sun, Xinghao Ding, Yue Huang, Congbo Cai, John Paisley

In this paper, we propose a segmentation-aware deep fusion network called SADFN for compressed sensing MRI.

A Divide-and-Conquer Approach to Compressed Sensing MRI

no code implementations • 27 Mar 2018 • Liyan Sun, Zhiwen Fan, Xinghao Ding, Congbo Cai, Yue Huang, John Paisley

Compressed sensing (CS) theory assures us that we can accurately reconstruct magnetic resonance images using fewer k-space measurements than the Nyquist sampling rate requires.
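A toy illustration of what "fewer k-space measurements" means in practice. It assumes a 1D signal and a naive DFT in place of a real 2D MRI acquisition, plus a hypothetical sampling mask (low frequencies plus every fourth line). Keeping 8 of 16 coefficients gives a 2× acceleration; the naive zero-filled inverse then shows the aliasing error that CS reconstruction, or the deep networks listed on this page, must remove using prior knowledge. No CS solver is implemented here.

```python
import cmath


def dft(signal):
    """Naive O(n^2) discrete Fourier transform (stand-in for k-space acquisition)."""
    n = len(signal)
    return [sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse of dft()."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def undersample(kspace, mask):
    """Keep measured k-space samples (mask True) and zero-fill the rest."""
    return [v if keep else 0j for v, keep in zip(kspace, mask)]


n = 16
image = [1.0 if 5 <= t <= 8 else 0.0 for t in range(n)]  # toy 1D "image"
kspace = dft(image)
# Hypothetical mask: low frequencies (|k| <= 2, with wrap-around) plus every 4th line.
mask = [k % 4 == 0 or min(k, n - k) <= 2 for k in range(n)]
acceleration = n / sum(mask)
zero_filled = [v.real for v in idft(undersample(kspace, mask))]
full = [v.real for v in idft(kspace)]
```

With all 16 coefficients the inverse transform recovers the signal exactly; with half of them the zero-filled result deviates noticeably.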

A Deep Error Correction Network for Compressed Sensing MRI

no code implementations • 23 Mar 2018 • Liyan Sun, Zhiwen Fan, Yue Huang, Xinghao Ding, John Paisley

Existing CS-MRI algorithms can serve as the template module for guiding the reconstruction.

MeshMVS: Multi-View Stereo Guided Mesh Reconstruction

no code implementations • 17 Oct 2020 • Rakesh Shrestha, Zhiwen Fan, Qingkun Su, Zuozhuo Dai, Siyu Zhu, Ping Tan

Deep-learning-based 3D shape generation methods generally utilize latent features extracted from color images to encode the semantics of objects and guide the shape generation process.

FloorPlanCAD: A Large-Scale CAD Drawing Dataset for Panoptic Symbol Spotting

no code implementations • ICCV 2021 • Zhiwen Fan, Lingjie Zhu, Honghua Li, Xiaohao Chen, Siyu Zhu, Ping Tan

The proposed CNN-GCN method achieved state-of-the-art (SOTA) performance on the task of semantic symbol spotting and helped us build a baseline network for the panoptic symbol spotting task.

Reasoning With Hierarchical Symbols: Reclaiming Symbolic Policies For Visual Reinforcement Learning

no code implementations • 29 Sep 2021 • Wenqing Zheng, S P Sharan, Zhiwen Fan, Zhangyang Wang

Deep vision models are nowadays widely integrated into visual reinforcement learning (RL) to parameterize the policy networks.

Signal Processing for Implicit Neural Representations

no code implementations • 17 Oct 2022 • Dejia Xu, Peihao Wang, Yifan Jiang, Zhiwen Fan, Zhangyang Wang

We answer this question by proposing an implicit neural signal processing network, dubbed INSP-Net, via differential operators on INR.
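Because an INR is a differentiable function of its input coordinates, differential operators can be evaluated exactly at any point rather than approximated on a pixel grid. A minimal sketch, assuming a hypothetical one-hidden-layer sine-activated network with hand-picked weights; the derivative comes from the chain rule, and a central finite difference is used only as a cross-check. This does not reproduce INSP-Net, which the source describes as building on differential operators on INRs.

```python
import math


class TinySineINR:
    """Hypothetical 1D INR with one sine-activated hidden layer:
    f(x) = sum_i w_i * sin(omega * (a_i * x + b_i)).
    """

    def __init__(self, a, b, w, omega=3.0):
        self.a, self.b, self.w, self.omega = a, b, w, omega

    def __call__(self, x):
        return sum(wi * math.sin(self.omega * (ai * x + bi))
                   for ai, bi, wi in zip(self.a, self.b, self.w))

    def grad(self, x):
        """Exact df/dx via the chain rule; no sampling grid is needed."""
        return sum(wi * self.omega * ai * math.cos(self.omega * (ai * x + bi))
                   for ai, bi, wi in zip(self.a, self.b, self.w))


def central_difference(f, x, h=1e-5):
    """Numerical derivative used only to cross-check grad()."""
    return (f(x + h) - f(x - h)) / (2 * h)


inr = TinySineINR(a=[1.0, -0.5, 2.0], b=[0.0, 0.3, -0.1], w=[0.5, 1.0, -0.2])
exact = inr.grad(0.3)
approx = central_difference(inr, 0.3)
```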

Data-Model-Circuit Tri-Design for Ultra-Light Video Intelligence on Edge Devices

no code implementations • 16 Oct 2022 • Yimeng Zhang, Akshay Karkal Kamath, Qiucheng Wu, Zhiwen Fan, Wuyang Chen, Zhangyang Wang, Shiyu Chang, Sijia Liu, Cong Hao

In this paper, we propose a data-model-hardware tri-design framework for high-throughput, low-cost, and high-accuracy multi-object tracking (MOT) on High-Definition (HD) video streams.

StegaNeRF: Embedding Invisible Information within Neural Radiance Fields

no code implementations • ICCV 2023 • Chenxin Li, Brandon Y. Feng, Zhiwen Fan, Panwang Pan, Zhangyang Wang

Recent advances in neural rendering suggest a future of widespread visual data distribution through shared NeRF model weights.

NeuralLift-360: Lifting an In-the-Wild 2D Photo to a 3D Object With 360° Views

no code implementations • CVPR 2023 • Dejia Xu, Yifan Jiang, Peihao Wang, Zhiwen Fan, Yi Wang, Zhangyang Wang

In this work, we study the challenging task of lifting a single image to a 3D object and, for the first time, demonstrate the ability to generate a plausible 3D object with 360° views that correspond well with the given reference image.

Pose-Free Generalizable Rendering Transformer

no code implementations • 5 Oct 2023 • Zhiwen Fan, Panwang Pan, Peihao Wang, Yifan Jiang, Hanwen Jiang, Dejia Xu, Zehao Zhu, Dilin Wang, Zhangyang Wang

To address this challenge, we introduce PF-GRT, a new Pose-Free framework for Generalizable Rendering Transformer, eliminating the need for pre-computed camera poses and instead leveraging feature-matching learned directly from data.

FSGS: Real-Time Few-shot View Synthesis using Gaussian Splatting

no code implementations • 1 Dec 2023 • Zehao Zhu, Zhiwen Fan, Yifan Jiang, Zhangyang Wang

Novel view synthesis from limited observations remains an important and persistent task.

Feature 3DGS: Supercharging 3D Gaussian Splatting to Enable Distilled Feature Fields

no code implementations • 6 Dec 2023 • Shijie Zhou, Haoran Chang, Sicheng Jiang, Zhiwen Fan, Zehao Zhu, Dejia Xu, Pradyumna Chari, Suya You, Zhangyang Wang, Achuta Kadambi

In this work, we go one step further: in addition to radiance field rendering, we enable 3D Gaussian splatting on arbitrary-dimension semantic features via 2D foundation model distillation.

SteinDreamer: Variance Reduction for Text-to-3D Score Distillation via Stein Identity

no code implementations • 31 Dec 2023 • Peihao Wang, Zhiwen Fan, Dejia Xu, Dilin Wang, Sreyas Mohan, Forrest Iandola, Rakesh Ranjan, Yilei Li, Qiang Liu, Zhangyang Wang, Vikas Chandra

In this paper, we reveal that the gradient estimation in score distillation inherently suffers from high variance.
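As a generic illustration of Stein's identity used as a control variate (not SteinDreamer's estimator), consider estimating E[g(x)] for x ~ N(0, 1). Stein's identity gives E[x·φ(x) - φ'(x)] = 0 for smooth φ, so subtracting h(x) = x·φ(x) - φ'(x) leaves the mean unchanged while it can reduce variance. The choices g(x) = x² + x and φ(x) = x are hypothetical; with them h(x) = x² - 1, and the per-sample variance drops from 3 to 1 in theory.

```python
import random
import statistics


def stein_control_variate_demo(n=20000, seed=0):
    """Estimate E[g(x)], x ~ N(0, 1), with and without a Stein-identity control variate.

    g(x) = x**2 + x has true mean 1.
    With phi(x) = x, the control variate h(x) = x * phi(x) - phi'(x) = x**2 - 1
    has zero mean by Stein's identity, so g - h is an unbiased, lower-variance estimator.
    """
    rng = random.Random(seed)
    xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
    naive = [x * x + x for x in xs]
    corrected = [(x * x + x) - (x * x - 1.0) for x in xs]
    return (statistics.fmean(naive), statistics.variance(naive),
            statistics.fmean(corrected), statistics.variance(corrected))


mean_naive, var_naive, mean_cv, var_cv = stein_control_variate_demo()
```

Both estimators target the same mean; the corrected samples simply fluctuate less, which is the property a variance-reduced gradient estimator relies on.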

Taming Mode Collapse in Score Distillation for Text-to-3D Generation

no code implementations • 31 Dec 2023 • Peihao Wang, Dejia Xu, Zhiwen Fan, Dilin Wang, Sreyas Mohan, Forrest Iandola, Rakesh Ranjan, Yilei Li, Qiang Liu, Zhangyang Wang, Vikas Chandra

In this paper, we reveal that existing score distillation-based text-to-3D generation frameworks degenerate to maximum-likelihood seeking on each view independently and thus suffer from mode collapse, which manifests in practice as the Janus artifact.

Lift3D: Zero-Shot Lifting of Any 2D Vision Model to 3D

no code implementations • 27 Mar 2024 • Mukund Varma T, Peihao Wang, Zhiwen Fan, Zhangyang Wang, Hao Su, Ravi Ramamoorthi

In recent years, there has been an explosion of 2D vision models for numerous tasks such as semantic segmentation, style transfer or scene editing, enabled by large-scale 2D image datasets.

InstantSplat: Unbounded Sparse-view Pose-free Gaussian Splatting in 40 Seconds

no code implementations • 29 Mar 2024 • Zhiwen Fan, Wenyan Cong, Kairun Wen, Kevin Wang, Jian Zhang, Xinghao Ding, Danfei Xu, Boris Ivanovic, Marco Pavone, Georgios Pavlakos, Zhangyang Wang, Yue Wang

This pre-processing is usually conducted via a Structure-from-Motion (SfM) pipeline, a procedure that can be slow and unreliable, particularly in sparse-view scenarios with insufficient matched features for accurate reconstruction.

MM3DGS SLAM: Multi-modal 3D Gaussian Splatting for SLAM Using Vision, Depth, and Inertial Measurements

no code implementations • 1 Apr 2024 • Lisong C. Sun, Neel P. Bhatt, Jonathan C. Liu, Zhiwen Fan, Zhangyang Wang, Todd E. Humphreys, Ufuk Topcu

We show for the first time that using 3D Gaussians for map representation with unposed camera images and inertial measurements can enable accurate SLAM.

DreamScene360: Unconstrained Text-to-3D Scene Generation with Panoramic Gaussian Splatting

no code implementations • 10 Apr 2024 • Shijie Zhou, Zhiwen Fan, Dejia Xu, Haoran Chang, Pradyumna Chari, Tejas Bharadwaj, Suya You, Zhangyang Wang, Achuta Kadambi

This point cloud serves as the initial state for the centroids of 3D Gaussians.
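A minimal sketch of seeding Gaussian centroids from a point cloud. It assumes the isotropic-scale rule described in the original 3D Gaussian Splatting paper (scale equal to the mean distance to the three nearest points); whether DreamScene360 uses exactly this rule is an assumption, and opacity, color, and rotation initialization are omitted. The brute-force neighbour search is O(N²) and only suitable for small clouds.

```python
import math


def init_gaussians(points, k=3):
    """Hypothetical init: one isotropic Gaussian per point.

    mean  = the point itself (the centroid)
    scale = mean distance to its k nearest neighbours
    """
    if len(points) < 2:
        raise ValueError("need at least two points to estimate neighbour distances")
    gaussians = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        nearest = dists[:k]
        gaussians.append({"mean": tuple(p), "scale": sum(nearest) / len(nearest)})
    return gaussians


points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
gaussians = init_gaussians(points)
```

Tying the initial scale to local point density keeps Gaussians small where the cloud is dense and larger where it is sparse, so the initial splats roughly cover the surface without large gaps.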