Code Completion

63 papers with code • 4 benchmarks • 9 datasets

Libraries

Use these libraries to find Code Completion models and implementationsDatasets

Most implemented papers

CodeXGLUE: A Machine Learning Benchmark Dataset for Code Understanding and Generation

Benchmark datasets have a significant impact on accelerating research in programming language tasks.

Open Vocabulary Learning on Source Code with a Graph-Structured Cache

Machine learning models that take computer program source code as input typically use Natural Language Processing (NLP) techniques.

Structural Language Models of Code

We introduce a new approach to any-code completion that leverages the strict syntax of programming languages to model a code snippet as a tree - structural language modeling (SLM).

Neural Software Analysis

The resulting tools complement and outperform traditional program analyses, and are used in industrial practice.

UniXcoder: Unified Cross-Modal Pre-training for Code Representation

Furthermore, we propose to utilize multi-modal contents to learn representation of code fragment with contrastive learning, and then align representations among programming languages using a cross-modal generation task.

Multi-lingual Evaluation of Code Generation Models

Using these benchmarks, we are able to assess the performance of code generation models in a multi-lingual fashion, and discovered generalization ability of language models on out-of-domain languages, advantages of multi-lingual models over mono-lingual, the ability of few-shot prompting to teach the model new languages, and zero-shot translation abilities even on mono-lingual settings.

Not what you've signed up for: Compromising Real-World LLM-Integrated Applications with Indirect Prompt Injection

Large Language Models (LLMs) are increasingly being integrated into various applications.

CodeKGC: Code Language Model for Generative Knowledge Graph Construction

However, large generative language model trained on structured data such as code has demonstrated impressive capability in understanding natural language for structural prediction and reasoning tasks.

MPI-rical: Data-Driven MPI Distributed Parallelism Assistance with Transformers

Message Passing Interface (MPI) plays a crucial role in distributed memory parallelization across multiple nodes.

Scope is all you need: Transforming LLMs for HPC Code

With easier access to powerful compute resources, there is a growing trend in the field of AI for software development to develop larger and larger language models (LLMs) to address a variety of programming tasks.

CodeXGLUE

CodeXGLUE

PyTorrent

PyTorrent

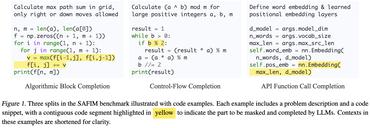

SAFIM

SAFIM