Image Generation

1982 papers with code • 85 benchmarks • 67 datasets

Image Generation (synthesis) is the task of generating new images that resemble those in an existing dataset.

- Unconditional generation refers to generating samples without conditioning on any input, i.e. sampling from $p(y)$, where $y$ denotes an image.

- Conditional image generation (a subtask) refers to generating samples conditioned on a label $x$, i.e. sampling from $p(y|x)$.
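To make the distinction between $p(y)$ and $p(y|x)$ concrete, here is a minimal sketch using a deliberately tiny, hypothetical model: each "image" is a short list of pixel values, and each class is modelled by an independent Gaussian per pixel. The function names (`fit_class_conditional`, `sample_conditional`, `sample_unconditional`) are illustrative only, not from any library. Real image generators (GANs, diffusion models, etc.) usually learn $p(y)$ directly without labels; here the unconditional sampler is built as the marginal $p(y) = \sum_x p(x)\,p(y|x)$ simply to show how the two sampling modes relate.

```python
import random
import statistics

def fit_class_conditional(images, labels):
    """Estimate class priors p(x) and a per-pixel Gaussian p(y | x).

    images: list of equal-length lists of floats (flattened toy "images")
    labels: list of class labels, one per image
    Returns (priors, params), where params[x] is a list of (mean, std) per pixel.
    """
    by_class = {}
    for img, lab in zip(images, labels):
        by_class.setdefault(lab, []).append(img)
    n = len(images)
    priors = {lab: len(imgs) / n for lab, imgs in by_class.items()}
    params = {}
    for lab, imgs in by_class.items():
        pixels = list(zip(*imgs))  # regroup values pixel by pixel
        # Small constant keeps std > 0 when all training values are identical.
        params[lab] = [(statistics.fmean(p), statistics.pstdev(p) + 1e-3) for p in pixels]
    return priors, params

def sample_conditional(params, label, rng):
    """Conditional generation: draw y ~ p(y | x = label)."""
    return [rng.gauss(mu, sigma) for mu, sigma in params[label]]

def sample_unconditional(priors, params, rng):
    """Unconditional generation: draw y ~ p(y) = sum_x p(x) p(y | x)
    by ancestral sampling (pick a class x ~ p(x), then y ~ p(y | x))."""
    labels = list(priors)
    label = rng.choices(labels, weights=[priors[lab] for lab in labels], k=1)[0]
    return sample_conditional(params, label, rng)

# Toy 4-pixel "images": "dark" images near 0.1, "bright" images near 0.9.
rng = random.Random(0)
images = [[0.1, 0.2, 0.1, 0.0], [0.0, 0.1, 0.2, 0.1],
          [0.9, 0.8, 1.0, 0.9], [1.0, 0.9, 0.8, 0.9]]
labels = ["dark", "dark", "bright", "bright"]
priors, params = fit_class_conditional(images, labels)
bright = sample_conditional(params, "bright", rng)    # asks for a specific class
anything = sample_unconditional(priors, params, rng)  # no label supplied
print(len(bright), len(anything))  # 4 4
```

The conditional sampler lets the caller choose which kind of image to produce; the unconditional one only reproduces the overall data distribution, so the class of each sample is left to chance.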

In this section, you can find state-of-the-art leaderboards for unconditional generation. For conditional generation and other types of image generation, refer to the subtasks.

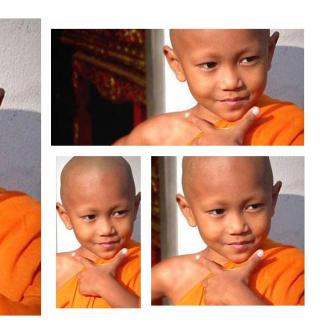

(Image credit: StyleGAN)


Subtasks

- Image-to-Image Translation
- Image Inpainting
- Text-to-Image Generation
- Conditional Image Generation
- Face Generation
- 3D Generation
- Image Harmonization
- Pose Transfer
- 3D-Aware Image Synthesis
- Facial Inpainting
- Layout-to-Image Generation
- ROI-based image generation
- Image Generation from Scene Graphs
- Pose-Guided Image Generation
- User Constrained Thumbnail Generation
- Handwritten Word Generation
- Chinese Landscape Painting Generation
- person reposing
- Infinite Image Generation
- Multi class one-shot image synthesis
- Single class few-shot image synthesis

Latest papers with no code

Generating Counterfactual Trajectories with Latent Diffusion Models for Concept Discovery

In the first step, CDCT uses a Latent Diffusion Model (LDM) to generate a counterfactual trajectory dataset.

OneActor: Consistent Character Generation via Cluster-Conditioned Guidance

Comprehensive experiments show that our method outperforms a variety of baselines with satisfactory character consistency, superior prompt conformity as well as high image quality.

Adversarial Identity Injection for Semantic Face Image Synthesis

Among all the explored techniques, Semantic Image Synthesis (SIS) methods, whose goal is to generate an image conditioned on a semantic segmentation mask, are the most promising, even though preserving the perceived identity of the input subject is not their main concern.

OmniSSR: Zero-shot Omnidirectional Image Super-Resolution using Stable Diffusion Model

Omnidirectional images (ODIs) are commonly used in real-world visual tasks, and high-resolution ODIs help improve the performance of related visual tasks.

Watermark-embedded Adversarial Examples for Copyright Protection against Diffusion Models

Diffusion Models (DMs) have shown remarkable capabilities in various image-generation tasks.

In-Context Translation: Towards Unifying Image Recognition, Processing, and Generation

Secondly, it standardizes the training of different tasks into a general in-context learning, where "in-context" means the input comprises an example input-output pair of the target task and a query image.

Zero-shot detection of buildings in mobile LiDAR using Language Vision Model

Moreover, constructing LVMs for point clouds is even more challenging due to the requirements for large amounts of data and training time.

Ctrl-Adapter: An Efficient and Versatile Framework for Adapting Diverse Controls to Any Diffusion Model

Ctrl-Adapter provides diverse capabilities including image control, video control, video control with sparse frames, multi-condition control, compatibility with different backbones, adaptation to unseen control conditions, and video editing.

EdgeRelight360: Text-Conditioned 360-Degree HDR Image Generation for Real-Time On-Device Video Portrait Relighting

In this paper, we present EdgeRelight360, an approach for real-time video portrait relighting on mobile devices, utilizing text-conditioned generation of 360-degree high dynamic range image (HDRI) maps.

Diffscaler: Enhancing the Generative Prowess of Diffusion Transformers

As these parameters are independent, a single diffusion model with these task-specific parameters can be used to perform multiple tasks simultaneously.

Datasets

- CIFAR-10
- ImageNet
- CIFAR-100
- MNIST
- Cityscapes
- CelebA
- Fashion-MNIST
- CUB-200-2011
- FFHQ
- Oxford 102 Flower