Topic coverage

5 papers with code • 3 benchmarks • 1 datasets

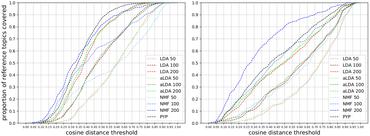

A prevalent use case of topic models is that of topic discovery. However, most of the topic model evaluation methods rely on abstract metrics such as perplexity or topic coherence. The topic coverage approach is to measure the models' performance by matching model-generated topics to a fixed set of reference topics - topics discovered by humans and represented in a machine-readable format. This way, the models are evaluated in the context of their use, by essentially simulating topic modeling in a fixed setting defined by a text collection and a set of reference topics. Reference topics represent a ground truth that can be used to evaluate both topic models and other measures of model performance. This coverage approach enables large-scale automatic evaluation of existing and future topic models.

Most implemented papers

A Topic Coverage Approach to Evaluation of Topic Models

When topic models are used for discovery of topics in text collections, a question that arises naturally is how well the model-induced topics correspond to topics of interest to the analyst.

Unsupervised Summarization for Chat Logs with Topic-Oriented Ranking and Context-Aware Auto-Encoders

Automatic chat summarization can help people quickly grasp important information from numerous chat messages.

AugESC: Dialogue Augmentation with Large Language Models for Emotional Support Conversation

Applying this approach, we construct AugESC, an augmented dataset for the ESC task, which largely extends the scale and topic coverage of the crowdsourced ESConv corpus.

MUG: A General Meeting Understanding and Generation Benchmark

To prompt SLP advancement, we establish a large-scale general Meeting Understanding and Generation Benchmark (MUG) to benchmark the performance of a wide range of SLP tasks, including topic segmentation, topic-level and session-level extractive summarization and topic title generation, keyphrase extraction, and action item detection.

ReGen: Zero-Shot Text Classification via Training Data Generation with Progressive Dense Retrieval

With the development of large language models (LLMs), zero-shot learning has attracted much attention for various NLP tasks.

Topic modeling topic coverage dataset

Topic modeling topic coverage dataset