Clustering

Supporting Clustering with Contrastive Learning

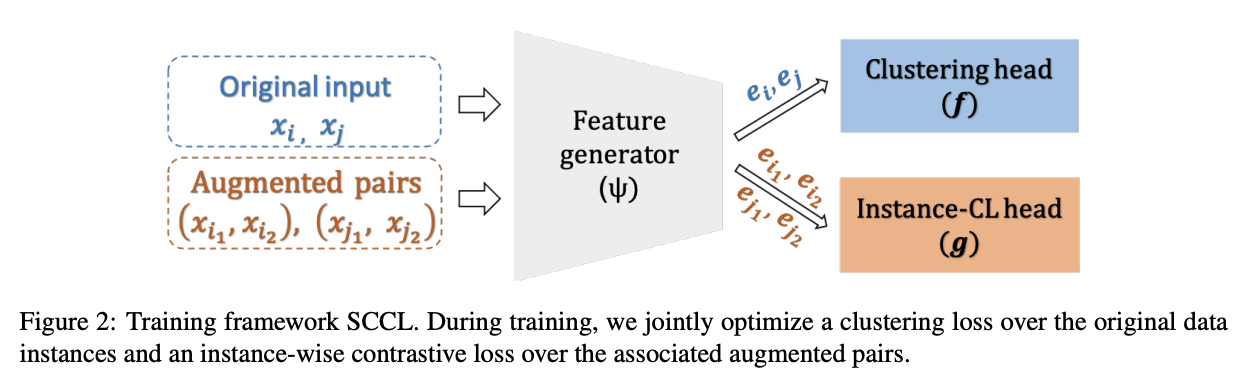

Introduced by Zhang et al. in Supporting Clustering with Contrastive Learning.

SCCL, or Supporting Clustering with Contrastive Learning, is a framework that leverages contrastive learning to promote better separation in unsupervised clustering. It combines top-down clustering with bottom-up, instance-wise contrastive learning to increase inter-cluster distances and decrease intra-cluster distances. During training, SCCL jointly optimizes a clustering loss over the original data instances and an instance-wise contrastive loss over the associated augmented pairs.
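The joint objective described above can be sketched in plain Python. This is a minimal, non-authoritative sketch under stated assumptions: the clustering loss is taken to be a DEC-style KL divergence between Student's t soft assignments Q and a sharpened target P, the instance-wise contrastive loss is taken to be NT-Xent (InfoNCE) over the augmented pairs, and the weight `eta`, the `temperature`, and all function names are hypothetical. It only evaluates the losses on fixed embeddings; an actual training loop would compute them from an encoder's outputs and backpropagate with an autodiff framework.

```python
import math

# Hypothetical sketch: the loss choices (DEC-style KL, NT-Xent), function names,
# and hyperparameters (eta, temperature, alpha) are illustrative assumptions.


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cosine_similarity(a, b):
    """Cosine similarity; assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def soft_assignments(embeddings, centroids, alpha=1.0):
    """Student's t soft assignment q[j][k] of instance j to cluster k (assumed, DEC-style)."""
    q = []
    for e in embeddings:
        weights = [
            (1.0 + squared_distance(e, mu) / alpha) ** (-(alpha + 1.0) / 2.0)
            for mu in centroids
        ]
        total = sum(weights)
        q.append([w / total for w in weights])
    return q


def target_distribution(q):
    """Sharpened target p[j][k] proportional to q[j][k]**2 / (soft size of cluster k)."""
    num_clusters = len(q[0])
    cluster_size = [sum(row[k] for row in q) for k in range(num_clusters)]
    p = []
    for row in q:
        weights = [row[k] ** 2 / cluster_size[k] for k in range(num_clusters)]
        total = sum(weights)
        p.append([w / total for w in weights])
    return p


def clustering_loss(embeddings, centroids):
    """Mean KL(P || Q) over the ORIGINAL instances (P would be held fixed in training)."""
    q = soft_assignments(embeddings, centroids)
    p = target_distribution(q)
    kl = 0.0
    for p_row, q_row in zip(p, q):
        kl += sum(pk * math.log(pk / qk) for pk, qk in zip(p_row, q_row))
    return kl / len(embeddings)


def contrastive_loss(view_a, view_b, temperature=0.5):
    """NT-Xent (InfoNCE) over the 2M augmented samples.

    view_a[i] and view_b[i] are two augmentations of instance i (a positive pair);
    every other sample in the batch serves as a negative.
    """
    z = view_a + view_b  # list concatenation: 2M vectors
    m = len(view_a)
    total = 0.0
    for i in range(2 * m):
        positive = (i + m) % (2 * m)  # index of i's augmented partner
        sims = [math.exp(cosine_similarity(z[i], z[j]) / temperature) for j in range(2 * m)]
        denominator = sum(s for j, s in enumerate(sims) if j != i)
        total += -math.log(sims[positive] / denominator)
    return total / (2 * m)


def joint_loss(originals, view_a, view_b, centroids, eta=1.0, temperature=0.5):
    """Contrastive loss on augmented pairs plus eta-weighted clustering loss on originals."""
    return contrastive_loss(view_a, view_b, temperature) + eta * clustering_loss(originals, centroids)


if __name__ == "__main__":
    # Toy 2-D embeddings forming two well-separated groups (illustrative data only).
    originals = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
    view_a = [[1.0, 0.05], [0.95, 0.1], [0.05, 1.0], [0.1, 0.95]]
    view_b = [[0.95, 0.0], [0.9, 0.15], [0.0, 0.95], [0.15, 0.9]]
    centroids = [[1.0, 0.0], [0.0, 1.0]]
    print(joint_loss(originals, view_a, view_b, centroids))
```

Keeping the two terms separate mirrors the description: the clustering term sees only the original instances, while the contrastive term sees only the augmented pairs, and `eta` trades one off against the other.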

Source: Supporting Clustering with Contrastive Learning

Papers

| Paper | Code | Results | Date | Stars |
|---|---|---|---|---|

Tasks

| Task | Papers | Share |
|---|---|---|
| Classification | 1 | 12.50% |
| Continual Learning | 1 | 12.50% |
| Emotion Recognition | 1 | 12.50% |
| Language Modelling | 1 | 12.50% |
| Sentiment Analysis | 1 | 12.50% |
| Clustering | 1 | 12.50% |
| Short Text Clustering | 1 | 12.50% |
| Text Clustering | 1 | 12.50% |

Components

| Component | Type |
|---|---|
| No components found | |