A Spectral Approach to Gradient Estimation for Implicit Distributions

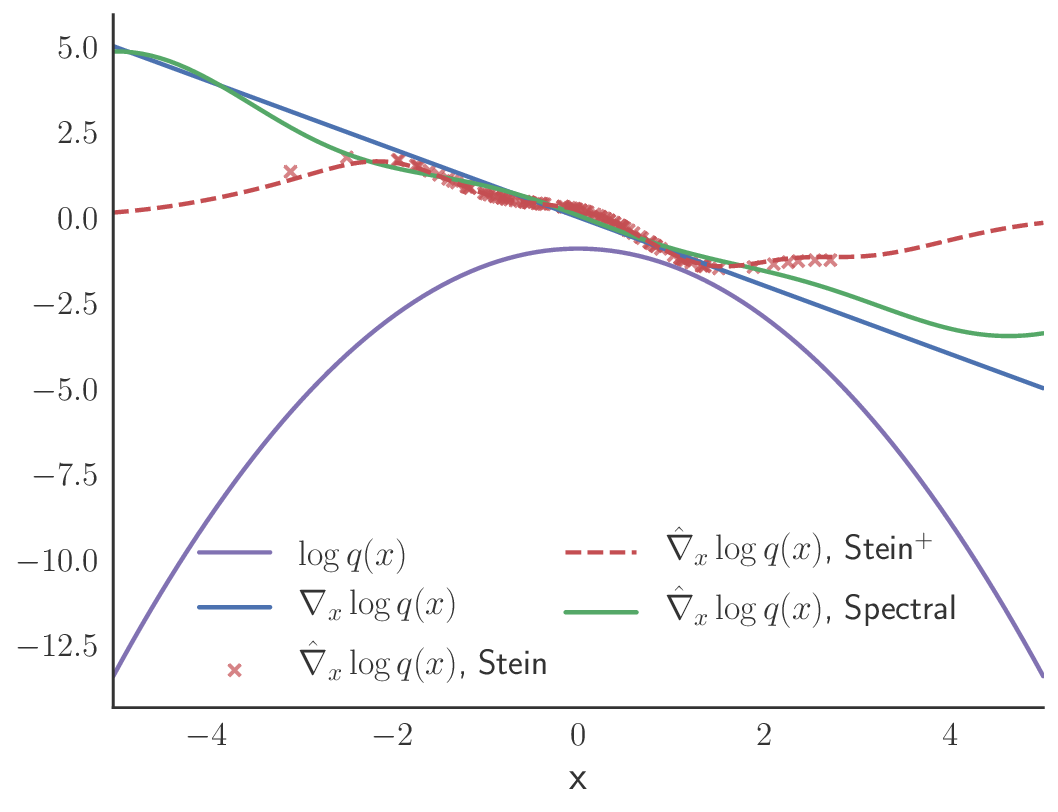

Recently there has been increasing interest in learning and inference with implicit distributions (i.e., distributions without tractable densities). To this end, we develop a gradient estimator for implicit distributions based on Stein's identity and a spectral decomposition of kernel operators, where the eigenfunctions are approximated by the Nyström method. Unlike previous works, which only provide estimates at the sample points, our approach directly estimates the gradient function and thus allows for a simple and principled out-of-sample extension. We provide theoretical results on the error bound of the estimator and discuss the bias-variance tradeoff in practice. The effectiveness of our method is demonstrated by applications to gradient-free Hamiltonian Monte Carlo and variational inference with implicit distributions. Finally, we discuss the intuition behind the estimator by drawing connections between the Nyström method and kernel PCA, which indicate that the estimator can automatically adapt to the geometry of the underlying distribution.
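To make the construction concrete, the following is a minimal NumPy sketch of such an estimator, written from the description above rather than taken from the authors' code. It assumes an RBF kernel with a median-heuristic bandwidth, and the names `rbf_kernel`, `spectral_score_estimator`, `n_eigen`, and `sigma` are illustrative choices, not part of the paper. The eigenfunctions are approximated with the Nyström formula $\hat\psi_j(x) = \frac{\sqrt{M}}{\lambda_j} \sum_m u_{mj}\, k(x, x^m)$, and Stein's identity turns the expansion coefficients of $\nabla \log q$ into sample averages, $\hat\beta_j = -\frac{1}{M} \sum_m \nabla \hat\psi_j(x^m)$.

```python
import numpy as np


def rbf_kernel(x, y, sigma):
    """RBF Gram matrix between rows of x (N, d) and y (M, d).

    Returns the kernel values (N, M) and the pairwise differences (N, M, d).
    """
    diff = x[:, None, :] - y[None, :, :]
    sq_dist = np.sum(diff ** 2, axis=-1)
    return np.exp(-sq_dist / (2.0 * sigma ** 2)), diff


def spectral_score_estimator(samples, n_eigen=6, sigma=None):
    """Return a function estimating grad log q(x) from samples of q.

    Assumptions of this sketch: RBF kernel, median-heuristic bandwidth,
    and a fixed number `n_eigen` of retained eigenfunctions.
    """
    M, _ = samples.shape
    if sigma is None:
        # Median heuristic: median pairwise distance between samples.
        dists = np.sqrt(np.sum((samples[:, None] - samples[None]) ** 2, axis=-1))
        sigma = np.median(dists[np.triu_indices(M, k=1)])

    K, diff = rbf_kernel(samples, samples, sigma)

    # eigh returns eigenvalues in ascending order; keep the largest n_eigen.
    eigvals, eigvecs = np.linalg.eigh(K)
    eigvals = eigvals[::-1][:n_eigen]          # (J,)
    eigvecs = eigvecs[:, ::-1][:, :n_eigen]    # (M, J)

    # Gradient of k(x, x^m) with respect to x, evaluated at each sample x^n.
    grad_K = -diff / sigma ** 2 * K[:, :, None]            # (M, M, d)

    # Nystrom eigenfunction gradients at the samples.
    grad_psi = np.sqrt(M) * np.einsum("nmd,mj->njd", grad_K, eigvecs)
    grad_psi = grad_psi / eigvals[None, :, None]           # (M, J, d)

    # Stein's identity: beta_j = -E_q[grad psi_j(x)], estimated by a sample mean.
    beta = -grad_psi.mean(axis=0)                          # (J, d)

    def score(x):
        """Estimated grad log q at arbitrary points x of shape (N, d)."""
        Kx, _ = rbf_kernel(x, samples, sigma)              # (N, M)
        psi = np.sqrt(M) * (Kx @ eigvecs) / eigvals        # (N, J)
        return psi @ beta                                  # (N, d)

    return score


# Usage: samples from a standard normal, whose true score is -x.
rng = np.random.default_rng(0)
samples = rng.standard_normal((500, 1))
score = spectral_score_estimator(samples, n_eigen=6)
grid = np.linspace(-1.0, 1.0, 21)[:, None]
estimate = score(grid)  # evaluated off the sample points
```

Because `score` is an ordinary function of `x`, it can be evaluated at points that are not in the sample set, which is the out-of-sample extension mentioned above. The number of retained eigenfunctions controls the bias-variance tradeoff in this sketch: too few truncates the expansion, while too many divides by small, noisy eigenvalues.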

ICML 2018