Are Large Language Models Post Hoc Explainers?

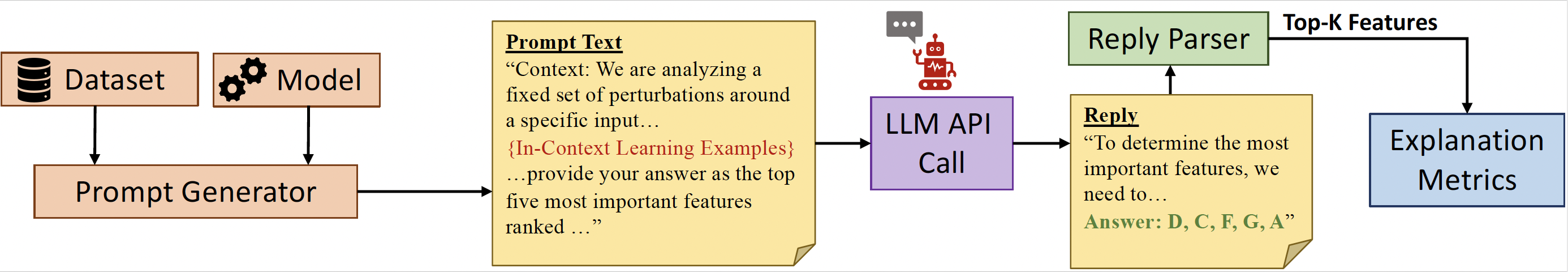

The increasing use of predictive models in high-stakes settings highlights the need for relevant stakeholders to understand and trust the decisions these models make. To this end, recent literature has proposed several approaches for explaining the behavior of complex predictive models in a post hoc fashion. However, despite the growing number of such post hoc explanation techniques, many require white-box access to the model and/or are computationally expensive, which motivates the search for next-generation post hoc explainers. Recently, Large Language Models (LLMs) have emerged as powerful tools that are effective at a wide variety of tasks, yet their potential to explain the behavior of other complex predictive models remains relatively unexplored. In this work, we present one of the first analyses of how effectively LLMs can explain other complex predictive models. We propose three novel approaches that exploit the in-context learning (ICL) capabilities of LLMs to explain the predictions made by other complex models. Extensive experiments with these approaches on real-world datasets show that LLMs perform on par with state-of-the-art post hoc explainers, opening up promising avenues for future research into LLM-based post hoc explanations of complex predictive models.
