Camouflaged Object Detection via Context-aware Cross-level Fusion

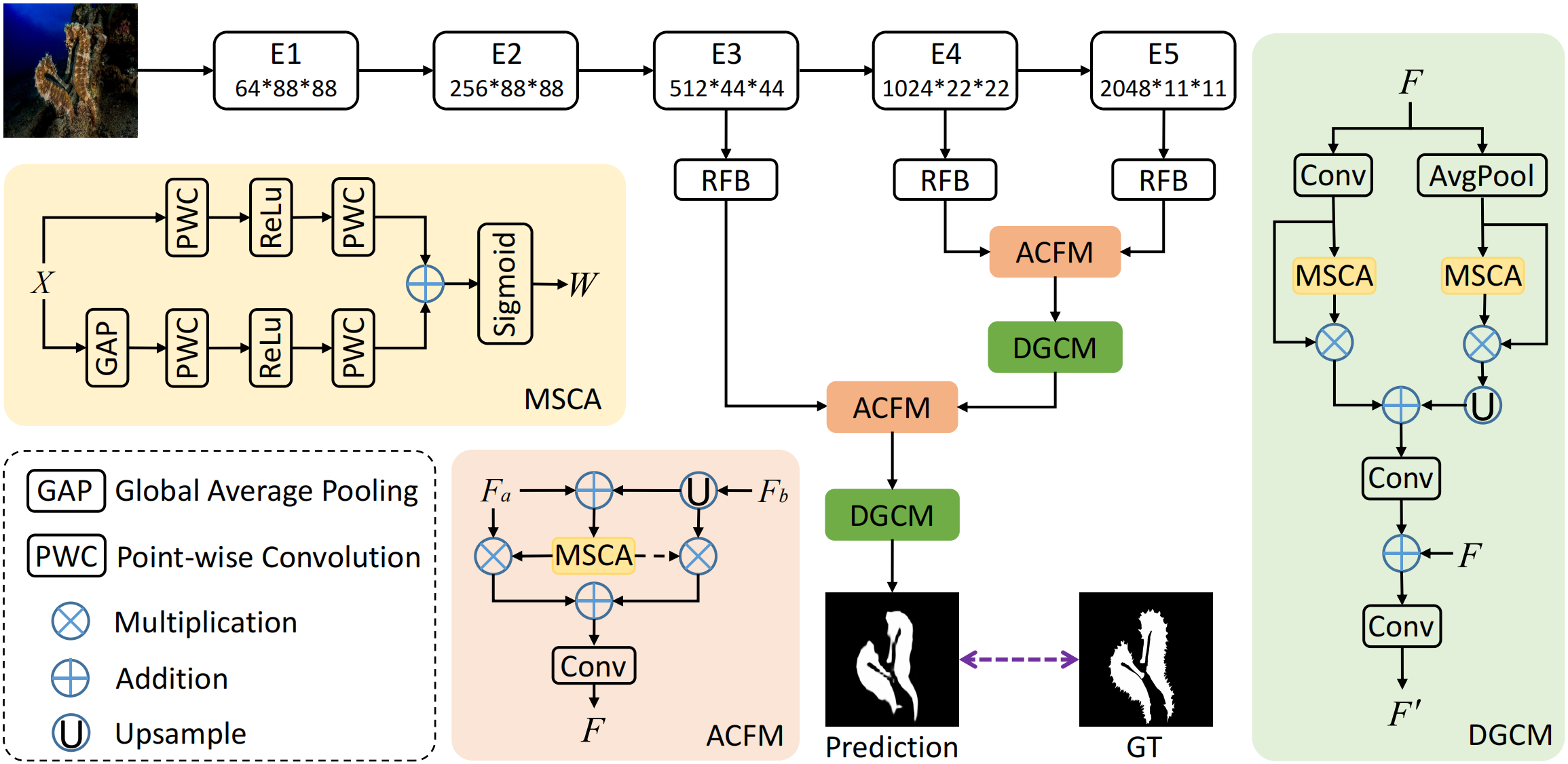

Camouflaged object detection (COD) aims to identify objects that conceal themselves in natural scenes. Accurate COD is challenging because of low boundary contrast and large variation in object appearance, e.g., object size and shape. To address these challenges, we propose a novel Context-aware Cross-level Fusion Network (C2F-Net), which fuses context-aware cross-level features to accurately identify camouflaged objects. Specifically, our Attention-induced Cross-level Fusion Module (ACFM) computes informative attention coefficients from multi-level features and then integrates those features under the guidance of the coefficients. We then propose a Dual-branch Global Context Module (DGCM) that refines the fused features into informative representations by exploiting rich global context information. Multiple ACFMs and DGCMs are integrated in a cascaded manner to generate a coarse prediction from high-level features. The coarse prediction acts as an attention map that refines the low-level features before they are passed to our Camouflage Inference Module (CIM), which generates the final prediction. We perform extensive experiments on three widely used benchmark datasets and compare C2F-Net with state-of-the-art (SOTA) models. The results show that C2F-Net is an effective COD model and markedly outperforms SOTA models. Further, an evaluation on polyp segmentation datasets demonstrates the promising potential of C2F-Net in downstream COD applications. Our code is publicly available at: https://github.com/Ben57882/C2FNet-TSCVT.
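
To make the two attention steps in the abstract more concrete, the following is a minimal pure-Python sketch and not the authors' implementation. It assumes feature maps are stored as lists of channels, each a flat list of spatial values. The function names `attention_fuse` and `refine_with_coarse`, the mean-plus-sigmoid way of computing coefficients, and the convex-combination fusion rule are all illustrative assumptions; the abstract does not specify how ACFM computes its coefficients, and the repository linked above should be consulted for the real modules.

```python
import math


def sigmoid(v):
    """Logistic function mapping a real value to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))


def attention_fuse(high, low):
    """Fuse two same-shaped feature maps with per-channel attention.

    ASSUMPTION: the coefficient for each channel is the sigmoid of the
    mean of the summed features. This only stands in for ACFM's
    attention and is not taken from the paper.
    """
    fused = []
    for h_ch, l_ch in zip(high, low):
        summed = [h + l for h, l in zip(h_ch, l_ch)]
        alpha = sigmoid(sum(summed) / len(summed))  # coefficient in (0, 1)
        # Convex combination of the two levels, guided by the coefficient.
        fused.append([alpha * h + (1.0 - alpha) * l for h, l in zip(h_ch, l_ch)])
    return fused


def refine_with_coarse(low, coarse_logits):
    """Use a coarse prediction as a spatial attention map.

    ASSUMPTION: the coarse logits are squashed with a sigmoid and
    multiplied into every channel of the low-level features.
    """
    gate = [sigmoid(c) for c in coarse_logits]
    return [[g * v for g, v in zip(gate, ch)] for ch in low]


if __name__ == "__main__":
    high = [[2.0, 4.0]]   # one channel, two spatial positions
    low = [[0.0, 0.0]]
    print(attention_fuse(high, low))
    print(refine_with_coarse([[2.0, 4.0]], [0.0, 0.0]))
```

In this sketch the fused value at each position always lies between the two input values, and a coarse logit of zero halves the corresponding low-level response. Both behaviours follow from the assumed sigmoid gating rather than from anything stated in the abstract.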

COD10K

CAMO