CoCoNet: Coupled Contrastive Learning Network with Multi-level Feature Ensemble for Multi-modality Image Fusion

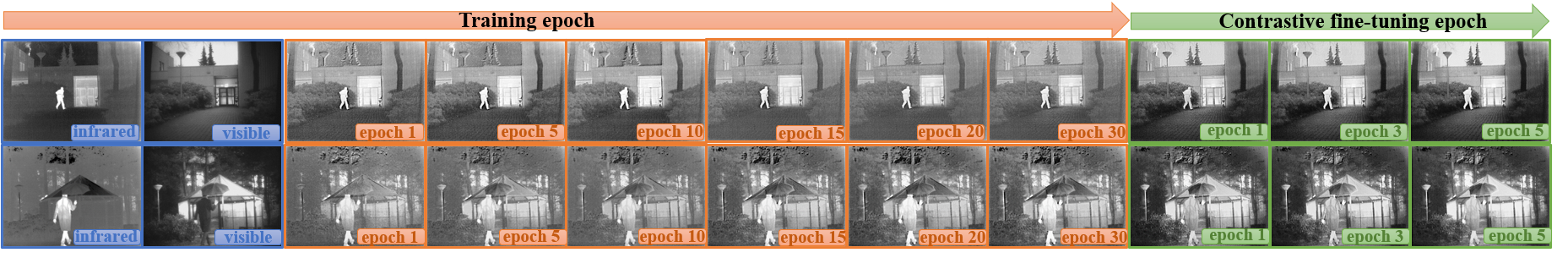

Infrared and visible image fusion aims to produce an informative image by combining complementary information from different sensors. Existing learning-based fusion approaches construct various loss functions to preserve complementary features but neglect the inter-relationship between the two modalities, which leads to redundant or even invalid information in the fused results. Moreover, most methods strengthen the network by increasing its depth while neglecting the importance of feature transmission, which causes vital information to degrade. To alleviate these issues, we propose a coupled contrastive learning network, dubbed CoCoNet, that realizes infrared and visible image fusion in an end-to-end manner. Concretely, to retain typical features from both modalities while avoiding artifacts in the fused result, we develop a coupled contrastive constraint in our loss function. In the representation space, the foreground target of a fused image is pulled close to the infrared source and pushed away from the visible source, while its background detail is pulled close to the visible source and pushed away from the infrared source. We further exploit image characteristics to provide data-sensitive weights, allowing our loss function to build a more reliable relationship with the source images. A multi-level attention module is established to learn rich hierarchical feature representations and to transfer features comprehensively during fusion. We also apply the proposed CoCoNet to medical image fusion of different types, e.g., magnetic resonance, positron emission tomography, and single photon emission computed tomography images. Extensive experiments demonstrate that our method achieves state-of-the-art (SOTA) performance under both subjective and objective evaluation, especially in preserving prominent targets and recovering vital textural details.
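To make the pull/push behavior of the coupled contrastive constraint concrete, the following is a minimal sketch and not the authors' implementation. It assumes a binary foreground mask `mask` is available, uses a mean absolute difference as the distance, and compares raw pixel values instead of a learned representation space; the function names, the ratio form of each term, and the `eps` stabilizer are all hypothetical choices made for illustration. The data-sensitive weights mentioned in the abstract are omitted.

```python
import numpy as np


def l1_distance(a, b):
    """Mean absolute difference between two arrays (assumed distance)."""
    return float(np.mean(np.abs(a - b)))


def coupled_contrastive_loss(fused, infrared, visible, mask, eps=1e-8):
    """Illustrative coupled contrastive constraint (hypothetical form).

    fused, infrared, visible: float arrays of the same shape.
    mask: binary array, 1 on the foreground target, 0 on the background.
    """
    fg = mask
    bg = 1.0 - mask

    # Target term: the fused foreground should be close to the infrared
    # foreground (positive) and far from the visible foreground (negative).
    target_term = l1_distance(fused * fg, infrared * fg) / (
        l1_distance(fused * fg, visible * fg) + eps
    )

    # Detail term: the fused background should be close to the visible
    # background (positive) and far from the infrared background (negative).
    detail_term = l1_distance(fused * bg, visible * bg) / (
        l1_distance(fused * bg, infrared * bg) + eps
    )

    return target_term + detail_term


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    infrared = rng.random((8, 8))
    visible = rng.random((8, 8))
    mask = np.zeros((8, 8))
    mask[2:6, 2:6] = 1.0  # hypothetical foreground region

    # Fused image that takes the target from infrared and details from visible.
    good = mask * infrared + (1.0 - mask) * visible
    # Fused image with the roles swapped.
    bad = mask * visible + (1.0 - mask) * infrared

    print("good:", coupled_contrastive_loss(good, infrared, visible, mask))
    print("bad: ", coupled_contrastive_loss(bad, infrared, visible, mask))
```

Under these assumptions, a fused image that copies the infrared foreground and the visible background drives both numerators to zero, whereas swapping the roles drives the denominators toward zero and makes the loss very large.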
