Convolutional Initialization for Data-Efficient Vision Transformers

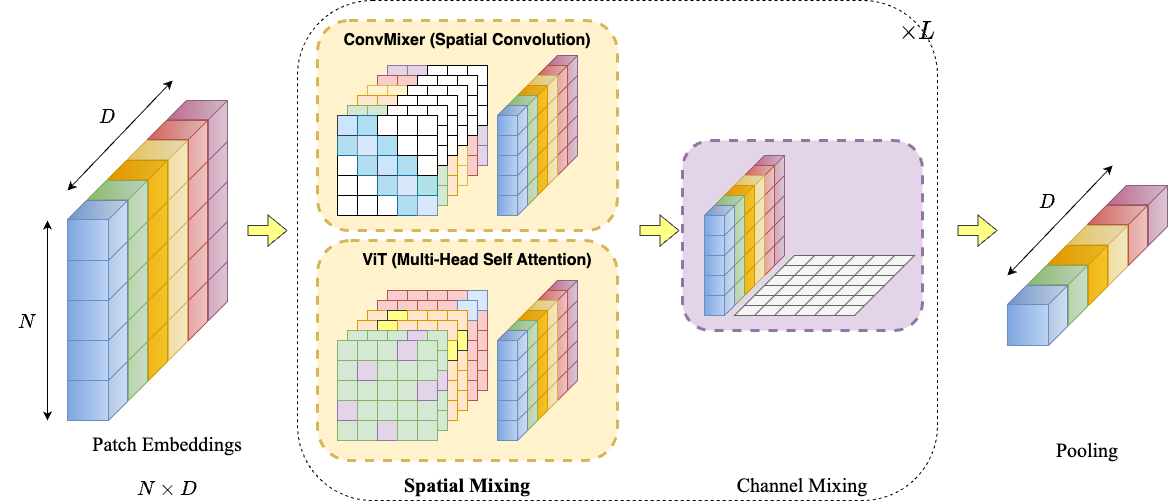

Training vision transformer networks on small datasets is challenging. In contrast, convolutional neural networks (CNNs) can achieve state-of-the-art performance by leveraging their architectural inductive bias. In this paper, we investigate whether this inductive bias can be reinterpreted as an initialization bias within a vision transformer network. Our approach is motivated by the finding that random impulse filters can achieve performance nearly comparable to that of learned filters in CNNs. We introduce a novel initialization strategy for transformer networks that achieves performance comparable to CNNs on small datasets while preserving the architectural flexibility of transformers.
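To make the term "random impulse filter" concrete, here is a minimal sketch. It assumes an impulse filter is a k x k kernel that is zero everywhere except for a single 1 at a randomly chosen position; that definition, the helper names `random_impulse_filter` and `correlate2d_valid`, and the toy 5 x 5 input are illustrative assumptions, not the paper's implementation.

```python
import random


def random_impulse_filter(k, rng):
    """Return a k x k kernel with a single 1 at a uniformly random position.

    Assumed definition of an impulse filter, used for illustration only.
    """
    row, col = rng.randrange(k), rng.randrange(k)
    return [[1.0 if (i, j) == (row, col) else 0.0 for j in range(k)]
            for i in range(k)]


def correlate2d_valid(image, kernel):
    """Plain 'valid' cross-correlation of a 2D list with a square kernel."""
    k = len(kernel)
    h, w = len(image), len(image[0])
    return [[sum(kernel[a][b] * image[i + a][j + b]
                 for a in range(k) for b in range(k))
             for j in range(w - k + 1)]
            for i in range(h - k + 1)]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
kernel = random_impulse_filter(3, rng)

# Toy 5 x 5 "image" whose entries encode their own position.
image = [[float(5 * i + j) for j in range(5)] for i in range(5)]

# Filtering with an impulse kernel only shifts the input: the output is the
# 3 x 3 crop of the image whose offset equals the position of the 1.
shifted = correlate2d_valid(image, kernel)
```

Under this assumption, such a filter has no learned weights and acts as a fixed spatial shift of its input, which is one way to read the abstract's claim that the useful bias may lie in the spatial structure rather than in the learned filter values.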


CIFAR-10

CIFAR-100

SVHN