Latent Code Augmentation Based on Stable Diffusion for Data-free Substitute Attacks

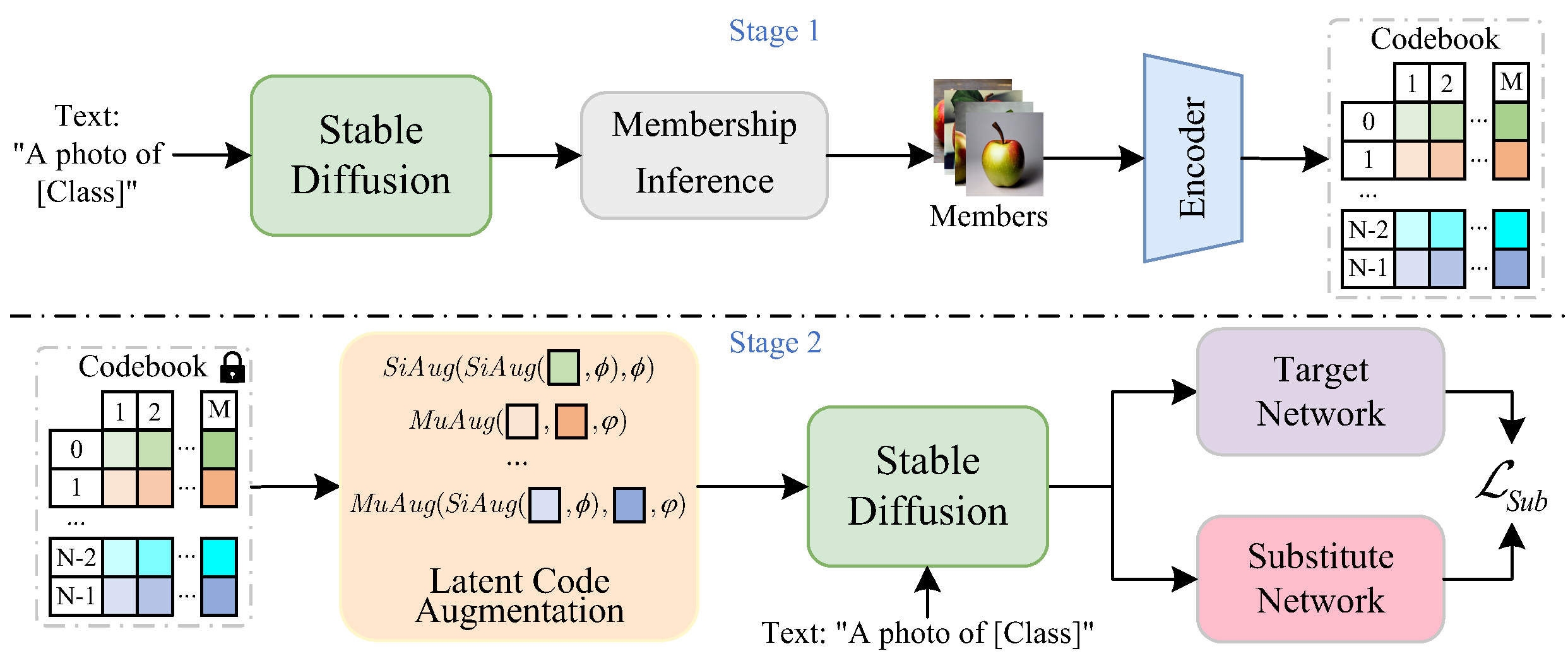

Since the training data of the target model is not available in black-box substitute attacks, most recent schemes use GANs to generate data for training the substitute model. However, these GAN-based schemes suffer from low training efficiency, because the generator must be retrained for each target model during substitute training, and from low generation quality. To overcome these limitations, we use a diffusion model to generate data and propose a novel data-free substitute attack scheme based on Stable Diffusion (SD) that improves the efficiency and accuracy of substitute training. Although the data generated by SD is of high quality, its domain distribution differs from that of the target model's data, and it shows a large variation between positive and negative samples for the target model. To address this problem, we propose Latent Code Augmentation (LCA), which helps SD generate data that aligns with the data distribution of the target model. Specifically, we augment the latent codes of the inferred member data with LCA and use them as guidance for SD. With this guidance, the data generated by SD not only meets the discriminative criteria of the target model but also exhibits high diversity. Using this data, a substitute model that closely resembles the target model can be trained more efficiently. Extensive experiments demonstrate that LCA achieves higher attack success rates and requires smaller query budgets than GAN-based schemes across different target models. Our code is available at https://github.com/LzhMeng/LCA.
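As a rough illustration of what augmenting latent codes could look like, the sketch below mixes pairs of latent codes and adds small Gaussian noise. The abstract does not say which augmentation operations LCA uses, so the function name `augment_latents`, the mixing scheme, the `noise_std` parameter, and the toy latent shape are all assumptions made for illustration, not the authors' method.

```python
import numpy as np

def augment_latents(latents, n_aug, noise_std=0.1, seed=0):
    """Return n_aug augmented latent codes built from `latents`.

    latents: array of shape (N, ...) holding latent codes of samples
    inferred to be member data. Each output is a convex mix of two
    randomly chosen inputs plus Gaussian noise. These operations are
    illustrative assumptions, not taken from the paper.
    """
    rng = np.random.default_rng(seed)
    latents = np.asarray(latents, dtype=np.float32)
    n = latents.shape[0]
    # Pick two source latents (with replacement) for every output.
    i = rng.integers(0, n, size=n_aug)
    j = rng.integers(0, n, size=n_aug)
    # One mixing weight per output, broadcast over the latent dimensions.
    lam_shape = (n_aug,) + (1,) * (latents.ndim - 1)
    lam = rng.uniform(0.0, 1.0, size=lam_shape).astype(np.float32)
    mixed = lam * latents[i] + (1.0 - lam) * latents[j]
    noise = rng.normal(0.0, noise_std, size=mixed.shape).astype(np.float32)
    return mixed + noise

# Toy usage with random stand-ins for encoded member samples
# (the 4x8x8 shape is hypothetical).
member_latents = np.random.default_rng(1).normal(size=(8, 4, 8, 8))
augmented = augment_latents(member_latents, n_aug=16)
```

In a full pipeline, the augmented codes would then be passed to the diffusion model as guidance; that step depends on the specific SD interface and is left out here.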

CIFAR-10

CIFAR-100

STL-10
