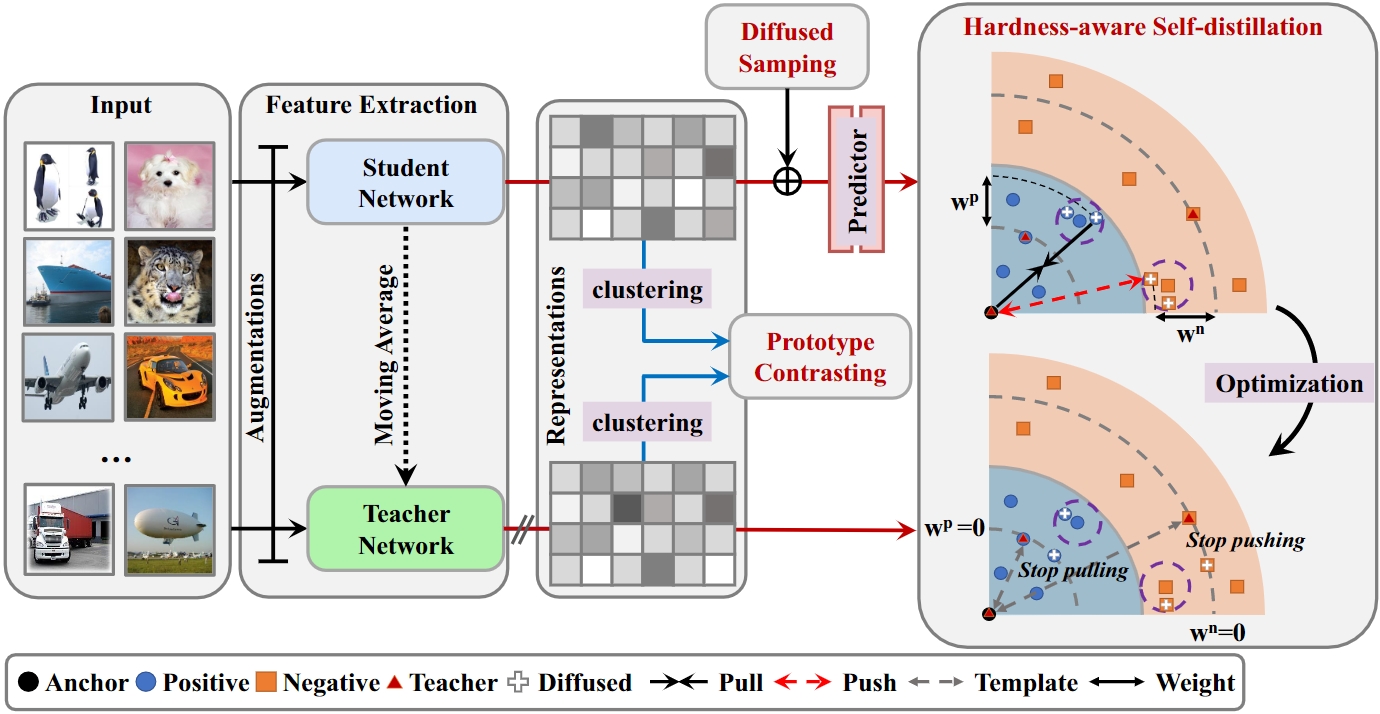

Deep Clustering with Diffused Sampling and Hardness-aware Self-distillation

Deep clustering has gained significant attention for its ability to learn clustering-friendly representations without labeled data. However, previous deep clustering methods tend to treat all samples equally, neglecting the variance in the latent distribution and the varying difficulty of classifying or clustering different samples. To address this, this paper proposes a novel end-to-end deep clustering method with diffused sampling and hardness-aware self-distillation (HaDis). Specifically, we first align one view of instances with another view via diffused sampling alignment (DSA), which helps improve intra-cluster compactness. To alleviate sampling bias, we present a hardness-aware self-distillation (HSD) mechanism that mines the hardest positive and negative samples and adaptively adjusts their weights in a self-distillation fashion, which addresses the potential imbalance in sample contributions during optimization. Further, prototypical contrastive learning is incorporated to simultaneously enhance inter-cluster separability and intra-cluster compactness. Experimental results on five challenging image datasets demonstrate the superior clustering performance of HaDis over state-of-the-art methods. Source code is available at https://github.com/Regan-Zhang/HaDis.
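As a rough illustration of what "hardest positive and negative samples" can mean, the sketch below uses the common generic definition: for each anchor, the hardest positive is the least similar sample with the same pseudo-label, and the hardest negative is the most similar sample with a different pseudo-label. This is an assumption for illustration only, not the HSD mechanism itself; the adaptive weighting and self-distillation steps are not shown, and the names `cosine`, `mine_hardest`, `embeddings`, and `pseudo_labels` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def mine_hardest(embeddings, pseudo_labels):
    """For each anchor, return (hardest_positive, hardest_negative) indices.

    Hardest positive: same pseudo-label, lowest similarity to the anchor.
    Hardest negative: different pseudo-label, highest similarity to the anchor.
    An entry is None when the batch contains no such sample.
    """
    n = len(embeddings)
    result = []
    for i in range(n):
        pos, neg = None, None
        pos_sim, neg_sim = math.inf, -math.inf
        for j in range(n):
            if j == i:
                continue  # an anchor is never its own positive or negative
            s = cosine(embeddings[i], embeddings[j])
            if pseudo_labels[j] == pseudo_labels[i]:
                if s < pos_sim:
                    pos, pos_sim = j, s
            elif s > neg_sim:
                neg, neg_sim = j, s
        result.append((pos, neg))
    return result


# Toy batch: made-up 2-D embeddings with made-up pseudo-labels (assumptions).
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.6, 0.8], [0.0, 1.0], [0.7, 0.7]]
pseudo_labels = [0, 0, 0, 1, 1]
pairs = mine_hardest(embeddings, pseudo_labels)
```

In this toy batch, sample 2 carries pseudo-label 0 but lies close to the samples labeled 1, so it is selected as the hardest positive for anchors 0 and 1 and as the hardest negative for anchors 3 and 4. Borderline samples of this kind are the ones a hardness-aware scheme would be expected to emphasize.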


CIFAR-10


CIFAR-100


STL-10
