Discretization Invariant Networks for Learning Maps between Neural Fields

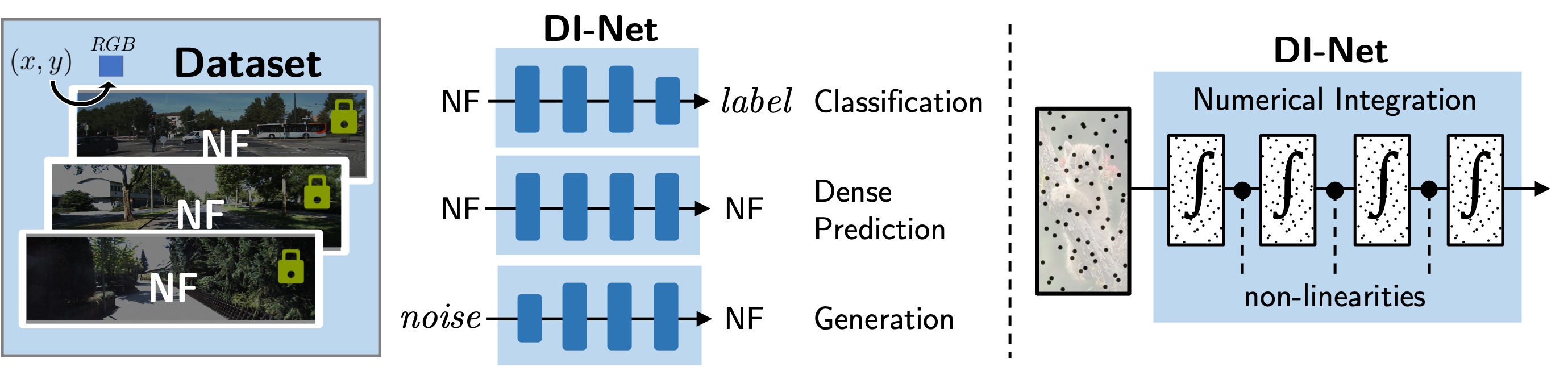

With the emergence of powerful representations of continuous data in the form of neural fields, there is a need for discretization invariant learning: an approach for learning maps between functions on continuous domains without being sensitive to how the function is sampled. We present a new framework for understanding and designing discretization invariant neural networks (DI-Nets), which generalizes many discrete networks such as convolutional neural networks as well as continuous networks such as neural operators. Our analysis establishes upper bounds on the deviation in model outputs under different finite discretizations, and highlights the central role of point set discrepancy in characterizing such bounds. This insight leads to the design of a family of neural networks driven by numerical integration via quasi-Monte Carlo sampling with discretizations of low discrepancy. We prove by construction that DI-Nets universally approximate a large class of maps between integrable function spaces, and show that discretization invariance also describes backpropagation through such models. Applied to neural fields, convolutional DI-Nets can learn to classify and segment visual data under various discretizations, and sometimes generalize to new types of discretizations at test time. Code: https://github.com/clintonjwang/DI-net.
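The idea of driving a layer by numerical integration over a low-discrepancy point set can be illustrated with a minimal sketch. This is not the authors' implementation: the `field` function is a hypothetical stand-in for a neural field, `kernel` is an arbitrary fixed weighting function, and the Halton sequence is just one common choice of low-discrepancy discretization. The sketch estimates the same integral from a coarse and a fine discretization and measures how far the two outputs deviate.

```python
import numpy as np

def van_der_corput(n, base):
    """First n points of the van der Corput sequence in the given base."""
    points = np.zeros(n)
    for i in range(n):
        k, denom, x = i + 1, 1.0, 0.0
        while k > 0:
            denom *= base
            k, rem = divmod(k, base)
            x += rem / denom
        points[i] = x
    return points

def halton_2d(n):
    """First n points of the 2D Halton sequence (bases 2 and 3) on [0, 1)^2."""
    return np.stack([van_der_corput(n, 2), van_der_corput(n, 3)], axis=1)

def field(x):
    """Hypothetical stand-in for a neural field: queryable at arbitrary points."""
    return np.sin(2 * np.pi * x[:, 0]) * np.cos(2 * np.pi * x[:, 1]) + x[:, 0]

def kernel(x):
    """Illustrative fixed weighting function centered at (0.5, 0.5)."""
    return np.exp(-((x[:, 0] - 0.5) ** 2 + (x[:, 1] - 0.5) ** 2) / 0.1)

def integral_layer(points):
    """Quasi-Monte Carlo estimate of the integral of kernel * field over [0, 1]^2."""
    return np.mean(kernel(points) * field(points))

# Same continuous operation evaluated under two different discretizations.
out_coarse = integral_layer(halton_2d(512))
out_fine = integral_layer(halton_2d(4096))
gap = abs(out_coarse - out_fine)
print(f"coarse={out_coarse:.5f} fine={out_fine:.5f} gap={gap:.2e}")
```

Because both point sets have low discrepancy, the two estimates should agree closely even though the sample counts differ by a factor of eight, which is the kind of deviation the abstract's bounds are concerned with.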

ImageNet

Cityscapes