Deep Transformation-Invariant Clustering

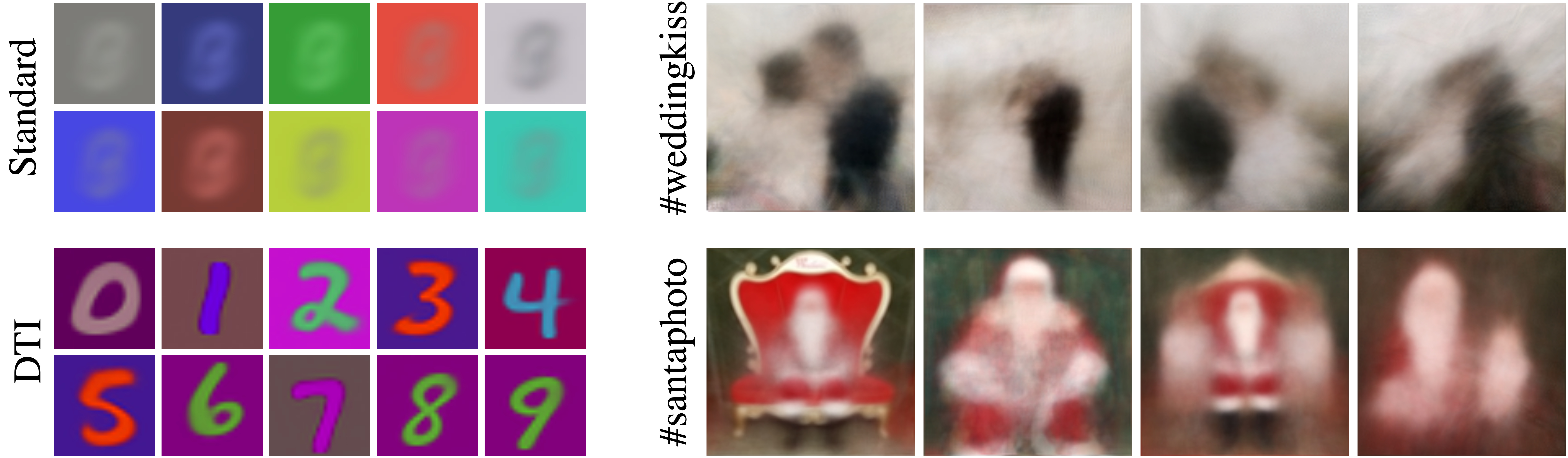

Recent advances in image clustering typically focus on learning better deep representations. In contrast, we present an orthogonal approach that does not rely on abstract features but instead learns to predict image transformations and performs clustering directly in image space. This learning process fits naturally into the gradient-based training of K-means and Gaussian mixture models, without requiring any additional loss or hyper-parameters. It leads us to two new deep transformation-invariant clustering frameworks, which jointly learn prototypes and transformations. More specifically, we use deep learning modules that enable us to achieve invariance to spatial, color and morphological transformations. Our approach is conceptually simple and comes with several advantages, including the ability to easily adapt the desired invariance to the task and strong interpretability of both cluster centers and cluster assignments. We demonstrate that our novel approach yields competitive and highly promising results on standard image clustering benchmarks. Finally, we showcase its robustness and the advantages of its improved interpretability by visualizing clustering results over real photograph collections.
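The idea of clustering in image space with transformed prototypes can be illustrated with a small sketch. It only evaluates a transformation-invariant K-means style loss: each prototype is transformed toward the sample before the distance is measured, and the sample is assigned to the closest transformed prototype. Everything here is an assumption made for illustration: the function names are hypothetical, the "images" are short lists of intensities, the only transformation is an affine color change (scale and offset), and the deep network that predicts transformation parameters is replaced by a closed-form least-squares fit. Gradient-based training of the prototypes is not shown.

```python
# Illustrative sketch only. All names are hypothetical, and the closed-form
# fit below is a stand-in for the learned transformation predictor.

def fit_affine_color(x, c):
    """Least-squares (scale, offset) so that scale * c + offset best matches x."""
    n = len(c)
    mean_c = sum(c) / n
    mean_x = sum(x) / n
    var_c = sum((ci - mean_c) ** 2 for ci in c)
    if var_c == 0.0:
        return 0.0, mean_x  # flat prototype: only the offset can help
    cov = sum((ci - mean_c) * (xi - mean_x) for ci, xi in zip(c, x))
    scale = cov / var_c
    return scale, mean_x - scale * mean_c


def transform(c, params):
    """Apply an affine color transformation to prototype c."""
    scale, offset = params
    return [scale * ci + offset for ci in c]


def sq_dist(a, b):
    """Squared Euclidean distance between two equally sized lists."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def dti_kmeans_loss(samples, prototypes, predict=fit_affine_color):
    """Return (total loss, assignments).

    Each sample is compared with every prototype after that prototype has
    been transformed toward the sample; the smallest distance is added to
    the loss and its index is recorded as the cluster assignment.
    """
    total, assignments = 0.0, []
    for x in samples:
        dists = [sq_dist(x, transform(c, predict(x, c))) for c in prototypes]
        k = min(range(len(prototypes)), key=dists.__getitem__)
        assignments.append(k)
        total += dists[k]
    return total, assignments


if __name__ == "__main__":
    prototypes = [[0.0, 1.0, 0.0, 1.0], [0.0, 0.0, 1.0, 1.0]]
    samples = [
        [0.2, 0.7, 0.2, 0.7],  # prototype 0 with lower contrast and an offset
        [0.5, 0.5, 0.9, 0.9],  # prototype 1 with a compressed intensity range
    ]
    loss, assignments = dti_kmeans_loss(samples, prototypes)
    print(assignments, loss)
```

Because the distance is measured between the raw sample and a transformed prototype, both the prototypes and the fitted transformation can be inspected directly, which is the interpretability advantage the abstract refers to. In the approach described above, the parameters would be predicted by a trained network and the prototypes updated by gradient descent, rather than fitted per pair as in this sketch.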

NeurIPS 2020

Datasets

MNIST

SVHN

Fashion-MNIST

USPS

FRGC

Results from the Paper

Ranked #2 on Unsupervised Image Classification on SVHN (using extra training data)