Density Fixing: Simple yet Effective Regularization Method based on the Class Prior

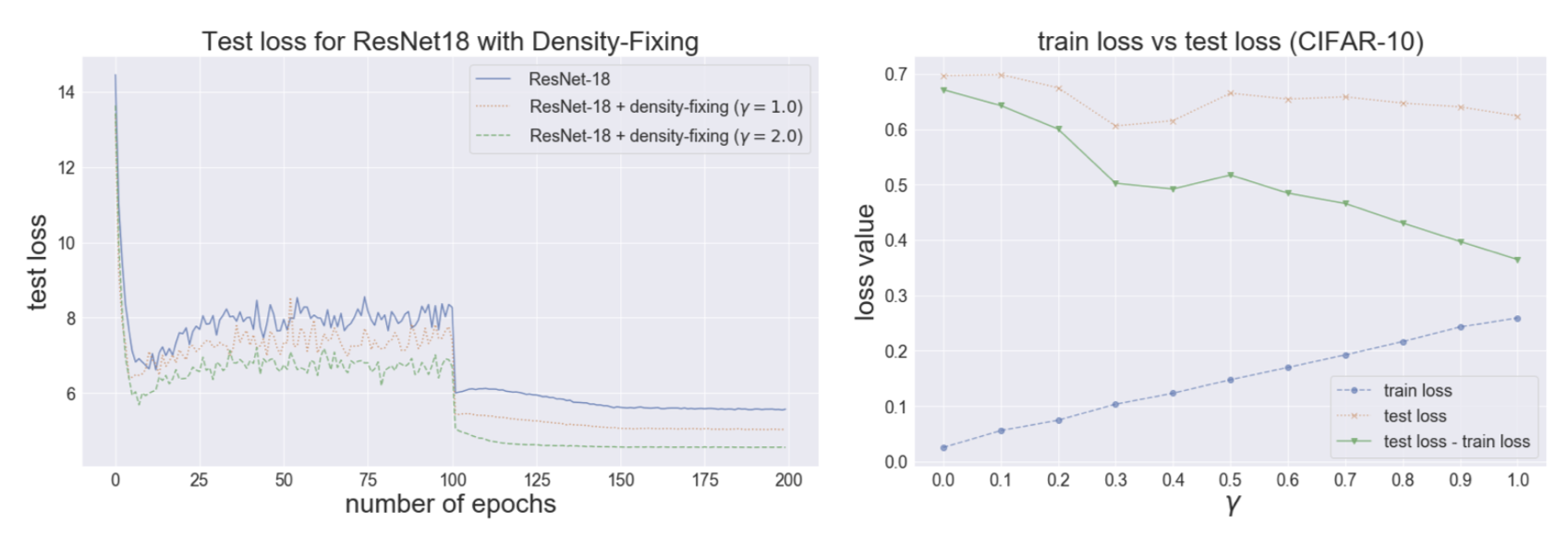

Machine learning models often overfit when labeled data is scarce. To tackle this problem, we propose a framework of regularization methods, called density-fixing, that applies to both supervised and semi-supervised learning. The proposed regularization improves generalization performance by forcing the model to approximate the class prior distribution, that is, the frequency of occurrence of each class. The regularization term is derived naturally from the maximum likelihood estimation formula and is theoretically justified. We further provide several theoretical analyses of the proposed method, including its asymptotic behavior. Our experimental results on multiple benchmark datasets support our argument, and we suggest that this simple and effective regularization method is useful in real-world machine learning problems.
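To make the idea of "forcing the model to approximate the class prior" concrete, the following is a minimal sketch of one plausible reading, not the authors' implementation. It assumes the penalty is a KL divergence between a known class prior and the batch-averaged predicted distribution, added to the usual cross-entropy with a weight `gamma`. The function names, the direction of the KL term, the batch averaging, and `gamma` are all illustrative assumptions that the abstract does not specify.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax with the usual max-subtraction for stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def cross_entropy(probs, labels, eps=1e-12):
    """Mean negative log-likelihood of the true labels."""
    n = labels.shape[0]
    return float(-np.mean(np.log(probs[np.arange(n), labels] + eps)))


def prior_kl_penalty(probs, class_prior, eps=1e-12):
    """KL(class_prior || batch-mean prediction).

    Assumption: the penalty compares the prior with the average
    prediction over the batch; the paper may define it differently.
    """
    mean_pred = probs.mean(axis=0)
    log_ratio = np.log(class_prior + eps) - np.log(mean_pred + eps)
    return float(np.sum(class_prior * log_ratio))


def regularized_loss(logits, labels, class_prior, gamma=1.0):
    """Cross-entropy plus gamma times the prior-matching penalty."""
    probs = softmax(logits)
    return cross_entropy(probs, labels) + gamma * prior_kl_penalty(probs, class_prior)
```

Under these assumptions, the penalty is close to zero when the averaged predictions already match the prior and grows when the model concentrates its predictions on a few classes. For semi-supervised use, `prior_kl_penalty` needs no labels, so it could in principle be evaluated on unlabeled batches as well.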

CIFAR-10

CIFAR-100

SVHN

STL-10