Domain-Specific Bias Filtering for Single Labeled Domain Generalization

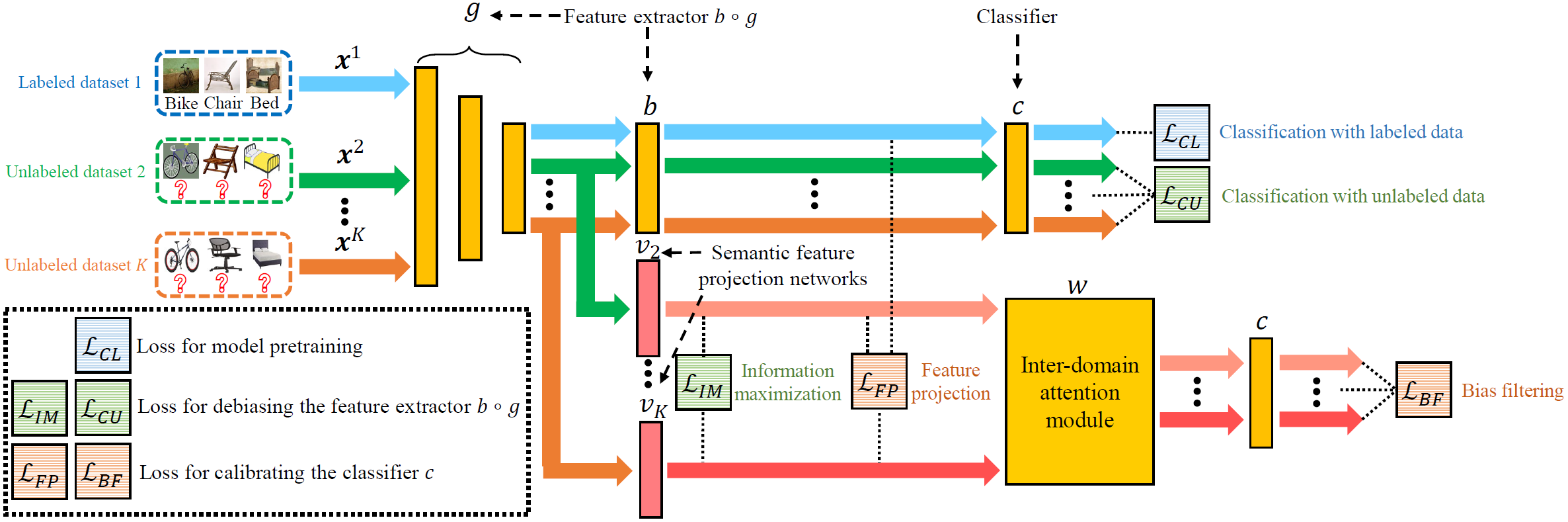

Conventional Domain Generalization (CDG) utilizes multiple labeled source datasets to train a generalizable model for unseen target domains. However, due to expensive annotation costs, the requirement of labeling all the source data is hard to meet in real-world applications. In this paper, we investigate a Single Labeled Domain Generalization (SLDG) task in which only one source domain is labeled, which is more practical and challenging than the CDG task. A major obstacle in the SLDG task is the discriminability-generalization bias: the discriminative information in the labeled source dataset may contain domain-specific bias, constraining the generalization of the trained model. To tackle this challenging task, we propose a novel framework called Domain-Specific Bias Filtering (DSBF), which initializes a discriminative model with the labeled source data and then filters out its domain-specific bias with the unlabeled source data to improve generalization. We divide the filtering process into (1) feature extractor debiasing via k-means clustering-based semantic feature re-extraction and (2) classifier rectification through attention-guided semantic feature projection. DSBF unifies the exploration of the labeled and the unlabeled source data to enhance both the discriminability and the generalization of the trained model. We further provide theoretical analysis to verify the proposed domain-specific bias filtering process. Extensive experiments on multiple datasets show the superior performance of DSBF in tackling both the challenging SLDG task and the CDG task.
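The abstract does not spell out how k-means is applied to the unlabeled source data, so the following is only a minimal sketch of one plausible reading, not the paper's implementation. It assumes that features have already been extracted as plain vectors, that cluster centroids are initialized from the class means of the labeled source features, and that the resulting cluster assignments serve as pseudo-labels for the unlabeled features. The function names `class_prototypes` and `kmeans_pseudo_labels` are hypothetical and chosen for illustration.

```python
from typing import List, Sequence, Tuple

Vector = List[float]


def mean(vectors: Sequence[Sequence[float]]) -> Vector:
    """Component-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def class_prototypes(
    feats: Sequence[Sequence[float]], labels: Sequence[int], num_classes: int
) -> List[Vector]:
    """Mean feature per class, computed on the labeled source domain.

    Assumption: these prototypes seed the k-means centroids.
    """
    protos = []
    for c in range(num_classes):
        members = [f for f, y in zip(feats, labels) if y == c]
        if not members:
            raise ValueError(f"class {c} has no labeled samples")
        protos.append(mean(members))
    return protos


def kmeans_pseudo_labels(
    unlabeled_feats: Sequence[Sequence[float]],
    init_centroids: Sequence[Sequence[float]],
    iters: int = 10,
) -> Tuple[List[int], List[Vector]]:
    """Standard k-means (Lloyd's algorithm) on unlabeled features.

    Returns one cluster index per unlabeled feature (used here as a
    pseudo-label) and the final centroids.
    """
    centroids = [list(c) for c in init_centroids]
    assignments: List[int] = []
    for _ in range(iters):
        # Assignment step: nearest centroid for every unlabeled feature.
        new_assignments = [
            min(range(len(centroids)), key=lambda k: sq_dist(f, centroids[k]))
            for f in unlabeled_feats
        ]
        if new_assignments == assignments:
            break  # converged
        assignments = new_assignments
        # Update step: move each centroid to the mean of its members.
        for k in range(len(centroids)):
            members = [f for f, a in zip(unlabeled_feats, assignments) if a == k]
            if members:  # keep the old centroid if a cluster is empty
                centroids[k] = mean(members)
    return assignments, centroids
```

In this sketch the labeled domain only provides the starting centroids; after the first update the centroids are driven by the unlabeled features, which is one simple way to reduce the influence of the labeled domain on the cluster structure. How DSBF actually re-extracts features and rectifies the classifier from such clusters is described in the paper itself, not here.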

Office-Home

DomainNet

PACS