DualView: Data Attribution from the Dual Perspective

Local data attribution (or influence estimation) techniques aim to estimate the impact that individual data points seen during training have on particular predictions of an already trained Machine Learning model at test time. Previous methods either do not perform consistently well across different evaluation criteria from the literature, are characterized by a high computational demand, or suffer from both. In this work we present DualView, a novel method for post-hoc data attribution based on surrogate modelling that demonstrates both high computational efficiency and good evaluation results. With a focus on neural networks, we evaluate our proposed technique against related principal local data attribution methods, using suitable quantitative evaluation strategies from the literature. We find that DualView requires considerably lower computational resources than other methods, while demonstrating comparable performance to competing approaches across evaluation metrics. Furthermore, our proposed method produces sparse explanations, where sparseness can be tuned via a hyperparameter. Finally, we showcase that with DualView, we can now render explanations from local data attributions compatible with established local feature attribution methods: for each prediction on (test) data points explained in terms of impactful samples from the training set, we are able to compute and visualize how the prediction on the (test) sample relates to each influential training sample in terms of features recognized by the model. We provide an Open Source implementation of DualView online, together with implementations of all other local data attribution methods we compare against, as well as of the metrics reported here, for full reproducibility.

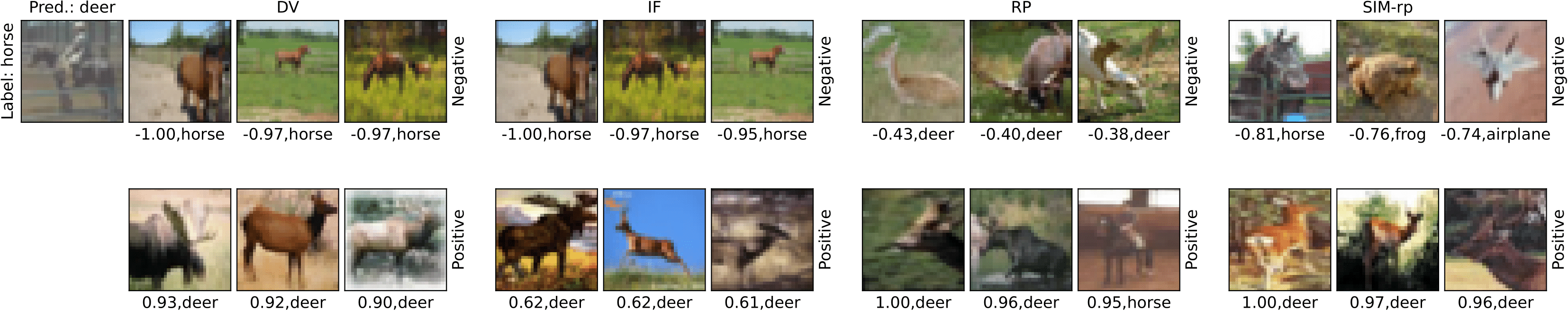

CIFAR-10
