EvoSeed: Unveiling the Threat on Deep Neural Networks with Real-World Illusions

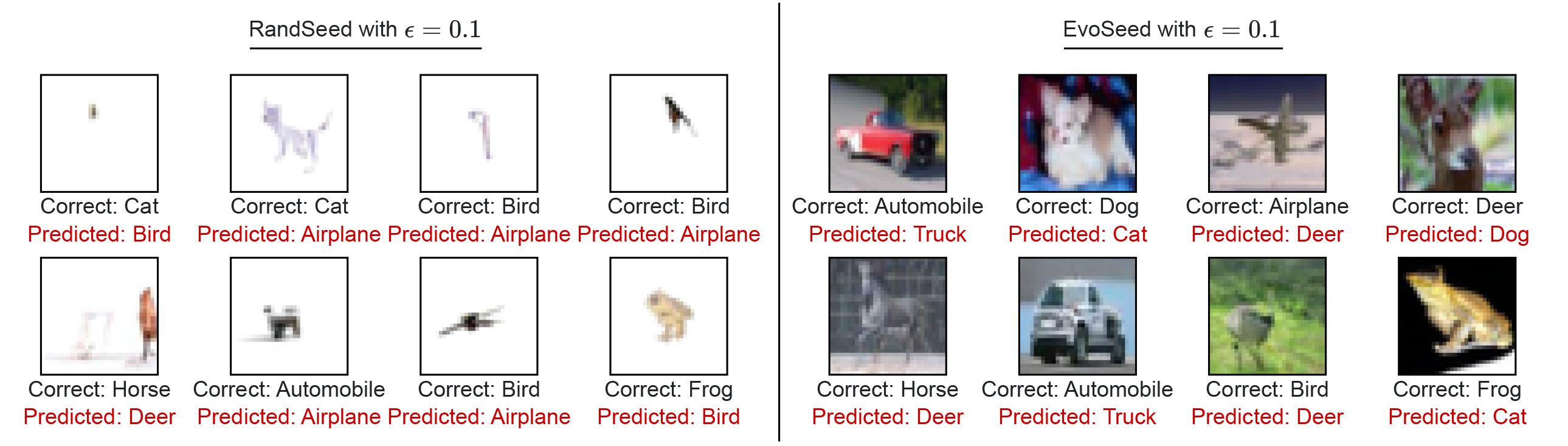

Deep neural networks can be exploited with natural adversarial samples: inputs that look unremarkable to human observers yet are misclassified by the network. Current approaches often rely on white-box access to the network to generate these samples, or they shift the distribution of the adversarial samples away from the training distribution. To alleviate these limitations, we propose EvoSeed, a novel evolutionary strategy-based algorithmic framework for generating natural adversarial samples. EvoSeed uses auxiliary Diffusion and Classifier models to operate in a model-agnostic black-box setting. We employ CMA-ES to optimize the search for an adversarial seed vector which, when processed by the Conditional Diffusion Model, yields an unrestricted natural adversarial sample that the Classifier Model misclassifies. Experiments show that the generated adversarial images are of high image quality and transfer to different classifiers. Our approach demonstrates the promise of evolutionary algorithms for improving the quality of adversarial samples. We hope our research opens new avenues for enhancing the robustness of deep neural networks in real-world scenarios. Project website: https://shashankkotyan.github.io/EvoSeed
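
The search loop described in the abstract can be illustrated with a minimal, self-contained sketch. Everything in it is an assumption made for illustration: `toy_generator` and `toy_classifier` are tiny random linear stand-ins for the Conditional Diffusion Model and the Classifier Model, the objective (minimize the classifier's confidence in the conditioning label) is one plausible choice rather than the paper's stated loss, and the optimizer is a simplified elite-averaging evolution strategy, not CMA-ES (it adapts neither the covariance matrix nor the step size). The point is only to show the black-box structure: the search queries classifier outputs and never touches gradients.

```python
import math
import random

NUM_CLASSES = 3   # hypothetical toy setting
DIM = 8           # hypothetical seed / "image" dimensionality


def make_weights(rng, rows, cols):
    """Random weight matrix as a list of rows."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


_rng = random.Random(0)
GEN_W = make_weights(_rng, DIM, DIM)                  # stand-in generator weights
CLASS_OFFSETS = make_weights(_rng, NUM_CLASSES, DIM)  # stand-in class conditioning
CLF_W = make_weights(_rng, NUM_CLASSES, DIM)          # stand-in classifier weights


def toy_generator(seed, label):
    """Stand-in for a conditional generative model: (seed, label) -> 'image'."""
    return [
        math.tanh(sum(w * s for w, s in zip(GEN_W[i], seed)) + CLASS_OFFSETS[label][i])
        for i in range(DIM)
    ]


def toy_classifier(image):
    """Stand-in for the black-box classifier: 'image' -> softmax probabilities."""
    scores = [sum(w * x for w, x in zip(row, image)) for row in CLF_W]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fitness(seed, label):
    """Assumed objective: classifier confidence in the conditioning label."""
    return toy_classifier(toy_generator(seed, label))[label]


def search_adversarial_seed(initial_seed, label, sigma=0.3, popsize=16,
                            elite=4, generations=50, rng_seed=1):
    """Simplified evolution strategy (not CMA-ES) over the seed vector."""
    rng = random.Random(rng_seed)
    mean = list(initial_seed)
    best_seed, best_fit = list(mean), fitness(mean, label)
    for _ in range(generations):
        # Sample candidate seeds around the current mean.
        population = [[m + sigma * rng.gauss(0.0, 1.0) for m in mean]
                      for _ in range(popsize)]
        scored = sorted(((fitness(c, label), c) for c in population),
                        key=lambda pair: pair[0])
        if scored[0][0] < best_fit:
            best_fit, best_seed = scored[0]
        # Move the mean to the average of the lowest-confidence candidates.
        elites = [c for _, c in scored[:elite]]
        mean = [sum(column) / elite for column in zip(*elites)]
        # Stop once the best sample is no longer assigned the conditioning label.
        probs = toy_classifier(toy_generator(best_seed, label))
        if max(range(NUM_CLASSES), key=probs.__getitem__) != label:
            break
    return best_seed, best_fit


initial_seed = [random.Random(42).gauss(0.0, 1.0) for _ in range(DIM)]
start_conf = fitness(initial_seed, 0)
adv_seed, adv_conf = search_adversarial_seed(initial_seed, label=0)
print(f"confidence in label 0: {start_conf:.3f} -> {adv_conf:.3f}")
```

In a realistic setting the stand-ins would be replaced by a pretrained conditional diffusion model and the classifier under attack, and the simplified strategy by a CMA-ES implementation; the surrounding loop of sampling seeds, generating, querying, and updating would keep the same shape.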


CIFAR-10