Explaining Neural Networks by Decoding Layer Activations

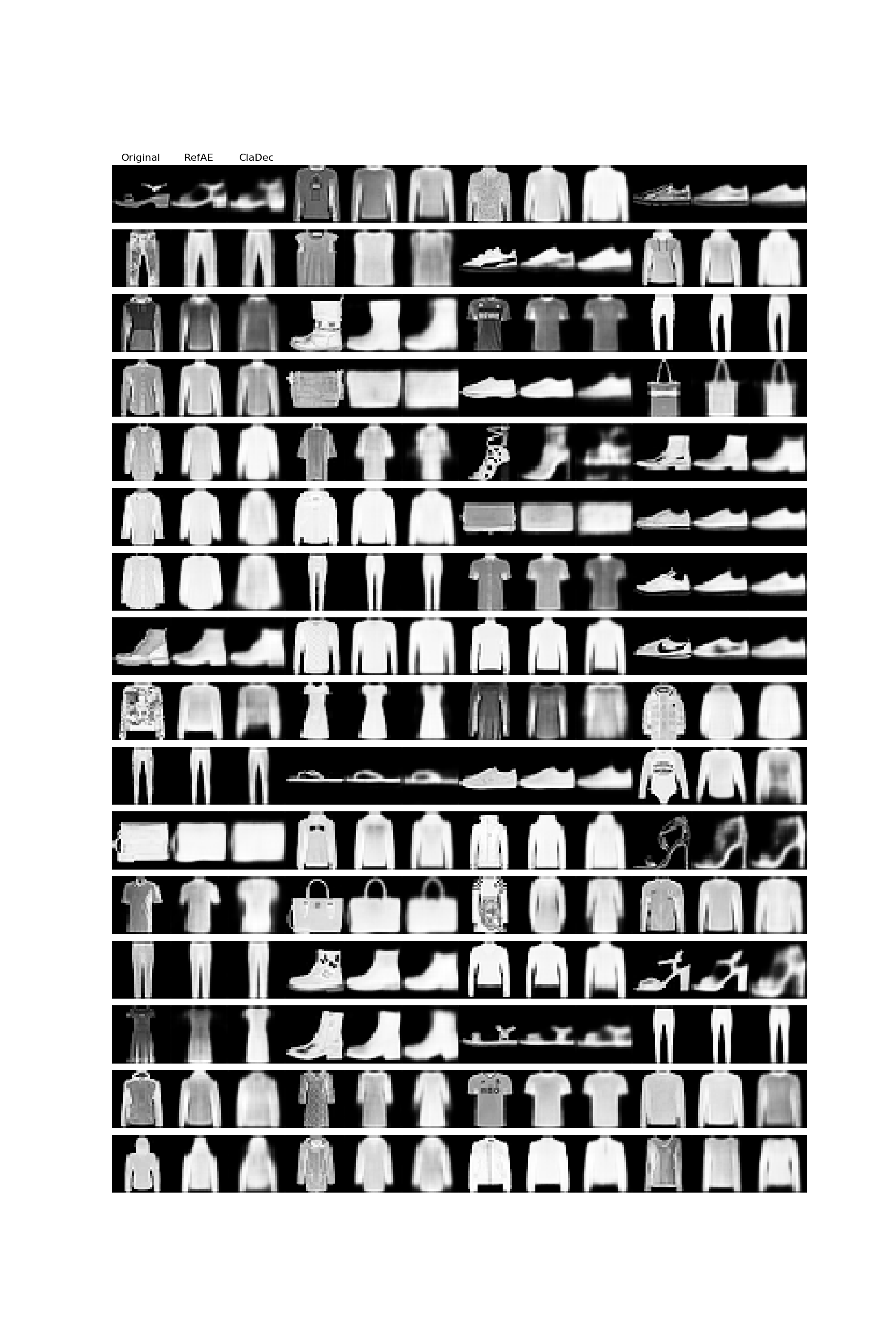

We present a 'CLAssifier-DECoder' architecture (ClaDec) that facilitates comprehension of the output of an arbitrary layer in a neural network (NN). It uses a decoder to transform the non-interpretable representation of the given layer into a representation closer to the domain a human is familiar with. In an image recognition problem, one can recognize what information a layer represents by contrasting the images reconstructed by ClaDec with those of a conventional auto-encoder (AE) serving as a reference. We also extend ClaDec to allow trading off human interpretability against fidelity. We evaluate our approach for image classification using convolutional NNs. We show that reconstructed visualizations using encodings from a classifier capture more information relevant to classification than those of conventional AEs. Relevant code is available at https://github.com/JohnTailor/ClaDec.
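The decoding idea in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the random frozen ReLU layer, the least-squares linear decoder, and every name below (`layer_activations`, `fit_linear_decoder`, `tradeoff_loss`, `alpha`) are assumptions made for illustration. In particular, the weighted-sum form of the trade-off loss is only one plausible reading of the extension mentioned above.

```python
import numpy as np


def layer_activations(x, W, b):
    # Frozen ReLU layer standing in for the classifier layer to be explained
    # (assumption: a real classifier would be trained first, then frozen).
    return np.maximum(x @ W + b, 0.0)


def fit_linear_decoder(codes, inputs):
    # Least-squares linear decoder mapping layer activations back to input
    # space. A learned decoder network is replaced by this closed-form fit.
    D, *_ = np.linalg.lstsq(codes, inputs, rcond=None)
    return D


def reconstruction_error(codes, inputs, D):
    # Mean squared error between decoded activations and the original inputs.
    return float(np.mean((codes @ D - inputs) ** 2))


def tradeoff_loss(reconstruction_loss, classification_loss, alpha):
    # Hypothetical weighted sum: alpha = 0 uses only the reconstruction term,
    # alpha = 1 uses only the classification term.
    return (1.0 - alpha) * reconstruction_loss + alpha * classification_loss


rng = np.random.default_rng(0)
inputs = rng.normal(size=(256, 16))          # toy stand-in for images
W = rng.normal(scale=0.25, size=(16, 8))     # frozen layer weights
b = np.zeros(8)

codes = layer_activations(inputs, W, b)      # the representation to explain
D = fit_linear_decoder(codes, inputs)

err_zero = reconstruction_error(codes, inputs, np.zeros_like(D))
err_fit = reconstruction_error(codes, inputs, D)
print(f"error with untrained decoder: {err_zero:.3f}")
print(f"error with fitted decoder:    {err_fit:.3f}")
```

Whatever the fitted decoder fails to reconstruct is information the layer has discarded. Comparing these reconstructions with those of an auto-encoder trained purely for reconstruction is the contrast the abstract describes.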


Fashion-MNIST
