FBCNet: A Multi-view Convolutional Neural Network for Brain-Computer Interface

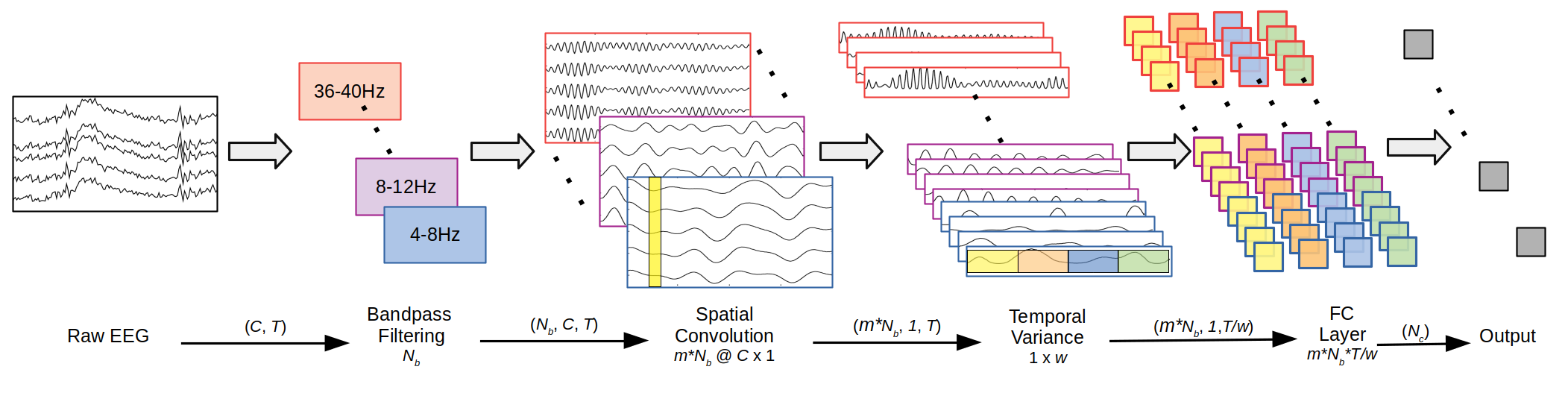

Lack of adequate training samples and noisy, high-dimensional features are key challenges faced by Motor Imagery (MI) decoding algorithms for electroencephalogram (EEG) based Brain-Computer Interfaces (BCIs). To address these challenges, and inspired by the neuro-physiological signatures of MI, this paper proposes a novel Filter-Bank Convolutional Network (FBCNet) for MI classification. FBCNet employs a multi-view data representation followed by spatial filtering to extract spectro-spatially discriminative features. This multistage approach enables efficient training of the network even when limited training data is available. More significantly, in FBCNet, we propose a novel Variance layer that effectively aggregates the EEG time-domain information. With this design, we compare FBCNet with state-of-the-art (SOTA) BCI algorithms on four MI datasets: the BCI Competition IV dataset 2a (BCIC-IV-2a), the OpenBMI dataset, and two large datasets from chronic stroke patients. The results show that, by achieving 76.20% 4-class classification accuracy, FBCNet sets a new SOTA for the BCIC-IV-2a dataset. On the other three datasets, FBCNet yields up to 8% higher binary classification accuracies. Additionally, using explainable AI techniques, we present one of the first reports on the differences in discriminative EEG features between healthy subjects and stroke patients. The FBCNet source code is available at https://github.com/ravikiran-mane/FBCNet.
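To make the idea of a Variance layer concrete, the following is a minimal NumPy sketch of temporal aggregation by variance. It is not taken from the FBCNet repository: the function name `variance_layer`, the use of non-overlapping windows, the `n_windows` parameter, the log transform, and the tensor layout (batch, frequency bands, spatial filters, time) are all assumptions made for illustration.

```python
import numpy as np

def variance_layer(x, n_windows=4, eps=1e-6):
    """Aggregate the last (time) axis into per-window log-variance features.

    Hypothetical helper, not the authors' implementation.
    x: array of shape (..., n_time); returns an array of shape (..., n_windows).
    """
    n_time = x.shape[-1]
    win = n_time // n_windows
    if win < 2:
        raise ValueError("each window needs at least two time samples")
    # Drop trailing samples that do not fill a complete window.
    trimmed = x[..., : win * n_windows]
    # Split the time axis into (n_windows, win) without copying channel data.
    windows = trimmed.reshape(*x.shape[:-1], n_windows, win)
    # Population variance inside each window, over the sample axis.
    var = windows.var(axis=-1)
    # Assumed log transform; clipping avoids log(0) for flat signals.
    return np.log(np.clip(var, eps, None))

# Assumed layout: (batch, frequency bands, spatial filters, time samples).
rng = np.random.default_rng(0)
features = rng.standard_normal((2, 9, 32, 1000))
out = variance_layer(features, n_windows=4)
print(out.shape)
```

Under these assumptions, each spatially filtered, band-limited signal is reduced from 1000 time samples to four values, which is one way a variance-based layer can shrink the feature dimension before classification.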
