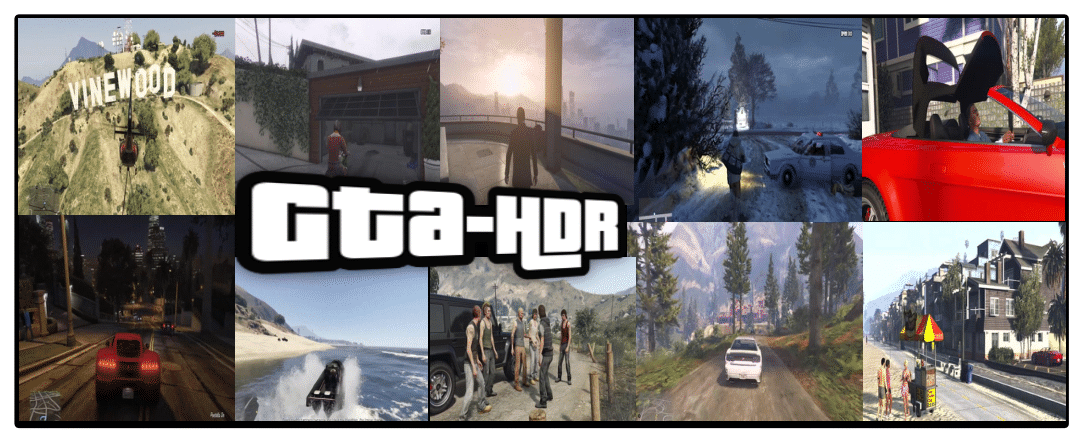

GTA-HDR: A Large-Scale Synthetic Dataset for HDR Image Reconstruction

High Dynamic Range (HDR) content (i.e., images and videos) has a broad range of applications. However, capturing HDR content from real-world scenes is expensive and time-consuming. Therefore, the challenging task of reconstructing visually accurate HDR images from their Low Dynamic Range (LDR) counterparts is gaining attention in the vision research community. A major challenge in this research problem is the lack of datasets that capture diverse scene conditions (e.g., lighting, shadows, weather, locations, landscapes, objects, humans, buildings) and various image features (e.g., color, contrast, saturation, hue, luminance, brightness, radiance). To address this gap, we introduce GTA-HDR, a large-scale synthetic dataset of photo-realistic HDR images sampled from the GTA-V video game. We perform a thorough evaluation of the proposed dataset, which demonstrates significant qualitative and quantitative improvements in state-of-the-art HDR image reconstruction methods. Furthermore, we demonstrate the effectiveness of the proposed dataset and its impact on additional computer vision tasks, including 3D human pose estimation, human body part segmentation, and holistic scene segmentation. The dataset, data collection pipeline, and evaluation code are available at: https://github.com/HrishavBakulBarua/GTA-HDR.
