Hierarchical Density Order Embeddings

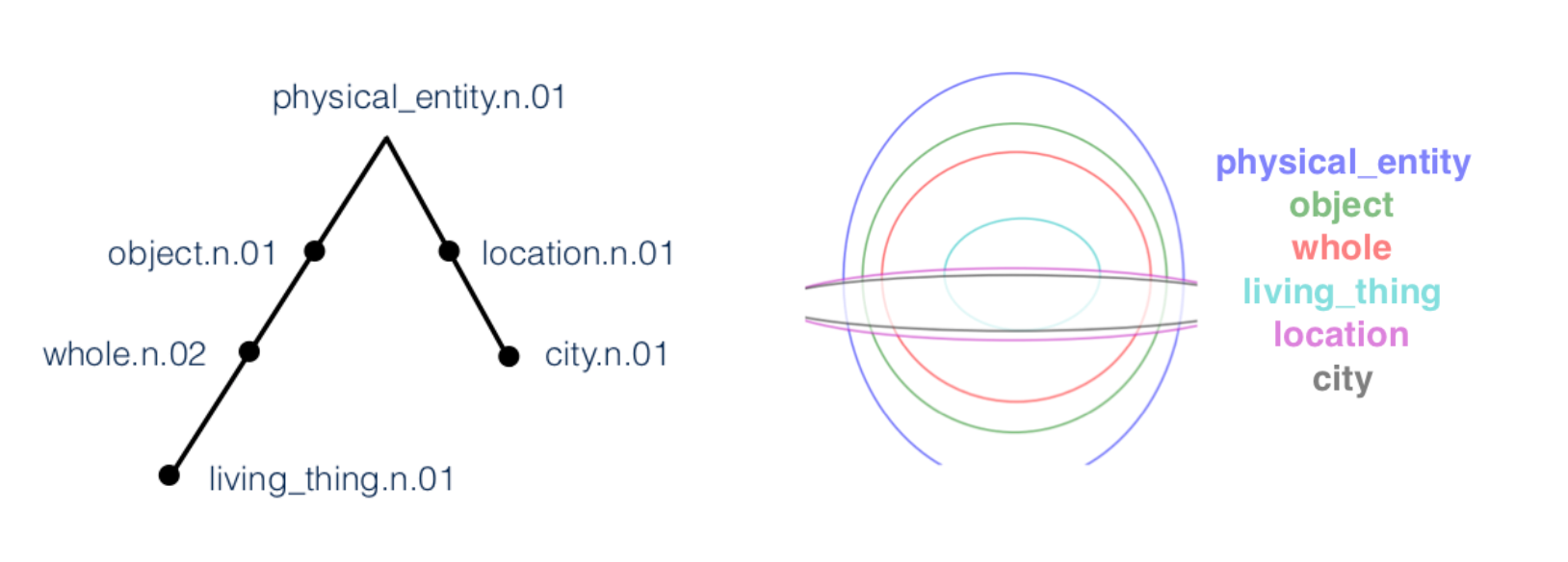

By representing words with probability densities rather than point vectors, probabilistic word embeddings can capture rich and interpretable semantic information and uncertainty. The uncertainty information can be particularly meaningful in capturing entailment relationships -- whereby general words such as "entity" correspond to broad distributions that encompass more specific words such as "animal" or "instrument". We introduce density order embeddings, which learn hierarchical representations through encapsulation of probability densities. In particular, we propose simple yet effective loss functions and distance metrics, as well as graph-based schemes to select negative samples to better learn hierarchical density representations. Our approach provides state-of-the-art performance on the WordNet hypernym relationship prediction task and the challenging HyperLex lexical entailment dataset -- while retaining a rich and interpretable density representation.
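To make the idea of encapsulation concrete, here is a minimal sketch of an asymmetric penalty between two densities. The abstract does not name the density family, the divergence, or the loss form, so everything in it is an assumption for illustration: the densities are diagonal Gaussians, the divergence is KL, and the penalty is a hinge with a hypothetical threshold `gamma`. The function names and toy embeddings are likewise invented for this example.

```python
import math


def kl_diag_gaussian(mu_f, var_f, mu_g, var_g):
    """KL(f || g) for diagonal Gaussians f = N(mu_f, diag(var_f)) and g = N(mu_g, diag(var_g))."""
    total = 0.0
    for mf, vf, mg, vg in zip(mu_f, var_f, mu_g, var_g):
        # Per-dimension closed form: log(vg/vf) + (vf + (mf - mg)^2) / vg - 1
        total += math.log(vg / vf) + (vf + (mf - mg) ** 2) / vg - 1.0
    return 0.5 * total


def order_penalty(specific, general, gamma=0.0):
    """Hinge penalty (assumed form): zero once KL(specific || general) is at most gamma."""
    return max(0.0, kl_diag_gaussian(*specific, *general) - gamma)


# Hypothetical 2-D embeddings as (mean, variance) pairs, not learned values.
entity = ([0.0, 0.0], [4.0, 4.0])    # broad density for a general word
animal = ([0.5, -0.5], [1.0, 1.0])   # narrower density lying inside `entity`

forward = order_penalty(animal, entity)    # specific placed inside general
backward = order_penalty(entity, animal)   # general forced inside specific

print(f"animal inside entity: {forward:.4f}")
print(f"entity inside animal: {backward:.4f}")
```

Because the divergence is asymmetric, the narrow density fits inside the broad one at a lower cost than the reverse, which is the directional signal a hypernym relation needs. A nonzero `gamma` would let sufficiently well-encapsulated pairs incur no penalty at all.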

ICLR 2018