Hierarchical Inter-Message Passing for Learning on Molecular Graphs

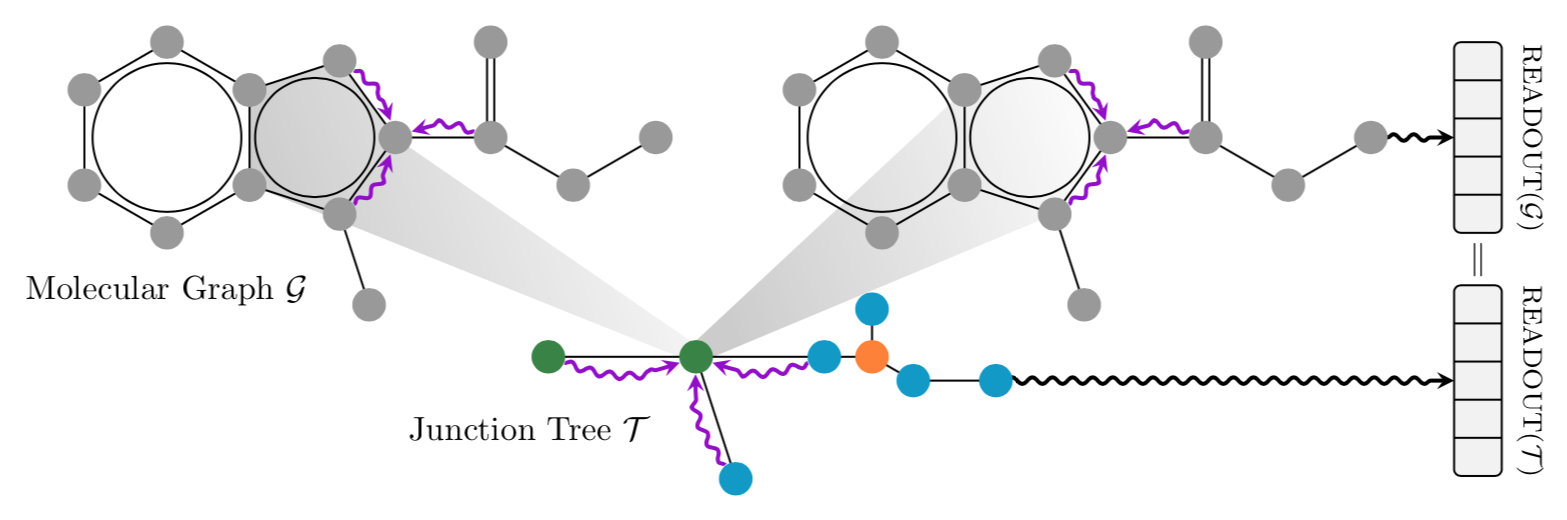

We present a hierarchical neural message passing architecture for learning on molecular graphs. Our model takes as input two complementary graph representations: the raw molecular graph and its associated junction tree, whose nodes represent meaningful clusters in the original graph, e.g., rings or bridged compounds. We then learn a molecule's representation by passing messages inside each graph and exchanging messages between the two representations using a coarse-to-fine and fine-to-coarse information flow. Our method overcomes some of the restrictions known from classical GNNs, such as the inability to detect cycles, while still being very efficient to train. We validate its performance on the ZINC dataset and on datasets from the MoleculeNet benchmark collection.
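The information flow described above can be illustrated with a minimal sketch. It is not the authors' implementation: the learned neural update functions are replaced by plain unweighted sums, the ordering of the four steps within a round is an assumption, and all names (`neighbors_sum`, `inter_message_passing_round`, `membership`) are hypothetical. The toy molecule is a six-membered ring with one substituent atom, so the junction tree has two cluster nodes: the ring and the single bond outside it.

```python
# Illustrative sketch only: unweighted sums stand in for learned updates.

def neighbors_sum(features, edges):
    """For every node, sum the feature vectors of its neighbors (undirected edges)."""
    dim = len(features[0])
    out = [[0.0] * dim for _ in features]
    for u, v in edges:
        for k in range(dim):
            out[u][k] += features[v][k]
            out[v][k] += features[u][k]
    return out


def add(a, b):
    """Element-wise sum of two lists of feature vectors."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def scatter(source, n_targets, pairs):
    """Sum source vectors into targets; `pairs` holds (source_idx, target_idx)."""
    dim = len(source[0])
    out = [[0.0] * dim for _ in range(n_targets)]
    for s, t in pairs:
        for k in range(dim):
            out[t][k] += source[s][k]
    return out


def inter_message_passing_round(atom_x, atom_edges, clus_x, tree_edges, membership):
    """One round; `membership` lists (atom, cluster) pairs. Step order is assumed."""
    # 1. Message passing inside the molecular graph.
    atom_x = add(atom_x, neighbors_sum(atom_x, atom_edges))
    # 2. Fine-to-coarse: each cluster receives the sum of its member atoms.
    clus_x = add(clus_x, scatter(atom_x, len(clus_x), membership))
    # 3. Message passing inside the junction tree.
    clus_x = add(clus_x, neighbors_sum(clus_x, tree_edges))
    # 4. Coarse-to-fine: each atom receives the sum of the clusters containing it.
    flipped = [(c, a) for a, c in membership]
    atom_x = add(atom_x, scatter(clus_x, len(atom_x), flipped))
    return atom_x, clus_x


# Toy molecule: ring atoms 0..5, substituent atom 6 bonded to atom 0.
atom_edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (5, 0), (0, 6)]
# Junction tree: cluster 0 is the ring, cluster 1 is the bond (0, 6).
tree_edges = [(0, 1)]
membership = [(a, 0) for a in range(6)] + [(0, 1), (6, 1)]

atom_x = [[1.0] for _ in range(7)]   # one scalar feature per atom
clus_x = [[0.0] for _ in range(2)]   # cluster features start empty

atom_out, clus_out = inter_message_passing_round(
    atom_x, atom_edges, clus_x, tree_edges, membership
)
```

In this sketch every ring atom receives the ring cluster's feature in step 4, so ring membership reaches each atom within a single round rather than having to be inferred from local neighborhoods alone.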

OGB