Impact of Fully Connected Layers on Performance of Convolutional Neural Networks for Image Classification

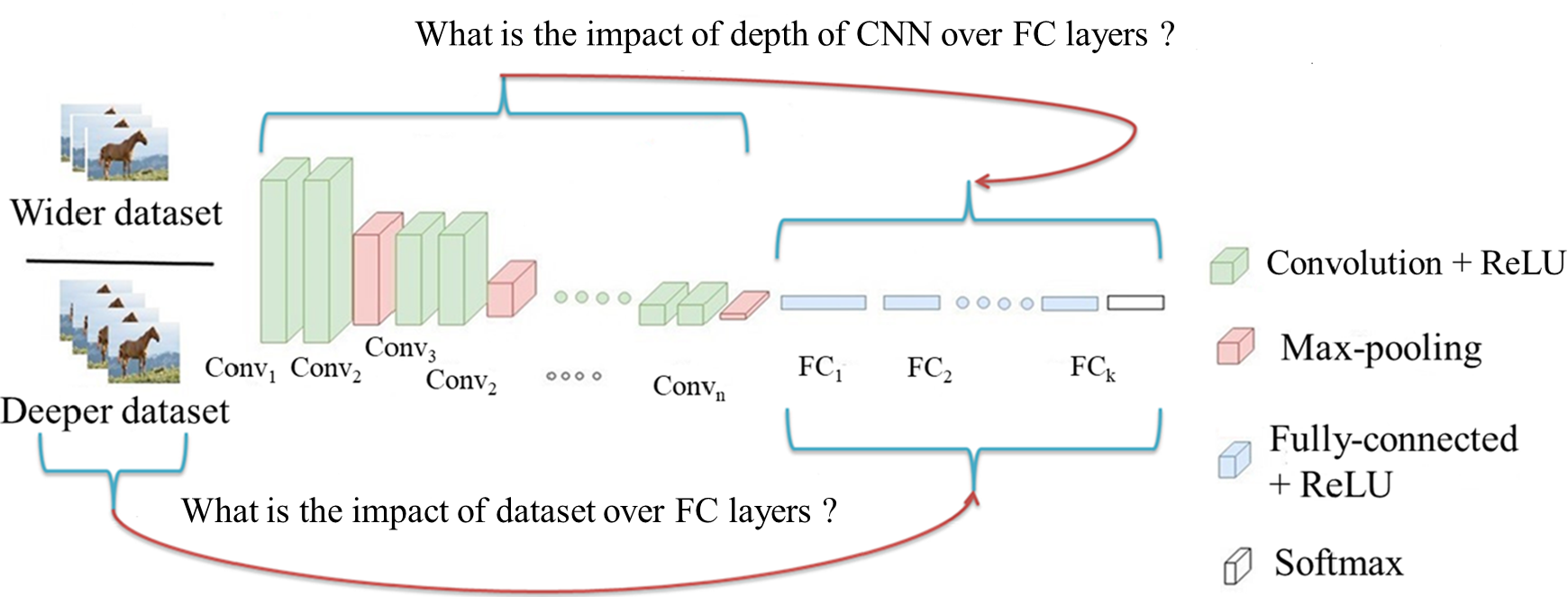

Convolutional Neural Networks (CNNs) have largely reduced the need for handcrafted features in domains such as computer vision, owing to their ability to learn problem-specific features from raw input data. However, selecting a dataset-specific CNN architecture, which is mostly done by experience or expertise, is a time-consuming and error-prone process. To automate the process of learning a CNN architecture, this paper attempts to find the relationship between Fully Connected (FC) layers and some characteristics of the datasets. CNN architectures, and more recently datasets as well, are categorized as deep, shallow, wide, etc. This paper tries to formalize these terms and to answer the following questions: (i) What is the impact of deeper/shallower architectures on the performance of the CNN w.r.t. FC layers? (ii) How do deeper/wider datasets influence the performance of the CNN w.r.t. FC layers? (iii) Which kind of architecture (deeper/shallower) is better suited to which kind of (deeper/wider) dataset? To address these questions, we performed experiments with three CNN architectures of different depths, varying the number of FC layers in each. We used four widely used datasets, CIFAR-10, CIFAR-100, Tiny ImageNet, and CRCHistoPhenotypes, to justify our findings in the context of the image classification problem. The source code of this research is available at https://github.com/shabbeersh/Impact-of-FC-layers.
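To make the idea of "varying the number of FC layers" concrete, the following is a minimal sketch of how a sweep over FC head configurations might be enumerated and compared by parameter count. It is not taken from the authors' repository: the function names, the flattened feature size of 512, the hidden-layer widths, and the 10-class output are all hypothetical values chosen for illustration.

```python
def fc_head_shapes(in_features, hidden_units, num_classes):
    """Return (fan_in, fan_out) pairs for an FC head that ends in a classifier layer."""
    sizes = [in_features, *hidden_units, num_classes]
    return list(zip(sizes[:-1], sizes[1:]))


def fc_head_params(shapes):
    """Count trainable parameters (weights plus biases) across the dense layers."""
    return sum(fan_in * fan_out + fan_out for fan_in, fan_out in shapes)


def sweep_fc_heads(in_features, num_classes, candidate_heads):
    """Map each FC-layer count to the parameter count of its head."""
    return {
        num_layers: fc_head_params(fc_head_shapes(in_features, hidden, num_classes))
        for num_layers, hidden in candidate_heads.items()
    }


# Hypothetical settings: a 512-dimensional flattened feature vector and 10 classes.
IN_FEATURES = 512
NUM_CLASSES = 10

# Hypothetical heads, keyed by total number of FC layers (including the classifier).
CANDIDATE_HEADS = {
    1: [],          # classifier layer only
    2: [256],       # one hidden FC layer
    3: [512, 256],  # two hidden FC layers
}

if __name__ == "__main__":
    for n, params in sweep_fc_heads(IN_FEATURES, NUM_CLASSES, CANDIDATE_HEADS).items():
        print(f"{n} FC layer(s): {params} parameters")
```

In an actual experiment, each configuration would be attached to a convolutional backbone and trained on each dataset; the sketch only shows how the FC head grows as layers are added.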

CIFAR-10

ImageNet

CIFAR-100
