Intra-Inter Camera Similarity for Unsupervised Person Re-Identification

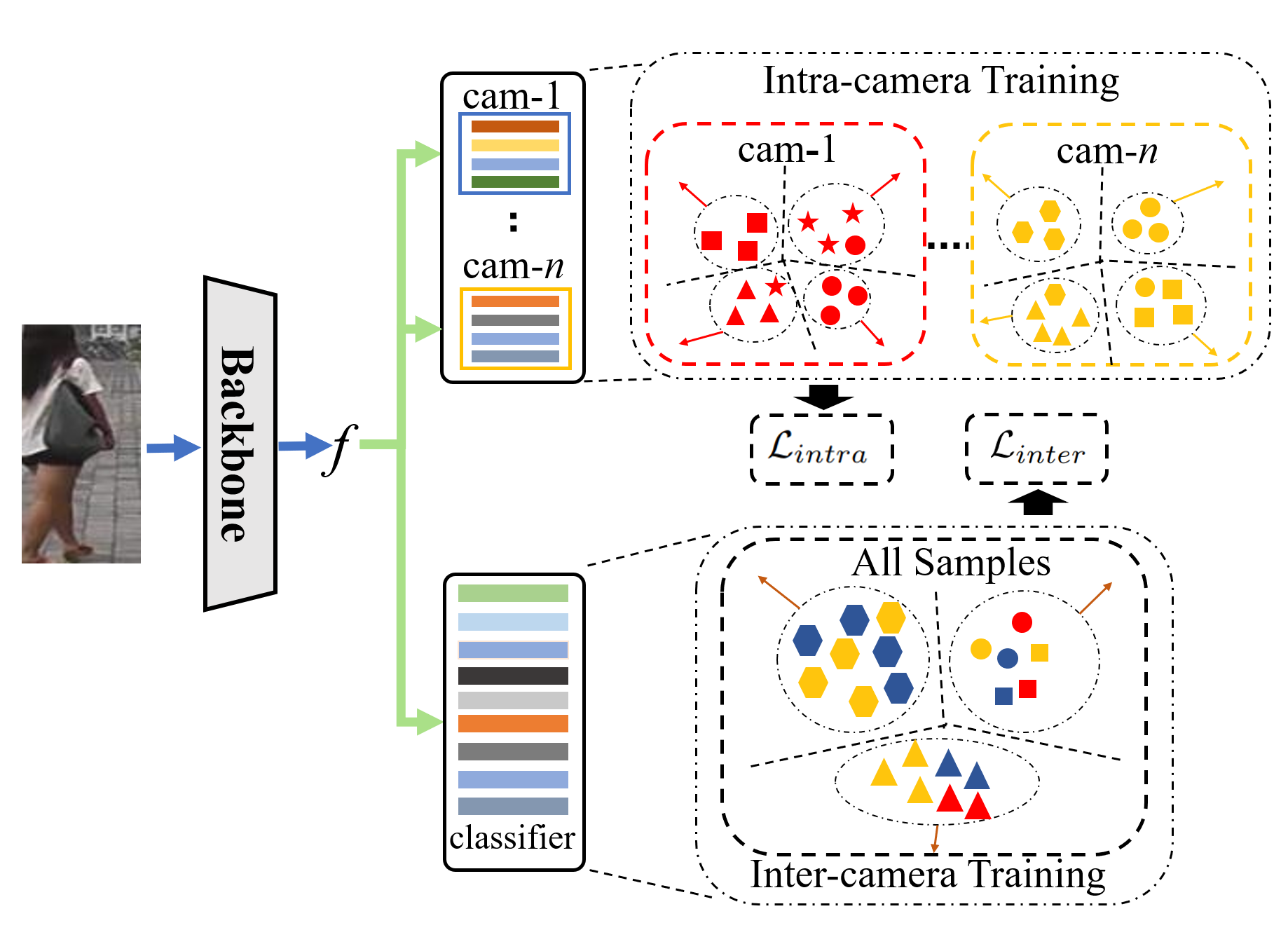

Most unsupervised person re-identification (Re-ID) methods generate pseudo-labels by measuring feature similarity without considering the distribution discrepancy among cameras, which degrades the accuracy of labels computed across cameras. This paper addresses this challenge by studying a novel intra-inter camera similarity for pseudo-label generation. We decompose the sample similarity computation into two stages, i.e., intra-camera and inter-camera computation. The intra-camera computation directly leverages CNN features to compute similarity within each camera. Pseudo-labels generated on different cameras train the Re-ID model in a multi-branch network. The second stage treats the classification scores of each sample on different cameras as a new feature vector. This new feature effectively alleviates the distribution discrepancy among cameras and generates more reliable pseudo-labels. We hence train our Re-ID model in two stages, with intra-camera and inter-camera pseudo-labels, respectively. This simple intra-inter camera similarity produces surprisingly good performance on multiple datasets, e.g., it achieves a rank-1 accuracy of 89.5% on the Market-1501 dataset, outperforming recent unsupervised works by more than 9%, and is comparable with the latest transfer learning works that leverage extra annotations.
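The second stage described in the abstract, where a sample's classification scores on the different cameras form a new feature vector, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration rather than the authors' implementation: the function names (`softmax`, `score_vector`, `score_similarity`) are hypothetical, the per-camera classifier outputs are hand-written toy logits, and the min/max overlap ratio is just one plausible way to compare two score vectors.

```python
import math


def softmax(logits):
    """Turn raw classifier outputs into scores that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def score_vector(per_camera_logits):
    """Concatenate the softmax scores a sample receives from each
    camera-specific classifier into one feature vector (hypothetical helper)."""
    vec = []
    for logits in per_camera_logits:
        vec.extend(softmax(logits))
    return vec


def score_similarity(u, v):
    """Assumed similarity: sum of element-wise minima divided by the
    sum of element-wise maxima of two score vectors."""
    num = sum(min(a, b) for a, b in zip(u, v))
    den = sum(max(a, b) for a, b in zip(u, v))
    return num / den if den > 0 else 0.0


# Toy logits from two camera classifiers (2 and 3 pseudo-classes).
sample_a = score_vector([[4.0, 0.0], [0.0, 3.0, 0.0]])
sample_b = score_vector([[3.5, 0.2], [0.1, 2.8, 0.0]])  # responds like sample_a
sample_c = score_vector([[0.0, 4.0], [3.0, 0.0, 0.0]])  # responds differently

sim_ab = score_similarity(sample_a, sample_b)
sim_ac = score_similarity(sample_a, sample_c)
print(f"a vs b: {sim_ab:.3f}, a vs c: {sim_ac:.3f}")
```

In this toy setup, samples that trigger the same pseudo-classes on every camera classifier end up with a higher similarity than samples that trigger different ones, which is the property the inter-camera stage relies on when grouping samples across cameras.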

CVPR 2021

Datasets

Results from the Paper

Ranked #1 on Person Re-Identification on SYSU-30k (using extra training data)

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank | Uses Extra Training Data |
|---|---|---|---|---|---|---|
| Unsupervised Person Re-Identification | DukeMTMC-reID | IICS | Rank-1 | 80.0 | # 1 | |
| Unsupervised Person Re-Identification | Market-1501 | IICS | Rank-1 | 89.5 | # 16 | |
| Unsupervised Person Re-Identification | Market-1501 | IICS | mAP | 72.9 | # 15 | |
| Unsupervised Person Re-Identification | Market-1501 | IICS | Rank-10 | 97.0 | # 12 | |
| Unsupervised Person Re-Identification | Market-1501 | IICS | Rank-5 | 95.2 | # 12 | |
| Person Re-Identification | SYSU-30k | IICS (generalization) | Rank-1 | 36.0 | # 1 | Yes |

Market-1501

DukeMTMC-reID

SYSU-30k