Dynamics of Local Elasticity During Training of Neural Nets

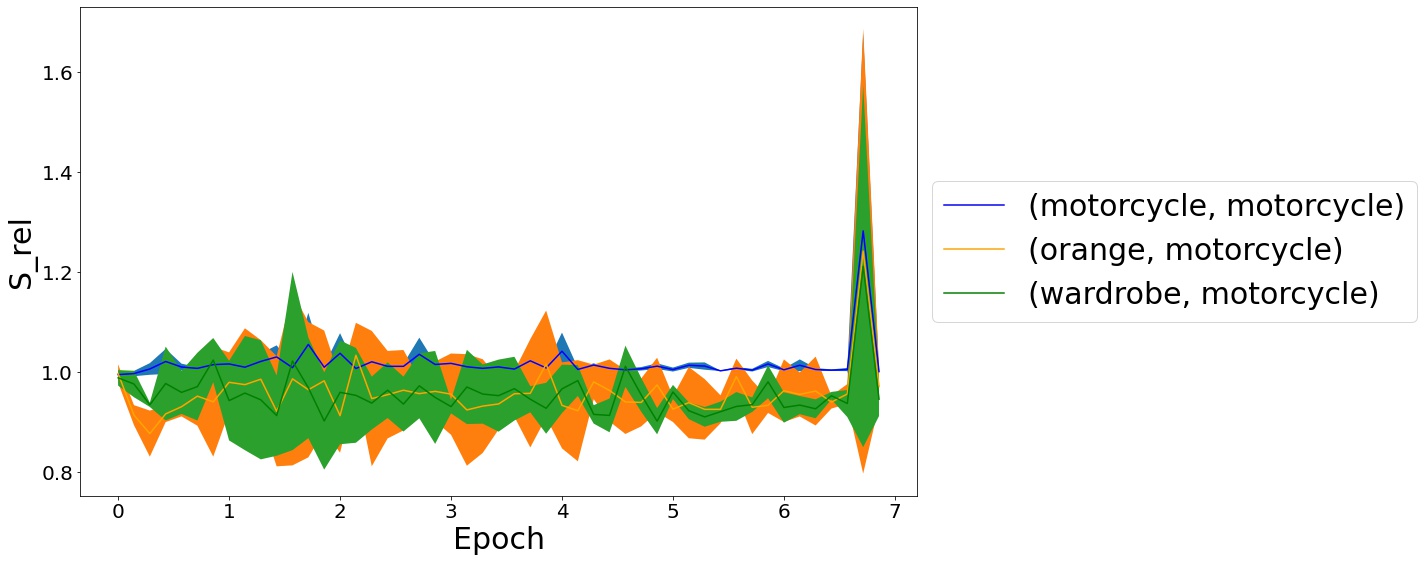

Recently, a property of neural training trajectories in weight space was isolated: that of "local elasticity" (denoted as $S_{\rm rel}$). Local elasticity attempts to quantify how the influence of a sampled data point propagates to the prediction at another data point. In this work, we undertake a comprehensive study of the existing notion of $S_{\rm rel}$ and also propose a new definition that addresses the limitations we point out for the original definition in the classification setting. In training various state-of-the-art neural networks on SVHN, CIFAR-10 and CIFAR-100, we demonstrate that our new proposal of $S_{\rm rel}$, as opposed to the original definition, much more sharply detects the tendency of the weight updates to prefer making prediction changes within the same class as the sampled data. In neural regression experiments we demonstrate that the original $S_{\rm rel}$ reveals a two-phase behavior: training proceeds via an initial elastic phase in which $S_{\rm rel}$ changes rapidly and an eventual inelastic phase in which $S_{\rm rel}$ remains large. We show that some of these properties can be analytically reproduced in various instances of regression via gradient flows on model predictor classes.
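To make the idea of "influence of a sampled data point on the prediction at another data point" concrete, here is a minimal sketch. The abstract does not state a formula, so the ratio used below (prediction change at the other point divided by prediction change at the sampled point, after one SGD step) is an assumption for illustration only, and it is not the new definition proposed in this work. The linear model, the squared loss, and the names `predict`, `sgd_step` and `relative_change` are likewise hypothetical.

```python
def predict(w, x):
    """Linear predictor f(x; w) = <w, x> (toy model, assumed for illustration)."""
    return sum(wi * xi for wi, xi in zip(w, x))


def sgd_step(w, x, y, lr=0.1):
    """One SGD step on the squared loss 0.5 * (f(x; w) - y)^2 at the sample (x, y)."""
    residual = predict(w, x) - y
    # The gradient of the loss with respect to w is residual * x.
    return [wi - lr * residual * xi for wi, xi in zip(w, x)]


def relative_change(w, x, y, x_other, lr=0.1):
    """Assumed ratio: |change in f at x_other| / |change in f at x| after one step at x."""
    w_new = sgd_step(w, x, y, lr)
    change_at_x = abs(predict(w_new, x) - predict(w, x))
    change_at_other = abs(predict(w_new, x_other) - predict(w, x_other))
    if change_at_x == 0.0:
        raise ValueError("the update did not change the prediction at x")
    return change_at_other / change_at_x


w = [0.5, -0.25]           # current weights
x, y = [1.0, 0.0], 2.0     # sampled training point and its target

s_same = relative_change(w, x, y, [1.0, 0.0])  # same point as x
s_half = relative_change(w, x, y, [0.5, 3.0])  # partially aligned with x
s_orth = relative_change(w, x, y, [0.0, 1.0])  # orthogonal to x
print(s_same, s_half, s_orth)
```

For this toy linear model the ratio reduces to $|\langle x, x'\rangle| / \|x\|^2$, so it depends only on how aligned the two inputs are. Nonlinear networks need not behave this way, which is what makes tracking such a quantity over the course of training informative.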


CIFAR-10

CIFAR-100

SVHN
