Joint Learning of Neural Networks via Iterative Reweighted Least Squares

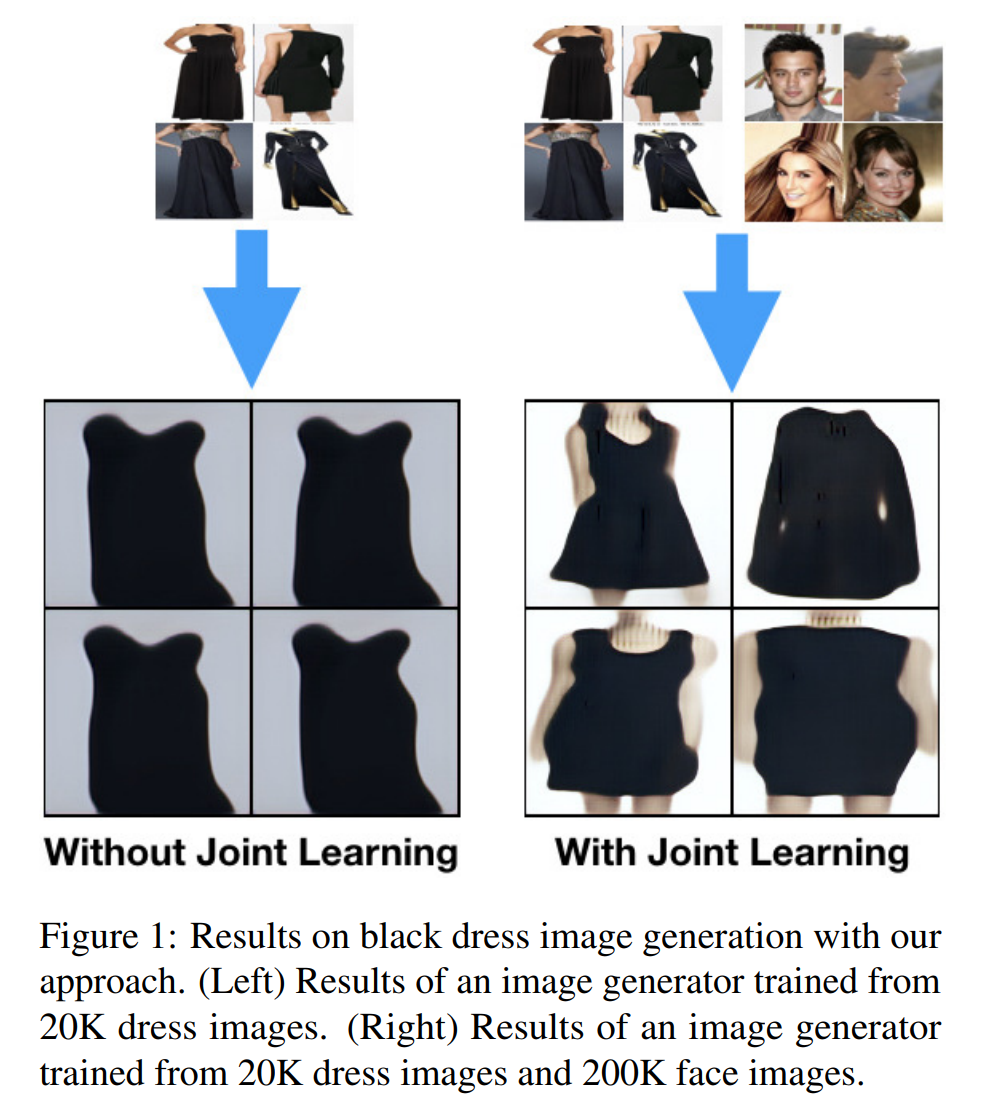

In this paper, we introduce the problem of jointly learning feed-forward neural networks across a set of related but diverse datasets. Compared to learning a separate network from each dataset in isolation, joint learning lets us extract correlated information across multiple datasets and significantly improve the quality of the learned networks. We formulate the problem as joint learning of multiple copies of the same network architecture, one per dataset, and enforce sharing of network weights across these copies. Rather than specifying by hand which layers are shared, we solve an optimization problem that automatically determines how layers should be shared between each pair of datasets. We demonstrate the effectiveness of our approach on three tasks: image classification, learning auto-encoders, and image generation. Experimental results show that it outperforms both baselines without joint learning and baselines that use pretraining and fine-tuning.
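
The abstract does not spell out the optimization problem, so the following is only a rough sketch of one common iterative reweighted least squares (IRLS) pattern that fits the description. It assumes an unsquared norm penalty on the per-layer weight difference between each pair of networks, which each IRLS iteration replaces with a weighted squared penalty. The function names `irls_weights` and `reweighted_penalty`, the `eps` floor, and the penalty form itself are hypothetical choices for illustration, not the paper's formulation.

```python
import numpy as np


def irls_weights(params, eps=1e-6):
    """Compute IRLS weights for every (network pair, layer).

    params: list over datasets; each entry is a list of per-layer arrays.
    Assumed rule (not from the paper): w = 1 / max(||theta_i^l - theta_j^l||, eps),
    so layers that are already close get a large weight and are pulled together.
    """
    weights = {}
    n = len(params)
    for i in range(n):
        for j in range(i + 1, n):
            for l, (a, b) in enumerate(zip(params[i], params[j])):
                dist = np.linalg.norm(a - b)
                weights[(i, j, l)] = 1.0 / max(dist, eps)
    return weights


def reweighted_penalty(params, weights):
    """Weighted squared surrogate: sum of 0.5 * w * ||theta_i^l - theta_j^l||^2."""
    total = 0.0
    for (i, j, l), w in weights.items():
        diff = params[i][l] - params[j][l]
        total += 0.5 * w * float(np.sum(diff ** 2))
    return total


# Toy example: two "networks" with two layers each.
# Layer 0 is identical across the pair; layer 1 differs by a vector of norm 5.
net_a = [np.array([1.0, 2.0]), np.array([0.0, 0.0])]
net_b = [np.array([1.0, 2.0]), np.array([3.0, 4.0])]

w = irls_weights([net_a, net_b])
penalty = reweighted_penalty([net_a, net_b], w)
```

In a full training loop under these assumptions, the surrogate penalty would be added to the sum of per-dataset losses, the network parameters updated with the weights held fixed, and the weights then recomputed. Pairs of layers whose weights grow large are effectively shared, while pairs that stay far apart remain dataset-specific.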
