Learnable Boundary Guided Adversarial Training

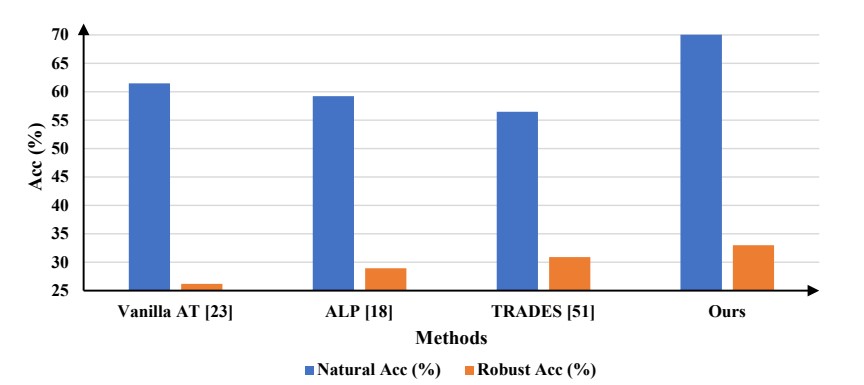

Previous adversarial training improves model robustness at the cost of accuracy on natural data. In this paper, we reduce this degradation of natural accuracy. We use the logits from a clean model to guide the learning of a robust model, considering that logits from a well-trained clean model embed the most discriminative features of natural data, e.g., a generalizable classifier boundary. Our solution is to constrain the logits of the robust model, which takes adversarial examples as input, to be similar to those of the clean model fed with the corresponding natural data. This lets the robust model inherit the classifier boundary of the clean model. Moreover, we observe that such boundary guidance not only preserves high natural accuracy but also benefits model robustness, which gives new insights and facilitates progress for the adversarial community. Finally, extensive experiments on CIFAR-10, CIFAR-100, and Tiny ImageNet demonstrate the effectiveness of our method. We achieve new state-of-the-art robustness on CIFAR-100, without additional real or synthetic data, under the auto-attack benchmark (https://github.com/fra31/auto-attack). Our code is available at https://github.com/dvlab-research/LBGAT.
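The logit constraint described in the abstract can be illustrated with a minimal sketch. It is not taken from the released code: the abstract does not say which similarity measure is used, so mean squared error is assumed here, and the function names, the `ce_weight` parameter, and the added cross-entropy term on the clean model are hypothetical choices made only for illustration.

```python
import math


def mse(a, b):
    """Mean squared error between two equal-length logit vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def cross_entropy(logits, label):
    """Softmax cross-entropy for a single example (numerically stable)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def boundary_guided_loss(robust_logits_adv, clean_logits_nat, label, ce_weight=1.0):
    """Illustrative loss for one example (assumed form, not the paper's exact loss).

    robust_logits_adv: logits of the robust model on the adversarial example.
    clean_logits_nat:  logits of the clean model on the matching natural example.
    """
    # Guidance term: pull the robust model's logits on adversarial input
    # toward the clean model's logits on natural input (MSE is an assumption).
    guidance = mse(robust_logits_adv, clean_logits_nat)
    # Assumed extra term: keep the clean model accurate on natural data.
    clean_ce = cross_entropy(clean_logits_nat, label)
    return guidance + ce_weight * clean_ce
```

When the two logit vectors coincide, the guidance term vanishes and only the clean model's classification term remains, which matches the intuition that the robust model then shares the clean model's decision boundary on that example.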

ICCV 2021

ImageNet

CIFAR-100
