Learning Conditional Attributes for Compositional Zero-Shot Learning

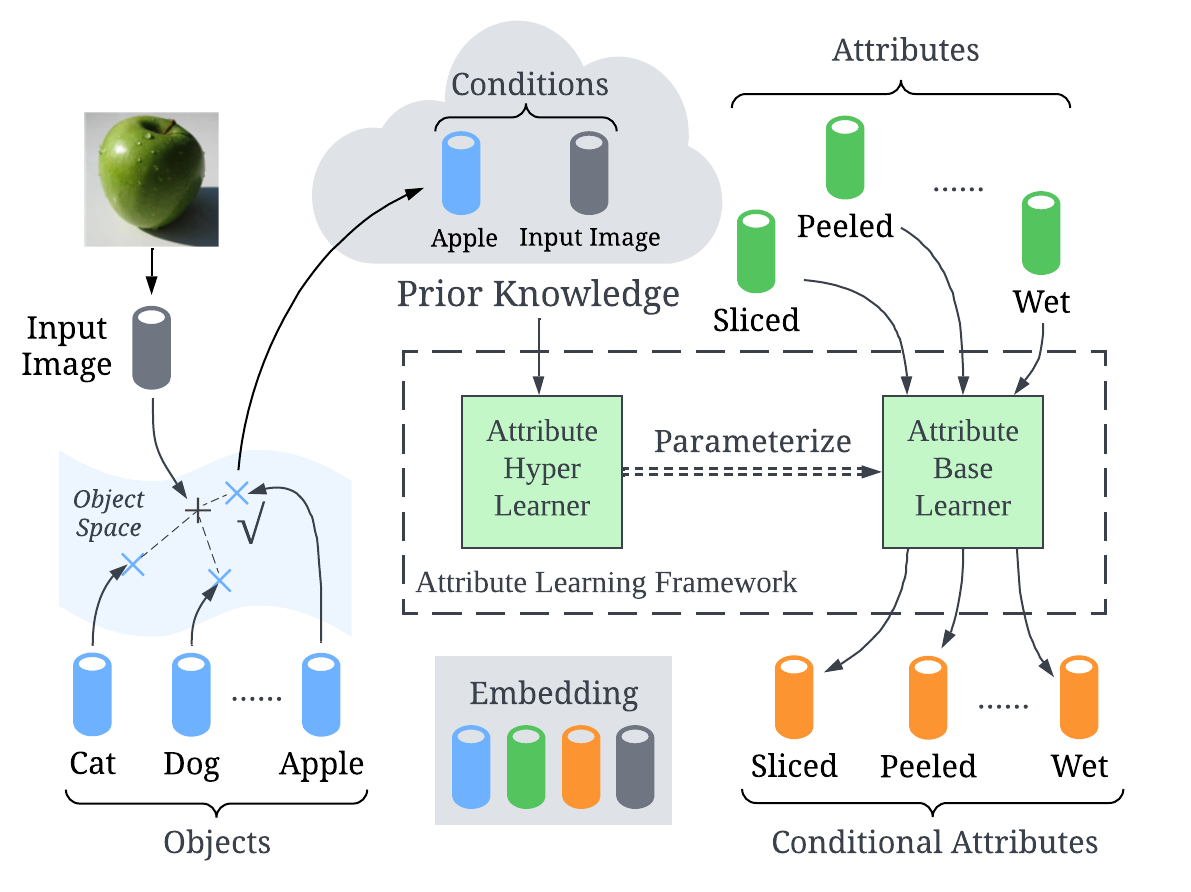

Compositional Zero-Shot Learning (CZSL) aims to train models to recognize novel compositional concepts based on learned concepts such as attribute-object combinations. One of the challenges is to model attributes interacted with different objects, e.g., the attribute ``wet" in ``wet apple" and ``wet cat" is different. As a solution, we provide analysis and argue that attributes are conditioned on the recognized object and input image and explore learning conditional attribute embeddings by a proposed attribute learning framework containing an attribute hyper learner and an attribute base learner. By encoding conditional attributes, our model enables to generate flexible attribute embeddings for generalization from seen to unseen compositions. Experiments on CZSL benchmarks, including the more challenging C-GQA dataset, demonstrate better performances compared with other state-of-the-art approaches and validate the importance of learning conditional attributes. Code is available at https://github.com/wqshmzh/CANet-CZSL

PDF Abstract CVPR 2023 PDF CVPR 2023 Abstract

MIT-States

MIT-States

UT Zappos50K

UT Zappos50K