MDSC: Towards Evaluating the Style Consistency Between Music and Dance

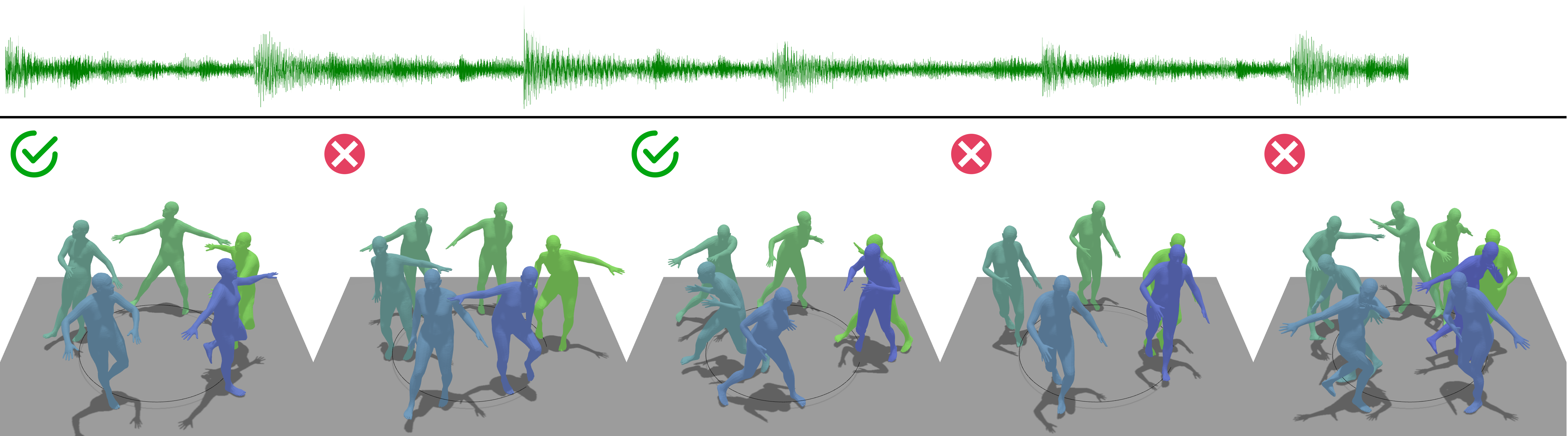

We propose MDSC (Music-Dance-Style Consistency), the first evaluation metric that assesses how well dance moves and music match in style. Existing metrics evaluate only motion fidelity, diversity, and the degree of rhythmic matching between music and dance. MDSC instead measures how stylistically correlated the generated dance motion sequences and the conditioning music sequences are. We found that directly measuring the embedding distance between motion and music is not an optimal solution, so we model the task as a clustering problem. Specifically, 1) we pre-train a music encoder and a motion encoder; 2) we learn to map and align the motion and music embeddings in a joint space by jointly minimizing the intra-cluster distance and maximizing the inter-cluster distance; and 3) for evaluation, we encode the dance moves into embeddings and measure the intra-cluster and inter-cluster distances, as well as the ratio between them. We evaluate our metric on the results of several music-conditioned motion generation methods. Combined with a user study, these results show that the proposed metric is robust in measuring music-dance style correlation.
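The evaluation step (3) can be illustrated with a minimal sketch. The abstract does not specify how the distances are computed, so the choices here are assumptions: Euclidean distance, intra-cluster distance taken as the mean distance from each embedding to its style centroid, and inter-cluster distance taken as the mean pairwise distance between style centroids. The function name `cluster_distances` and the toy data are hypothetical, not taken from the paper.

```python
import numpy as np


def cluster_distances(embeddings, labels):
    """Return (intra, inter, intra / inter) for labeled embeddings.

    Assumed definitions (not from the paper):
      intra: mean Euclidean distance from each sample to its own cluster centroid
      inter: mean Euclidean distance between all pairs of distinct centroids
    """
    embeddings = np.asarray(embeddings, dtype=float)
    labels = np.asarray(labels)

    # np.unique returns the sorted distinct style labels.
    classes = np.unique(labels)
    if len(classes) < 2:
        raise ValueError("need at least two clusters to compute inter-cluster distance")

    # One centroid per style cluster, in the same order as `classes`.
    centroids = np.stack([embeddings[labels == c].mean(axis=0) for c in classes])

    # Map every sample to the row index of its own centroid.
    own = np.searchsorted(classes, labels)
    intra = np.linalg.norm(embeddings - centroids[own], axis=1).mean()

    # Pairwise centroid distances; keep only the upper triangle (i < j).
    pairwise = np.linalg.norm(centroids[:, None, :] - centroids[None, :, :], axis=-1)
    upper = np.triu_indices(len(classes), k=1)
    inter = pairwise[upper].mean()

    return intra, inter, intra / inter


# Toy example: two well-separated "style" clusters in a 2-D embedding space.
toy_embeddings = [[0.0, 0.0], [0.0, 2.0], [10.0, 0.0], [10.0, 2.0]]
toy_labels = [0, 0, 1, 1]
intra, inter, ratio = cluster_distances(toy_embeddings, toy_labels)
print(intra, inter, ratio)
```

Under these assumed definitions, a lower intra-to-inter ratio means that embeddings of the same style sit close together relative to the spacing between styles, which is the behavior the training objective in step (2) encourages.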
