Measuring Word Significance using Distributed Representations of Words

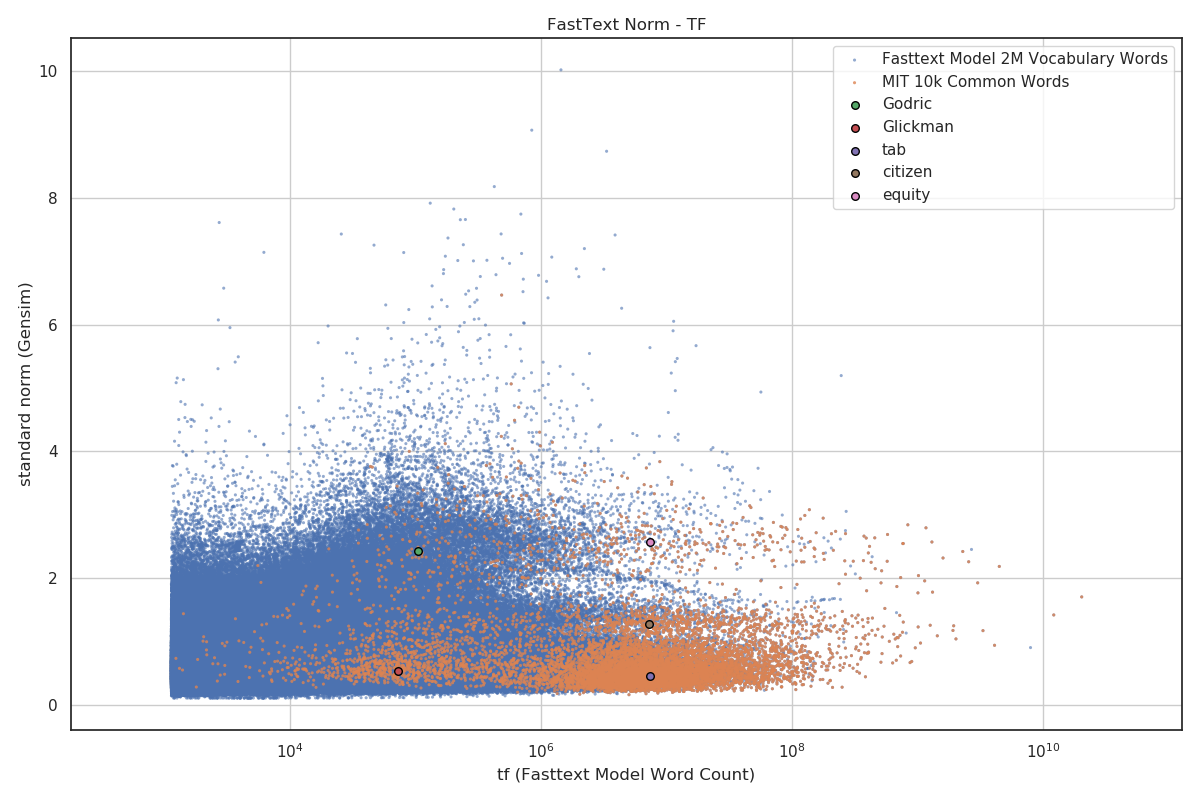

Distributed representations of words as real-valued vectors in a relatively low-dimensional space aim to extract syntactic and semantic features from large text corpora. A recently introduced neural network, named word2vec (Mikolov et al., 2013a; Mikolov et al., 2013b), was shown to encode semantic information in the direction of the word vectors. This brief report proposes using the length of the vectors, together with the term frequency, as a measure of word significance in a corpus. Experimental evidence from a domain-specific corpus of abstracts is presented to support this proposal. A useful visualization technique for text corpora emerges, in which words are mapped onto a two-dimensional plane and automatically ranked by significance.
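As a rough illustration of the two quantities the abstract combines, the sketch below pairs each word's term frequency with the Euclidean length of its vector. The function name `significance_points`, the toy tokens, and the toy vectors are all hypothetical; in practice the vectors would come from a trained word2vec model, and the abstract does not specify how the two quantities are combined or plotted, so this only computes the raw pair per word.

```python
import math
from collections import Counter


def significance_points(tokens, vectors):
    """Map each word to (term_frequency, vector_length).

    tokens:  list of word tokens from the corpus
    vectors: dict mapping word -> list of floats (assumed to be
             word embeddings, e.g. from a trained word2vec model)
    Words without a vector are skipped.
    """
    counts = Counter(tokens)
    points = {}
    for word, count in counts.items():
        if word in vectors:
            # Euclidean (L2) length of the word vector
            length = math.sqrt(sum(x * x for x in vectors[word]))
            points[word] = (count, length)
    return points


# Toy data, invented for illustration only
tokens = ["the", "quark", "the", "model", "the", "quark"]
vectors = {
    "the": [0.1, 0.1],
    "quark": [3.0, 4.0],
    "model": [1.0, 0.0],
}

points = significance_points(tokens, vectors)
for word, (tf, length) in sorted(points.items()):
    print(f"{word}: tf={tf}, length={length:.3f}")
```

Each `(term_frequency, vector_length)` pair could then serve as a coordinate for a two-dimensional scatter plot of the vocabulary, which is one plausible reading of the visualization mentioned above.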
