Model-Based Planning with Discrete and Continuous Actions

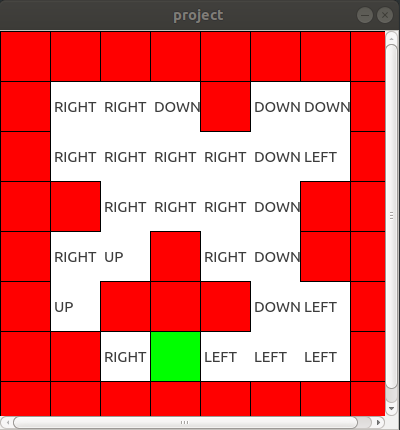

Action planning using learned and differentiable forward models of the world is a general approach which has a number of desirable properties, including improved sample complexity over model-free RL methods, reuse of learned models across different tasks, and the ability to perform efficient gradient-based optimization in continuous action spaces. However, this approach does not apply straightforwardly when the action space is discrete. In this work, we show that it is in fact possible to effectively perform planning via backprop in discrete action spaces, using a simple parameterization of the action vectors on the simplex combined with input noise when training the forward model. Our experiments show that this approach can match or outperform model-free RL and discrete planning methods on gridworld navigation tasks in terms of performance and/or planning time while using limited environment interactions, and can additionally be used to perform model-based control in a challenging new task where the action space combines discrete and continuous actions. We furthermore propose a policy distillation approach that yields a fast policy network for use at inference time, removing the need for an iterative planning procedure.
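To make the idea of planning via backprop over simplex-parameterized actions concrete, the following is a minimal sketch and not the paper's implementation. It assumes a hypothetical 1-D chain world with three actions (left, stay, right) and a hand-written linear `forward_model` standing in for a learned network; the names `DISPLACEMENTS`, `forward_model`, and `plan` are illustrative only. Each action is a softmax over logits, so it lies on the simplex, and the logits are optimized by gradient descent on the squared distance to the goal. The gradient is written out by hand instead of using an autodiff library, and the input noise used when training the forward model is not shown.

```python
import math

# Hypothetical toy setup: displacement caused by each discrete action
# (index 0 = left, 1 = stay, 2 = right). Not taken from the paper.
DISPLACEMENTS = [-1.0, 0.0, 1.0]


def softmax(logits):
    """Map unconstrained logits to a point on the probability simplex."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward_model(state, action_probs):
    """Stand-in for a learned differentiable model.

    Accepts a relaxed (non one-hot) action and returns the next state.
    """
    return state + sum(p * d for p, d in zip(action_probs, DISPLACEMENTS))


def plan(start, goal, horizon, steps=200, lr=0.5):
    """Optimize per-step action logits by gradient descent, then discretize."""
    logits = [[0.0] * len(DISPLACEMENTS) for _ in range(horizon)]
    for _ in range(steps):
        probs = [softmax(w) for w in logits]
        # Roll the model forward with the relaxed actions.
        state = start
        for p in probs:
            state = forward_model(state, p)
        error = state - goal  # loss = error ** 2
        # Backward pass: d(p . d)/d(w_k) = p_k * (d_k - p . d) for a softmax.
        for w, p in zip(logits, probs):
            mean_d = sum(pk * dk for pk, dk in zip(p, DISPLACEMENTS))
            for k, dk in enumerate(DISPLACEMENTS):
                w[k] -= lr * 2.0 * error * p[k] * (dk - mean_d)
    # Discretize: pick the highest-scoring action at each step.
    return [max(range(len(w)), key=w.__getitem__) for w in logits]


if __name__ == "__main__":
    print(plan(start=0.0, goal=3.0, horizon=3))
```

In this toy the goal is reachable only by moving right at every step, so the relaxed optimum sits near a vertex of the simplex and the argmax recovers a valid discrete plan. In general the relaxed optimum can lie in the interior of the simplex, where a model trained only on one-hot actions may behave unpredictably.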
