MVDG: A Unified Multi-view Framework for Domain Generalization

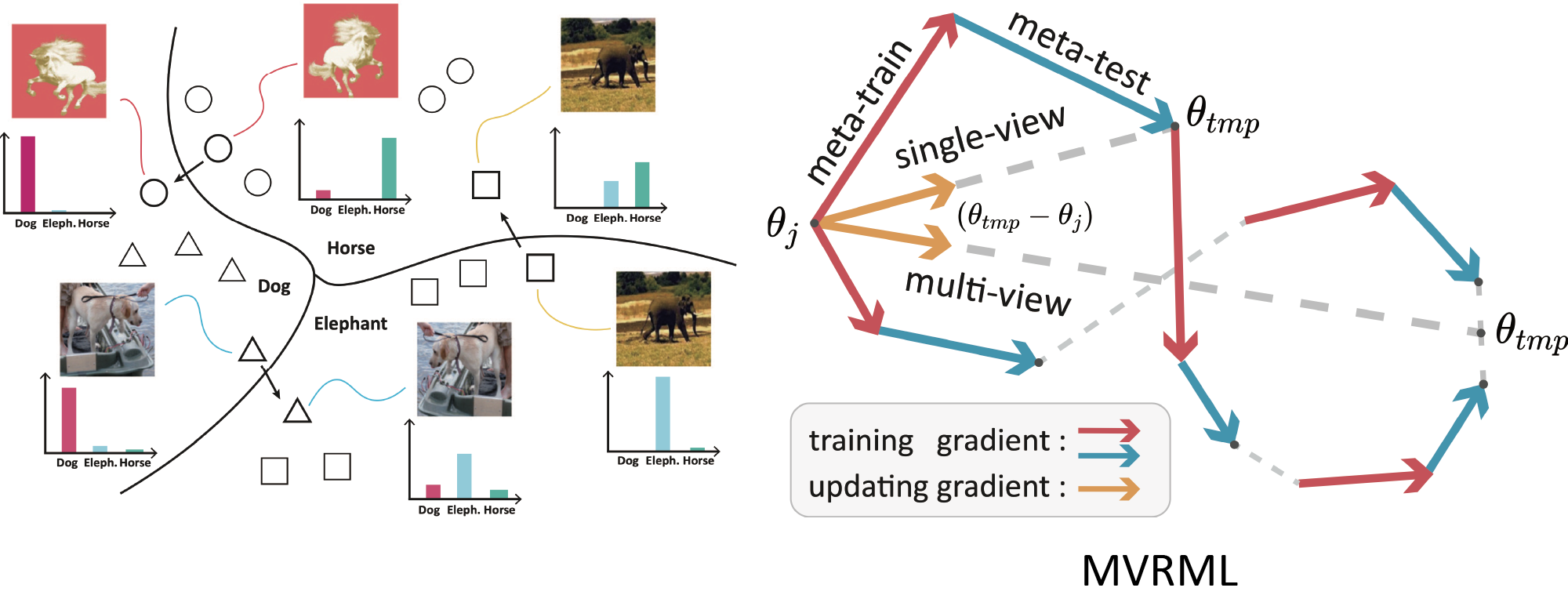

Domain generalization (DG), which aims to generalize a model trained on source domains to unseen target domains, has recently attracted considerable attention. Because target domains cannot be involved in training, overfitting to the source domains is inevitable. As a popular regularization technique, the meta-learning training scheme has shown its ability to resist overfitting. However, in the training stage, current meta-learning-based methods use only one task along a single optimization trajectory, which might produce a biased and noisy optimization direction. Beyond the training stage, overfitting could also cause unstable predictions in the test stage. In this paper, we propose a novel multi-view DG framework to effectively reduce overfitting in both the training and test stages. Specifically, in the training stage, we develop a multi-view regularized meta-learning algorithm that employs multiple optimization trajectories to produce a suitable optimization direction for model updating. We also show theoretically that the generalization bound can be reduced by increasing the number of tasks in each trajectory. In the test stage, we use multiple augmented images to yield a multi-view prediction that alleviates unstable predictions, which significantly improves model reliability. Extensive experiments on three benchmark datasets validate that our method can find a flat minimum to enhance generalization and outperform several state-of-the-art approaches.
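The test-stage idea of multi-view prediction can be illustrated with a minimal sketch: several augmented copies of one image are passed through the model, and the per-view class probabilities are averaged. This is not the authors' implementation. The names `multi_view_predict`, `toy_model`, the flip augmentations, and the fixed random linear classifier are all hypothetical and chosen only to keep the example self-contained; the abstract does not specify which augmentations or how many views are used.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def multi_view_predict(model_fn, image, augment_fns):
    """Average class probabilities over several augmented views of one image.

    model_fn:    maps an image to a list of class logits (assumed interface).
    augment_fns: list of functions, each mapping an image to an augmented view.
    """
    per_view = [softmax(model_fn(aug(image))) for aug in augment_fns]
    num_classes = len(per_view[0])
    return [
        sum(probs[k] for probs in per_view) / len(per_view)
        for k in range(num_classes)
    ]


# Hypothetical stand-in for a trained network: a fixed random linear classifier
# on a flattened 4x4 grayscale image with 3 classes.
rng = random.Random(0)
HEIGHT, WIDTH, NUM_CLASSES = 4, 4, 3
weights = [[rng.gauss(0, 1) for _ in range(NUM_CLASSES)] for _ in range(HEIGHT * WIDTH)]


def toy_model(image):
    flat = [pixel for row in image for pixel in row]
    return [
        sum(flat[i] * weights[i][k] for i in range(len(flat)))
        for k in range(NUM_CLASSES)
    ]


# Assumed augmentations: identity, horizontal flip, vertical flip.
augmentations = [
    lambda img: img,
    lambda img: [row[::-1] for row in img],
    lambda img: img[::-1],
]

image = [[rng.random() for _ in range(WIDTH)] for _ in range(HEIGHT)]
avg_probs = multi_view_predict(toy_model, image, augmentations)
predicted_class = max(range(NUM_CLASSES), key=lambda k: avg_probs[k])
```

Averaging probabilities rather than taking a single forward pass means that one view on which the model is unstable has less influence on the final decision, which is the intuition behind the reliability claim above.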

ImageNet

Office-Home

PACS
