Fisher Task Distance and Its Application in Neural Architecture Search

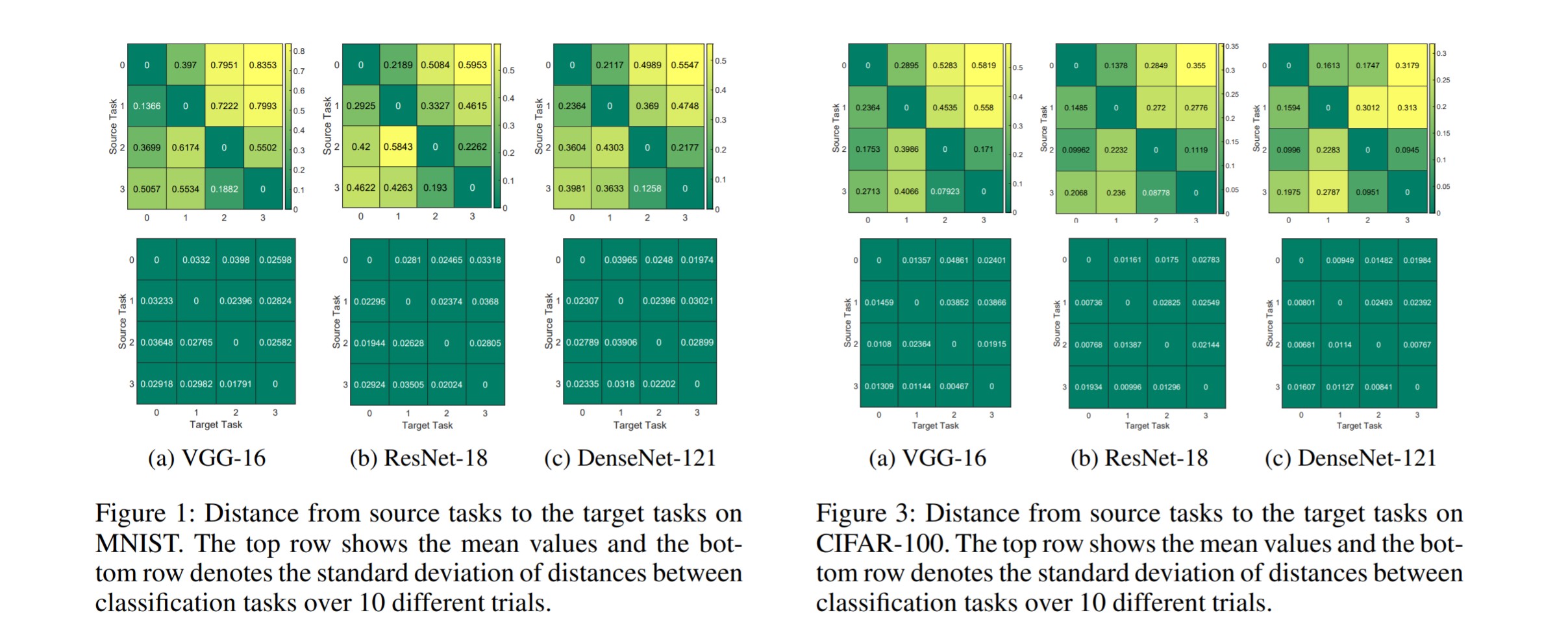

We formulate an asymmetric (non-commutative) distance between tasks based on Fisher Information Matrices, called the Fisher task distance. This distance represents the complexity of transferring knowledge from one task to another. We establish the consistency of the distance through theorems and through experiments on various classification tasks from the MNIST, CIFAR-10, CIFAR-100, ImageNet, and Taskonomy datasets. Next, we construct an online neural architecture search framework based on the Fisher task distance, in which we have access to previously learned tasks. Using the distance, we identify the learned tasks closest to the target task and reuse the knowledge learned from these related tasks for the target task. We then show how the distance between a target task and a set of learned tasks can be used to reduce the neural architecture search space for the target task. The search space for a task-specific architecture is reduced by building on architectures already optimized for similar tasks, rather than running a full search that ignores this side information. Experimental results for tasks in the MNIST, CIFAR-10, CIFAR-100, and ImageNet datasets demonstrate the efficacy of the proposed approach and its improvements, in terms of performance and number of parameters, over other gradient-based search methods such as ENAS, DARTS, and PC-DARTS.
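To make the selection of the closest learned task concrete, the following is a minimal sketch, not the authors' implementation. The abstract does not state the formula, so the sketch assumes a diagonal empirical Fisher (mean of squared per-sample gradients, scaled to unit trace) and a Fréchet-style distance between the square roots of two such diagonals. The function names `diag_fisher`, `fisher_distance`, and `closest_task`, and the toy gradient arrays, are hypothetical. The asymmetry enters through the choice of network: for each learned task, both Fisher diagonals are assumed to come from the network trained on that learned task, evaluated once on its own data and once on the target data.

```python
import numpy as np

def diag_fisher(per_sample_grads):
    """Diagonal empirical Fisher (assumed form): mean of squared
    per-sample gradients, scaled so the entries sum to one."""
    g = np.asarray(per_sample_grads, dtype=float)
    f = np.mean(g ** 2, axis=0)
    return f / f.sum()

def fisher_distance(f_source, f_target):
    """Assumed distance: Euclidean norm of the difference of the
    element-wise square roots, divided by sqrt(2). For unit-trace
    inputs the result lies in [0, 1]."""
    diff = np.sqrt(f_source) - np.sqrt(f_target)
    return float(np.linalg.norm(diff) / np.sqrt(2.0))

def closest_task(distances):
    """Return the learned task with the smallest distance to the target."""
    return min(distances, key=distances.get)

# Toy per-sample gradients (hypothetical). For each learned task, "own" holds
# gradients of that task's network on its own data, and "target" holds
# gradients of the same network on the target task's data.
grads = {
    "A": {"own": [[1.0, 0.0], [1.0, 0.0]], "target": [[1.0, 0.1], [1.0, 0.1]]},
    "B": {"own": [[0.0, 1.0], [0.0, 1.0]], "target": [[1.0, 0.0], [1.0, 0.0]]},
}

distances = {
    name: fisher_distance(diag_fisher(g["own"]), diag_fisher(g["target"]))
    for name, g in grads.items()
}
best = closest_task(distances)
print(best, distances)
```

In this toy setup the network of task A responds to the target data almost as it does to its own data, so A is selected. In a search framework of the kind described above, the architecture optimized for the selected task would then serve as the starting point for a restricted search on the target task.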

CIFAR-10

ImageNet

CIFAR-100

MNIST

Taskonomy
