On Intrinsic Dataset Properties for Adversarial Machine Learning

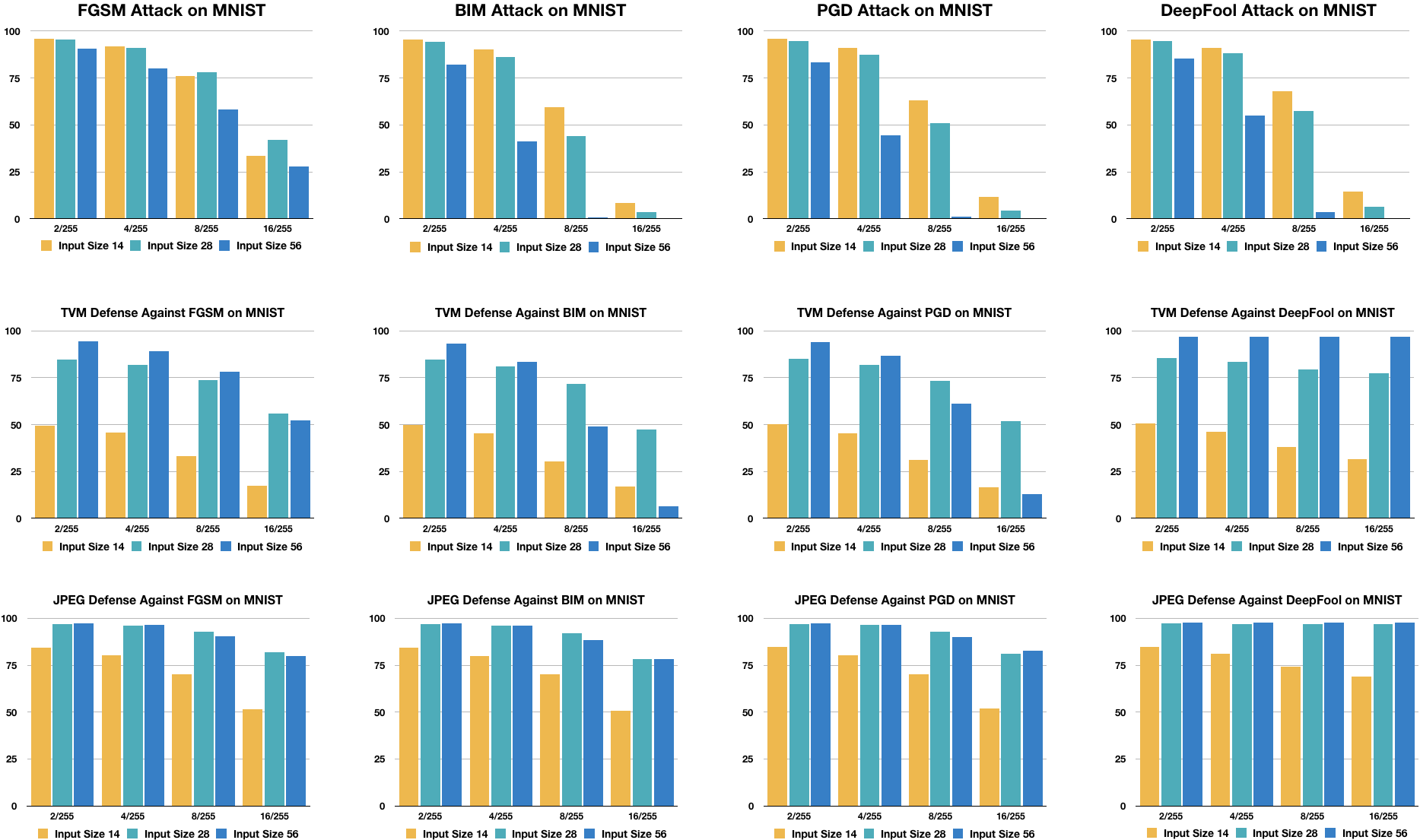

Deep neural networks (DNNs) have played a key role in a wide range of machine learning applications. However, DNN classifiers are vulnerable to human-imperceptible adversarial perturbations, which can cause them to misclassify inputs with high confidence. Creating robust DNNs that can defend against such malicious examples is therefore critical in security-sensitive applications. In this paper, we study the effect of intrinsic dataset properties on the performance of adversarial attack and defense methods, testing on five popular image classification datasets: MNIST, Fashion-MNIST, CIFAR10, CIFAR100, and ImageNet. We find that input size and image contrast play key roles in attack and defense success. Our findings highlight that dataset design and data preprocessing are important for improving the adversarial robustness of DNNs. To the best of our knowledge, this is the first comprehensive work that studies the effect of intrinsic dataset properties on adversarial machine learning.
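To make the two central notions concrete, the following is a minimal sketch, not the paper's experimental setup. It assumes a one-step sign-gradient (FGSM-style) attack on a toy logistic model and a simple mean-centered contrast rescaling; the abstract does not name the attacks, defenses, or contrast transform actually used, so the function names `adjust_contrast` and `fgsm_linear`, the random weights, and the budget `eps` are all illustrative assumptions.

```python
import numpy as np

def adjust_contrast(image, factor):
    """Scale pixel deviations from the mean intensity by `factor`, clipped to [0, 1].

    Hypothetical helper: one simple way to vary image contrast.
    """
    mean = image.mean()
    return np.clip(mean + factor * (image - mean), 0.0, 1.0)

def fgsm_linear(x, y, w, b, eps):
    """One-step sign-gradient perturbation for a logistic model (assumed attack).

    x: flattened image in [0, 1]; y: label in {0, 1}; w, b: model parameters.
    Each pixel moves by at most `eps`, so the change stays small in the L-inf norm.
    """
    p = 1.0 / (1.0 + np.exp(-(w @ x + b)))  # predicted probability of class 1
    grad_x = (p - y) * w                     # gradient of cross-entropy loss w.r.t. x
    return np.clip(x + eps * np.sign(grad_x), 0.0, 1.0)

# Toy data: an 8x8 "image" flattened to 64 pixels, with random model weights.
rng = np.random.default_rng(0)
w = rng.normal(size=64)
b = 0.0
x = rng.uniform(0.2, 0.8, size=64)
y = 1

x_adv = fgsm_linear(x, y, w, b, eps=0.05)  # perturbed input
low_contrast = adjust_contrast(x, 0.5)     # same image at half the contrast
```

Here the perturbation pushes the logit `w @ x + b` away from the true label while changing no pixel by more than `eps`. Applying the attack to `low_contrast` instead of `x` gives a toy way to explore how contrast interacts with a fixed perturbation budget.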

ImageNet

Fashion-MNIST
