PatchGuard++: Efficient Provable Attack Detection against Adversarial Patches

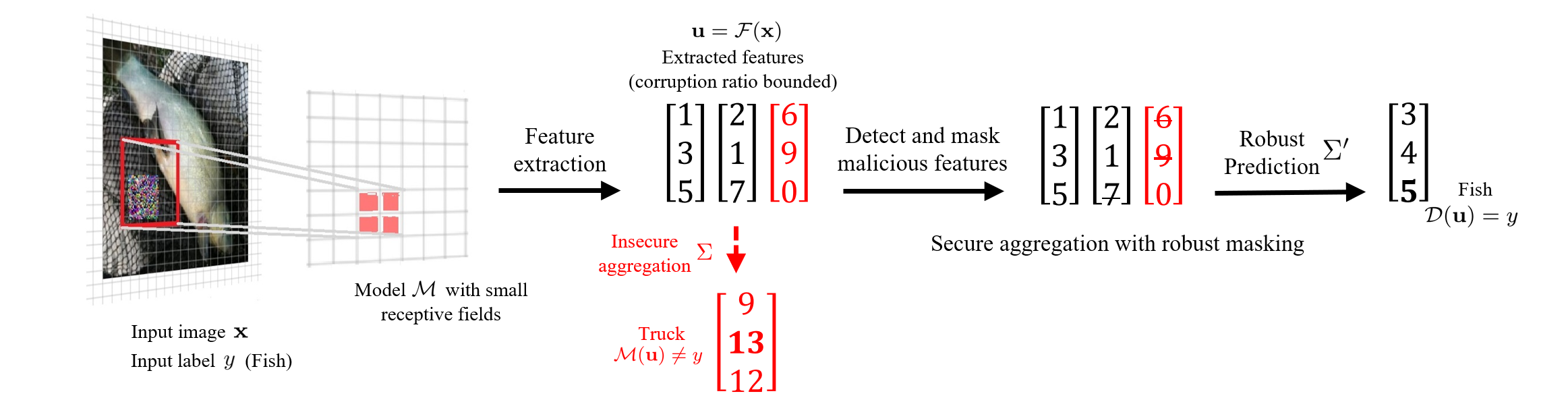

An adversarial patch can arbitrarily manipulate image pixels within a restricted region to induce model misclassification. The threat of this localized attack has gained significant attention because the adversary can mount a physically-realizable attack by attaching patches to the victim object. Recent provably robust defenses generally follow the PatchGuard framework by using CNNs with small receptive fields and secure feature aggregation for robust model predictions. In this paper, we extend PatchGuard to PatchGuard++ for provably detecting the adversarial patch attack to boost both provable robust accuracy and clean accuracy. In PatchGuard++, we first use a CNN with small receptive fields for feature extraction so that the number of features corrupted by the adversarial patch is bounded. Next, we apply masks in the feature space and evaluate predictions on all possible masked feature maps. Finally, we extract a pattern from all masked predictions to catch the adversarial patch attack. We evaluate PatchGuard++ on ImageNette (a 10-class subset of ImageNet), ImageNet, and CIFAR-10 and demonstrate that PatchGuard++ significantly improves the provable robustness and clean performance.

PDF Abstract

CIFAR-10

CIFAR-10

ImageNet

ImageNet