PointOdyssey: A Large-Scale Synthetic Dataset for Long-Term Point Tracking

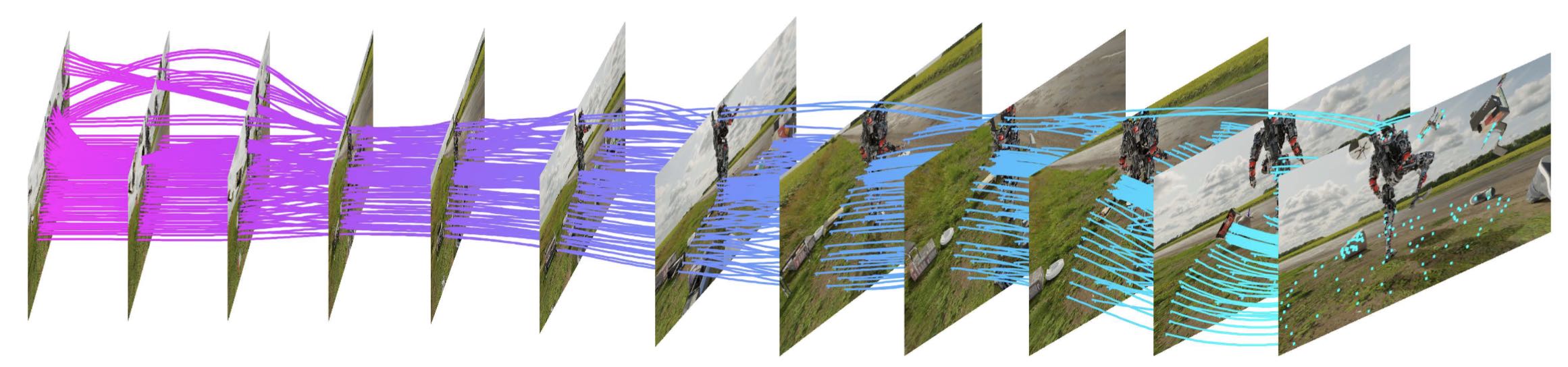

We introduce PointOdyssey, a large-scale synthetic dataset and data generation framework for the training and evaluation of long-term fine-grained tracking algorithms. Our goal is to advance the state of the art by placing emphasis on long videos with naturalistic motion. Toward the goal of naturalism, we animate deformable characters using real-world motion capture data, we build 3D scenes to match the motion capture environments, and we render camera viewpoints using trajectories mined via structure-from-motion on real videos. We create combinatorial diversity by randomizing character appearance, motion profiles, materials, lighting, 3D assets, and atmospheric effects. Our dataset currently includes 104 videos, averaging 2,000 frames long, with orders of magnitude more correspondence annotations than prior work. We show that existing methods can be trained from scratch on our dataset and outperform the published variants. Finally, we introduce modifications to the PIPs point tracking method, greatly widening its temporal receptive field, which improves its performance on PointOdyssey as well as on two real-world benchmarks. Our data and code are publicly available at: https://pointodyssey.com

Published at ICCV 2023.

Datasets

Introduced in the Paper:

PointOdyssey

Used in the Paper:

Kinetics

MPI Sintel

Kubric

DeformingThings4D

TAP-Vid
