Reconstructing Compact Building Models from Point Clouds Using Deep Implicit Fields

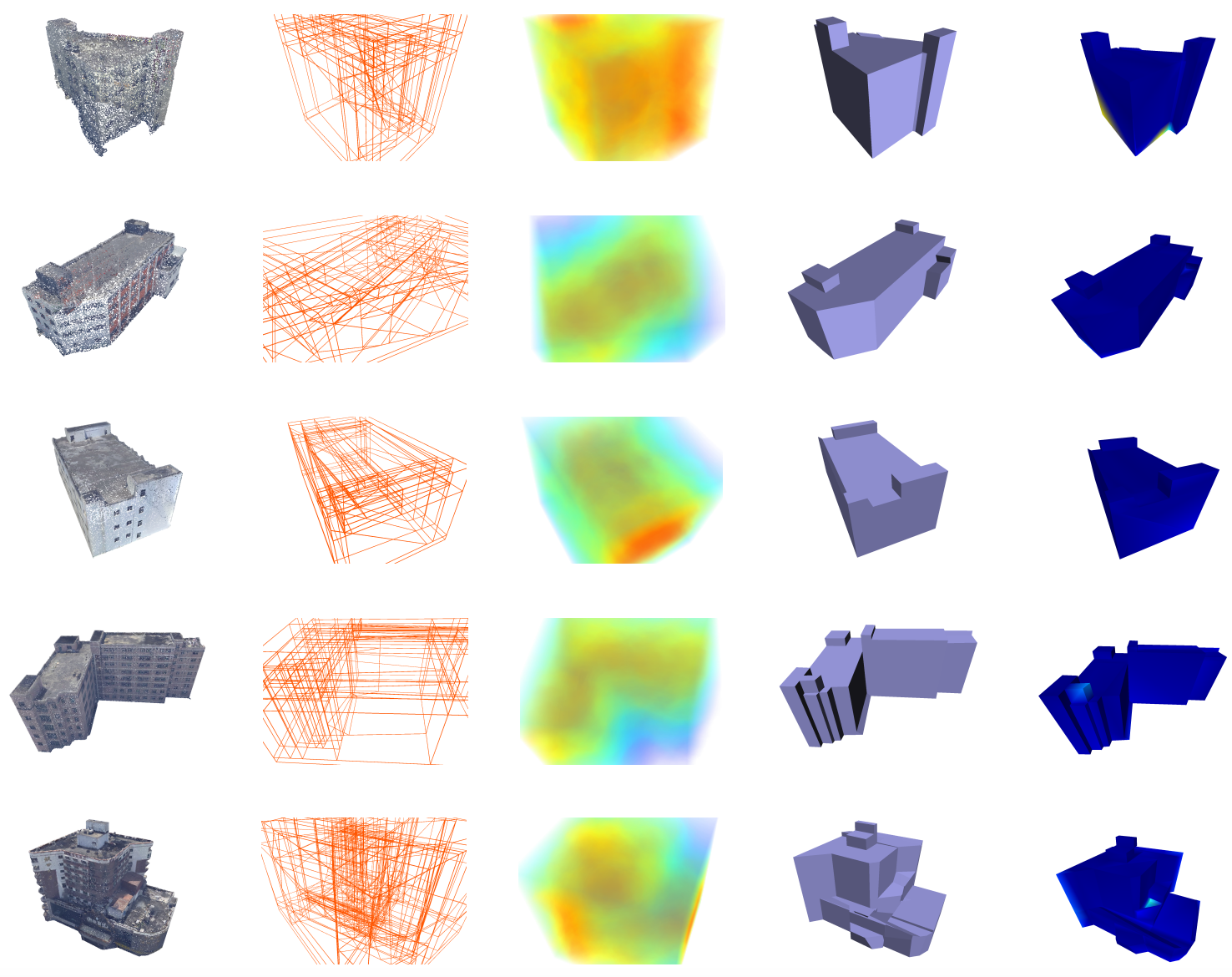

While three-dimensional (3D) building models play an increasingly pivotal role in many real-world applications, obtaining a compact representation of buildings remains an open problem. In this paper, we present a novel framework for reconstructing compact, watertight, polygonal building models from point clouds. Our framework comprises three components: (a) a cell complex is generated via adaptive space partitioning that provides a polyhedral embedding as the candidate set; (b) an implicit field is learned by a deep neural network that facilitates building occupancy estimation; (c) a Markov random field is formulated to extract the outer surface of a building via combinatorial optimization. We evaluate and compare our method with state-of-the-art methods in generic reconstruction, model-based reconstruction, geometry simplification, and primitive assembly. Experiments on both synthetic and real-world point clouds have demonstrated that, with our neural-guided strategy, high-quality building models can be obtained with significant advantages in fidelity, compactness, and computational efficiency. Our method also shows robustness to noise and insufficient measurements, and it can directly generalize from synthetic scans to real-world measurements. The source code of this work is freely available at https://github.com/chenzhaiyu/points2poly.

PDF Abstract