Recurrent Attention Model with Log-Polar Mapping is Robust against Adversarial Attacks

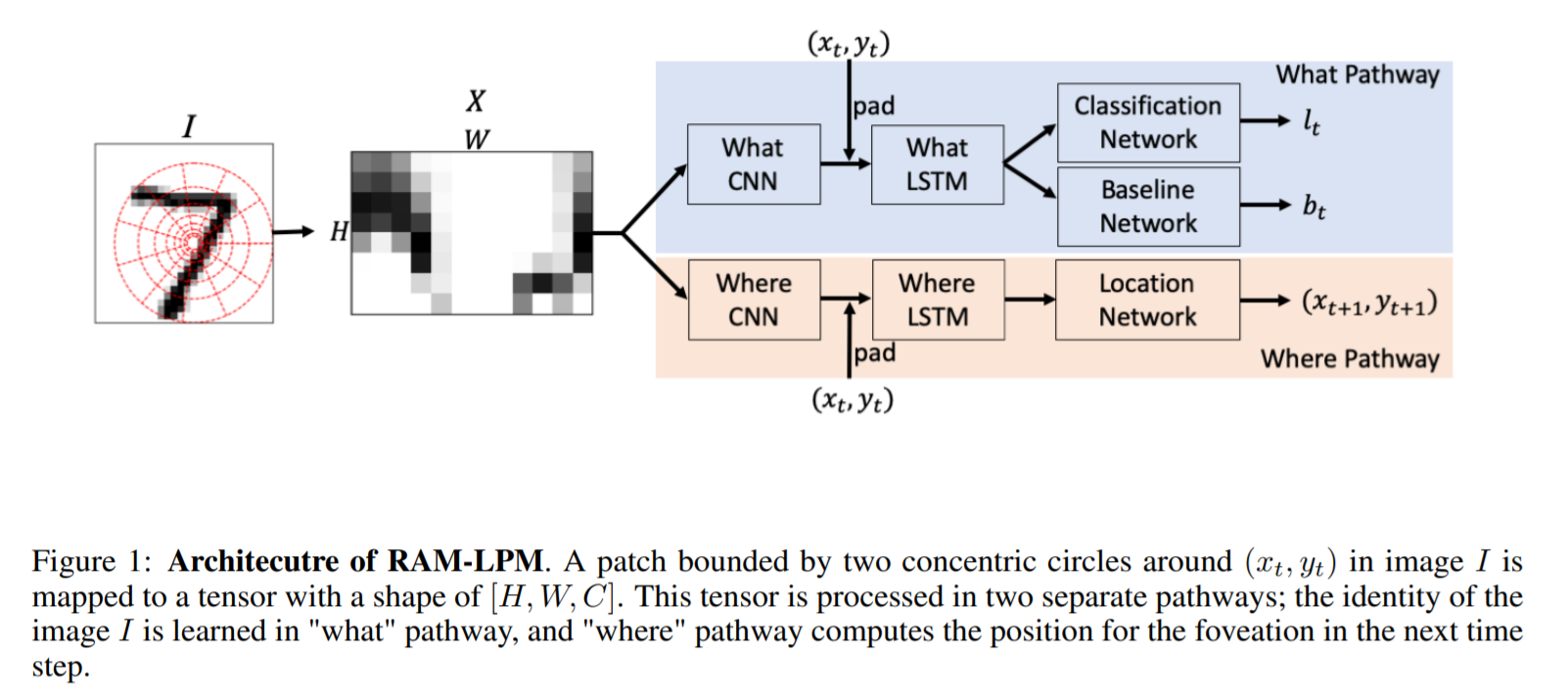

Convolutional neural networks are vulnerable to small $\ell^p$ adversarial attacks, whereas the human visual system is not. Inspired by neural networks in the eye and the brain, we developed a novel artificial neural network model that recurrently collects data with a log-polar field of view controlled by attention. We demonstrate the effectiveness of this design as a defense against SPSA and PGD adversarial attacks. The model also has beneficial properties observed in the animal visual system, such as reflex-like pathways for low-latency inference, a fixed amount of computation independent of image size, and rotation and scale invariance. The code for the experiments is available at https://gitlab.com/exwzd-public/kiritani_ono_2020.
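To illustrate what a log-polar field of view means in practice, the following is a minimal sketch of log-polar sampling around a fixation point. It is not the authors' implementation: the function name `log_polar_sample`, its parameters, and the nearest-neighbor lookup are all assumptions chosen for illustration. It shows why the amount of sampled data can stay fixed regardless of image size: the output grid always has `n_rings` by `n_wedges` entries.

```python
import numpy as np


def log_polar_sample(image, center, n_rings=16, n_wedges=32, r_min=1.0, r_max=None):
    """Sample a 2D grayscale image on a log-polar grid (hypothetical helper).

    Returns an array of shape (n_rings, n_wedges), whatever the image size.
    """
    h, w = image.shape
    cy, cx = center
    if r_max is None:
        r_max = min(h, w) / 2.0

    # Radii are spaced geometrically: dense near the fixation point,
    # sparse in the periphery.
    radii = np.geomspace(r_min, r_max, n_rings)
    # Angles are spaced uniformly around the full circle.
    angles = np.linspace(0.0, 2.0 * np.pi, n_wedges, endpoint=False)

    rr, aa = np.meshgrid(radii, angles, indexing="ij")

    # Nearest-neighbor lookup, clipped so samples stay inside the image.
    ys = np.clip(np.rint(cy + rr * np.sin(aa)).astype(int), 0, h - 1)
    xs = np.clip(np.rint(cx + rr * np.cos(aa)).astype(int), 0, w - 1)
    return image[ys, xs]


# Usage: two images of different sizes yield glimpses of the same shape.
small = log_polar_sample(np.ones((32, 32)), center=(16, 16))
large = log_polar_sample(np.ones((256, 256)), center=(128, 128))
```

In this representation, a rotation of the image about the fixation point corresponds approximately to a shift along the angle axis, and a rescaling corresponds approximately to a shift along the radius axis, which is one common motivation for log-polar mappings.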

ImageNet