Regularizing Deep Multi-Task Networks using Orthogonal Gradients

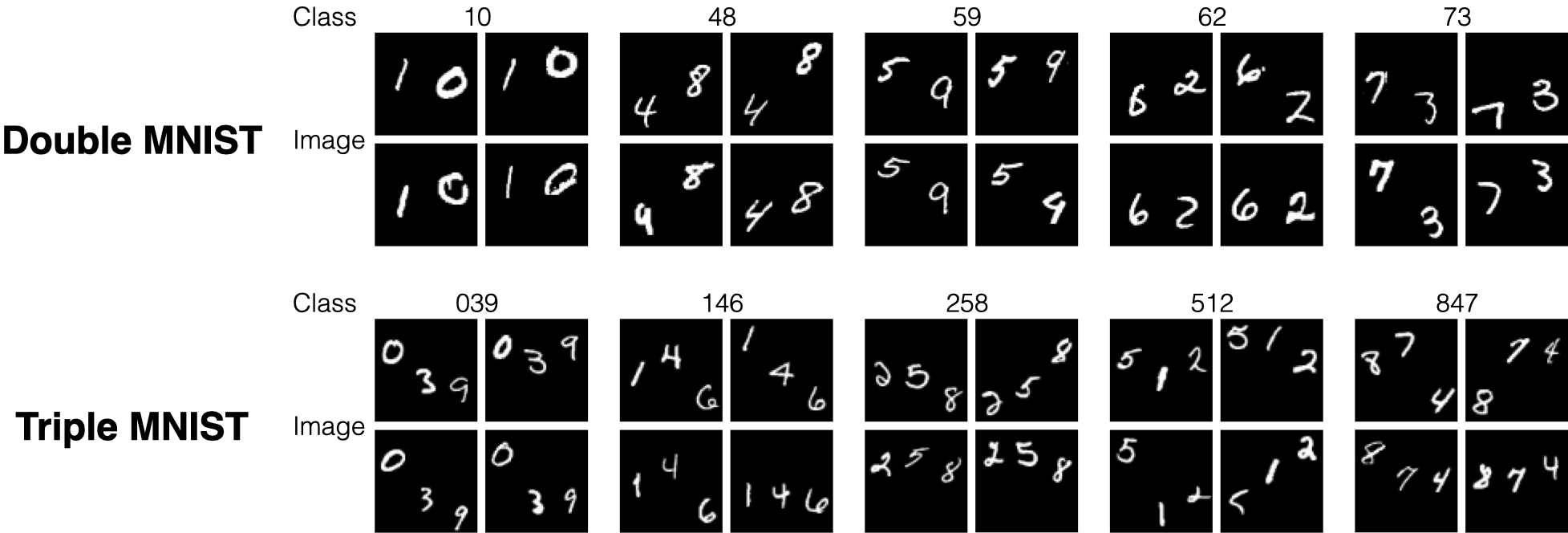

Deep neural networks are a promising approach towards multi-task learning because of their capability to leverage knowledge across domains and learn general purpose representations. Nevertheless, they can fail to live up to these promises as tasks often compete for a model's limited resources, potentially leading to lower overall performance. In this work we tackle the issue of interfering tasks through a comprehensive analysis of their training, derived from looking at the interaction between gradients within their shared parameters. Our empirical results show that well-performing models have low variance in the angles between task gradients and that popular regularization methods implicitly reduce this measure. Based on this observation, we propose a novel gradient regularization term that minimizes task interference by enforcing near orthogonal gradients. Updating the shared parameters using this property encourages task specific decoders to optimize different parts of the feature extractor, thus reducing competition. We evaluate our method with classification and regression tasks on the multiDigitMNIST, NYUv2 and SUN RGB-D datasets where we obtain competitive results.

PDF Abstract

NYUv2

NYUv2

SUN RGB-D

SUN RGB-D