Rotation Equivariance and Invariance in Convolutional Neural Networks

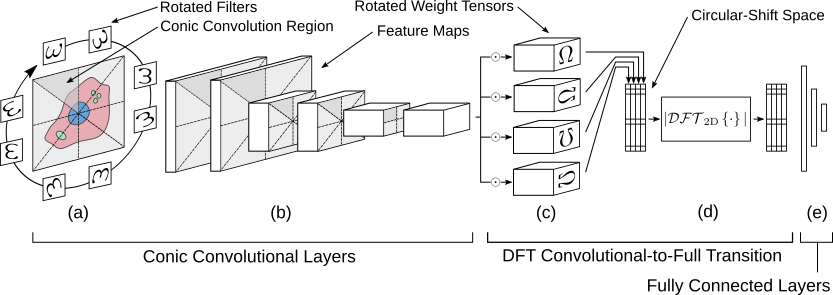

The performance of neural networks can be significantly improved by encoding invariances that are known to hold for a particular task. Many image classification tasks, such as those in cellular imaging, are invariant to rotation. We present a novel scheme that uses the magnitude response of the 2D discrete Fourier transform (2D-DFT) to encode rotational invariance in neural networks, along with a new, efficient convolutional scheme for encoding rotational equivariance throughout the convolutional layers. We implemented this scheme for several image classification tasks and demonstrated improved performance, in terms of classification accuracy, training time, and robustness to hyperparameter selection, over a standard CNN and another state-of-the-art method.
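
To make the role of the DFT magnitude concrete, the following is a minimal sketch and not the implementation described in the abstract. It rests on two simplifying assumptions: rotations are restricted to multiples of 90 degrees, and a 1D DFT taken over the rotation axis stands in for the 2D-DFT named above. The helper names (`correlate_valid`, `rotation_responses`, `invariant_features`) are hypothetical. The idea illustrated is that applying one filter at several orientations gives responses that shift cyclically when the input is rotated (equivariance), and that the DFT magnitude discards cyclic shifts (invariance).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def correlate_valid(image, kernel):
    """'Valid' 2D cross-correlation of image with kernel (no padding)."""
    windows = sliding_window_view(image, kernel.shape)  # shape (H', W', kh, kw)
    return np.einsum("ijab,ab->ij", windows, kernel)


def rotation_responses(image, kernel):
    """Globally max-pooled response to the kernel rotated by 0, 90, 180, 270 degrees."""
    return np.array(
        [correlate_valid(image, np.rot90(kernel, k)).max() for k in range(4)]
    )


def invariant_features(image, kernel):
    """Magnitude of the 1D DFT over the rotation axis (assumed stand-in for the 2D-DFT)."""
    return np.abs(np.fft.fft(rotation_responses(image, kernel)))


# Illustrative random data, not taken from the paper.
rng = np.random.default_rng(0)
image = rng.standard_normal((16, 16))
kernel = rng.standard_normal((3, 3))
rotated = np.rot90(image)  # input rotated by 90 degrees

# Equivariance: rotating the input cyclically shifts the orientation responses.
print(np.allclose(rotation_responses(rotated, kernel),
                  np.roll(rotation_responses(image, kernel), 1)))

# Invariance: the DFT magnitude is unchanged by that cyclic shift.
print(np.allclose(invariant_features(rotated, kernel),
                  invariant_features(image, kernel)))
```

Global max pooling is used here only to keep the example short; any pooling that commutes with rotation would show the same shift structure.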
