Scene Text Recognition with Permuted Autoregressive Sequence Models

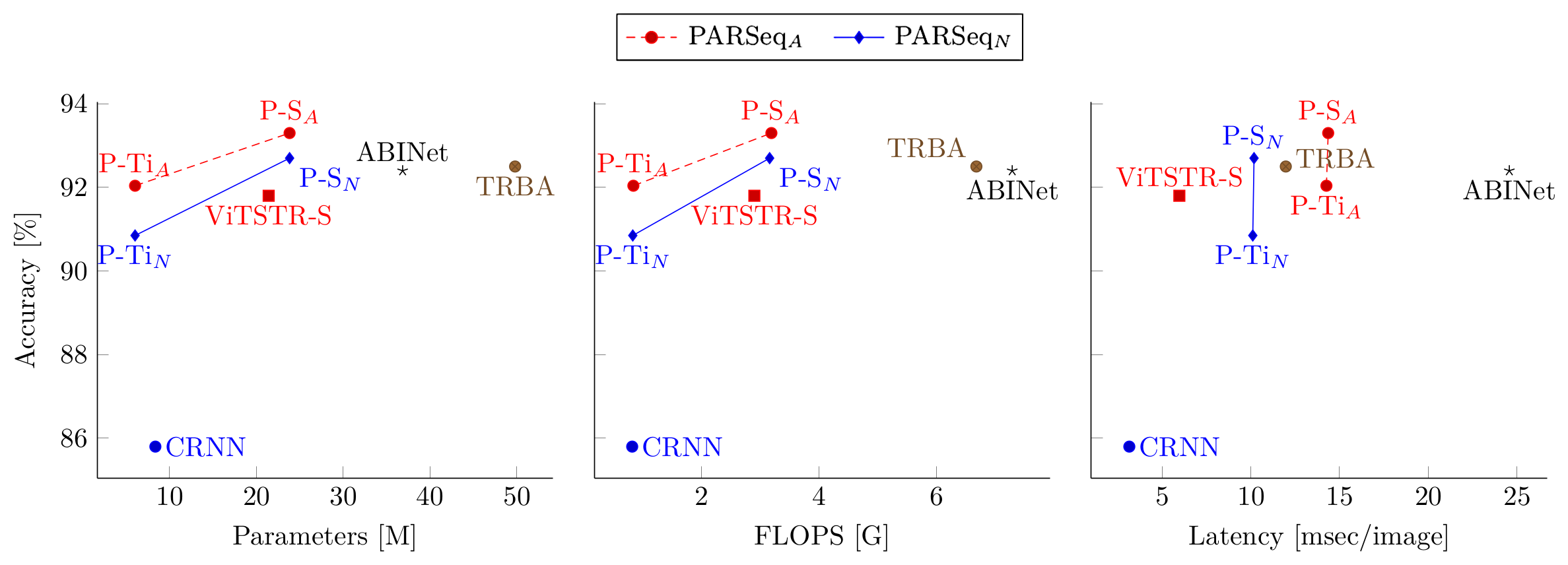

Context-aware scene text recognition (STR) methods typically use internal autoregressive (AR) language models (LMs). Inherent limitations of AR models motivated two-stage methods that employ an external LM. Because the external LM is conditionally independent of the input image, it may erroneously rectify correct predictions, leading to significant inefficiencies. Our method, PARSeq, learns an ensemble of internal AR LMs with shared weights using Permutation Language Modeling. It unifies context-free non-AR inference, context-aware AR inference, and iterative refinement using bidirectional context. Using synthetic training data, PARSeq achieves state-of-the-art (SOTA) results on STR benchmarks (91.9% accuracy) and on more challenging datasets. It establishes new SOTA results (96.0% accuracy) when trained on real data. PARSeq is optimal on accuracy vs. parameter count, FLOPS, and latency because of its simple, unified structure and parallel token processing. Due to its extensive use of attention, it is robust on arbitrarily oriented text, which is common in real-world images. Code, pretrained weights, and data are available at: https://github.com/baudm/parseq.
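
The idea of one shared-weight decoder covering several decoding modes can be made concrete with attention masks. The following is a minimal sketch, not the authors' implementation: it assumes that each factorization order is realized as a boolean mask over character positions, and it ignores special tokens such as start or end markers. The function names `permutation_mask`, `non_ar_mask`, and `refinement_mask` are hypothetical and chosen for illustration only.

```python
from typing import List, Sequence

Mask = List[List[bool]]


def permutation_mask(order: Sequence[int]) -> Mask:
    """Build a context mask for one factorization order.

    mask[i][j] is True when position j may be used as context while
    predicting position i, i.e. when j comes before i in `order`.
    """
    n = len(order)
    if sorted(order) != list(range(n)):
        raise ValueError("order must be a permutation of 0..n-1")
    # rank[p] is the step at which position p is predicted.
    rank = [0] * n
    for step, pos in enumerate(order):
        rank[pos] = step
    return [[rank[j] < rank[i] for j in range(n)] for i in range(n)]


def non_ar_mask(n: int) -> Mask:
    """Context-free decoding: no position sees any other position."""
    return [[False] * n for _ in range(n)]


def refinement_mask(n: int) -> Mask:
    """Bidirectional refinement (an assumption of this sketch):
    each position sees every position except itself."""
    return [[i != j for j in range(n)] for i in range(n)]


left_to_right = permutation_mask([0, 1, 2])
right_to_left = permutation_mask([2, 1, 0])
mixed = permutation_mask([1, 0, 2])
```

Under these assumptions, the ordering `[0, 1, 2]` yields the familiar strictly lower-triangular mask of a left-to-right AR decoder, while other orderings give the same model different contexts for the same target positions. The all-False and all-but-diagonal masks correspond to the context-free and bidirectional modes mentioned in the abstract.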


Results from the Paper

Ranked #4 on Scene Text Recognition on IC19-Art (using extra training data)

Datasets

ICDAR 2013

COCO-Text

SVT

TextOCR

RCTW-17

IIIT5k