PhIT-Net: Photo-consistent Image Transform for Robust Illumination Invariant Matching

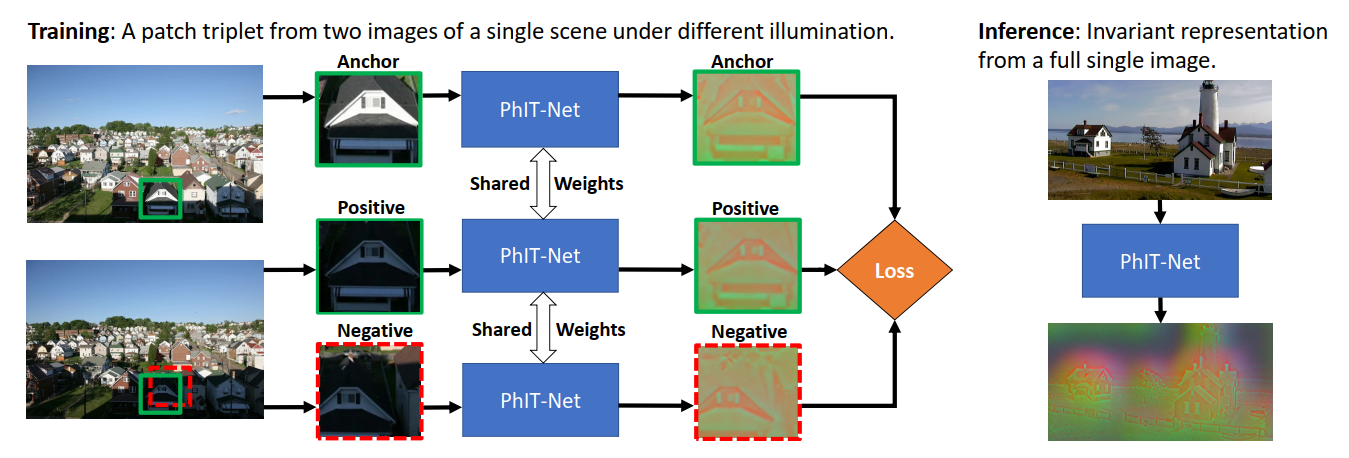

We propose a new and completely data-driven approach for generating a photo-consistent image transform. We show that simple classical algorithms that operate in the transform domain become extremely resilient to illumination changes. This considerably improves matching accuracy, outperforming both state-of-the-art invariant representations and new matching methods based on deep features. The transform is obtained by training a neural network with a specialized triplet loss, designed to emphasize actual scene changes while attenuating illumination changes. The transform yields an illumination-invariant representation, structured as an image map, which is highly flexible and can be easily used for various tasks.
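To make the triplet idea concrete, here is a minimal sketch of a generic margin-based triplet loss on toy patches. It is not the specialized loss from the paper, whose exact form the abstract does not give. The `normalize` function is a hypothetical hand-written stand-in for the learned transform, and the patch values, the margin of 0.5, and all function names are assumptions chosen for illustration. The anchor and positive are the same scene content under different illumination, modeled here as a gain and bias change, and the negative is different scene content.

```python
import math


def normalize(patch):
    """Hypothetical stand-in for the learned transform (an assumption):
    subtract the mean and scale to unit norm, which cancels gain and bias."""
    mean = sum(patch) / len(patch)
    centered = [v - mean for v in patch]
    norm = math.sqrt(sum(v * v for v in centered))
    if norm == 0.0:
        return centered  # flat patch: nothing to scale
    return [v / norm for v in centered]


def l2_distance(a, b):
    """Euclidean distance between two equally sized flattened patches."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=0.5):
    """Generic hinge triplet loss: pull anchor/positive together and push
    anchor/negative at least `margin` further apart."""
    d_pos = l2_distance(anchor, positive)
    d_neg = l2_distance(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


# Toy flattened patches (illustrative values only).
scene = [0.1, 0.4, 0.9, 0.3]             # anchor
relit = [2.0 * v + 0.05 for v in scene]  # positive: same scene, new gain and bias
other = [0.8, 0.2, 0.1, 0.7]             # negative: different scene content

raw_loss = triplet_loss(scene, relit, other)
transformed_loss = triplet_loss(normalize(scene), normalize(relit), normalize(other))
print(raw_loss, transformed_loss)
```

With these toy values the loss on raw intensities is positive, because the illumination change alone moves the positive far from the anchor, while the loss in the normalized domain is zero. A learned transform would aim for the same behavior under far more complex illumination changes than a global gain and bias.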

Middlebury 2014
