Self-supervision for medical image classification: state-of-the-art performance with ~100 labeled training samples per class

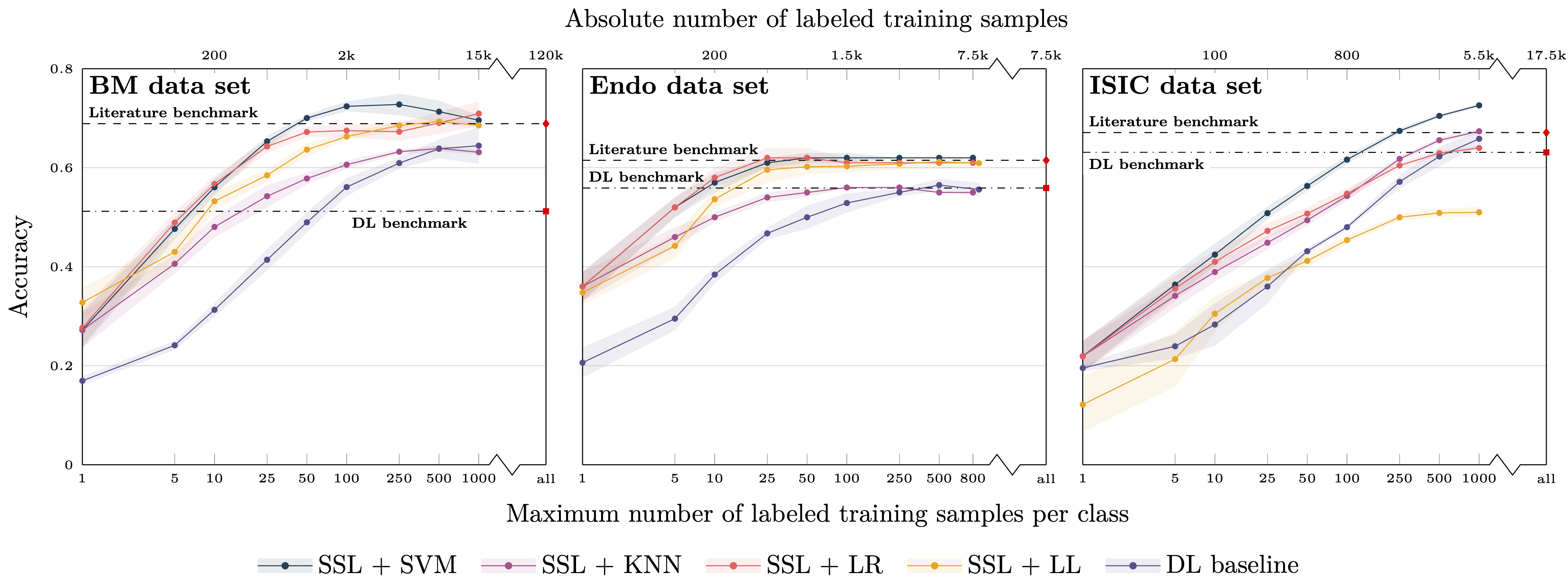

Is self-supervised deep learning (DL) for medical image analysis already a serious alternative to the de facto standard of end-to-end trained supervised DL? We tackle this question for medical image classification, with a particular focus on one of the field's most limiting factors at present: the (non-)availability of labeled data. Based on three common medical imaging modalities (bone marrow microscopy, gastrointestinal endoscopy, dermoscopy) and publicly available data sets, we analyze the performance of self-supervised DL within the self-distillation with no labels (DINO) framework. After an image representation has been learned without the use of image labels, conventional machine learning classifiers are applied to it. The classifiers are fit using a systematically varied number of labeled samples (1 to 1000 per class). Exploiting the learned image representation, we achieve state-of-the-art classification performance for all three imaging modalities and data sets using only 1% to 10% of the available labeled data and about 100 labeled samples per class.
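The evaluation protocol described above (fit a conventional classifier on a fixed image representation while varying the number of labeled samples per class) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the embeddings are synthetic Gaussian blobs standing in for features from a self-supervised encoder, a nearest-centroid rule stands in for the conventional classifiers, and the function names (`sample_per_class`, `fit_centroids`, `accuracy_for_budget`) are hypothetical rather than taken from the paper.

```python
import random
from collections import defaultdict


def sample_per_class(labels, n_per_class, seed=0):
    """Return indices of at most n_per_class randomly chosen samples per class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    chosen = []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        chosen.extend(indices[:n_per_class])
    return chosen


def fit_centroids(features, labels):
    """Nearest-centroid 'training': average the feature vectors of each class."""
    sums, counts = {}, defaultdict(int)
    for vec, label in zip(features, labels):
        if label not in sums:
            sums[label] = [0.0] * len(vec)
        sums[label] = [s + v for s, v in zip(sums[label], vec)]
        counts[label] += 1
    return {c: [s / counts[c] for s in total] for c, total in sums.items()}


def predict(centroids, vec):
    """Assign the class whose centroid is closest in squared Euclidean distance."""
    return min(
        centroids,
        key=lambda c: sum((a - b) ** 2 for a, b in zip(centroids[c], vec)),
    )


def make_toy_embeddings(n_per_class=200, dim=8, seed=1):
    """Synthetic stand-in for frozen embeddings (assumption): one blob per class."""
    rng = random.Random(seed)
    features, labels = [], []
    for label in range(3):
        for _ in range(n_per_class):
            features.append([rng.gauss(3.0 * label, 1.0) for _ in range(dim)])
            labels.append(label)
    return features, labels


def accuracy_for_budget(train, test, n_per_class, seed=0):
    """Fit on n_per_class labeled samples per class, then score on the test set."""
    train_x, train_y = train
    test_x, test_y = test
    idx = sample_per_class(train_y, n_per_class, seed)
    centroids = fit_centroids([train_x[i] for i in idx], [train_y[i] for i in idx])
    hits = sum(predict(centroids, x) == y for x, y in zip(test_x, test_y))
    return hits / len(test_y)


if __name__ == "__main__":
    train = make_toy_embeddings(seed=1)
    test = make_toy_embeddings(n_per_class=50, seed=2)
    # Vary the label budget, mirroring the idea of a per-class sample sweep.
    for n in (1, 10, 100):
        print(n, accuracy_for_budget(train, test, n))
```

In a real experiment the toy embeddings would be replaced by features extracted once from the pretrained encoder, and the sweep would typically be repeated over several random seeds to estimate the variance at small label budgets.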
