Sequence Generation using Deep Recurrent Networks and Embeddings: A study case in music

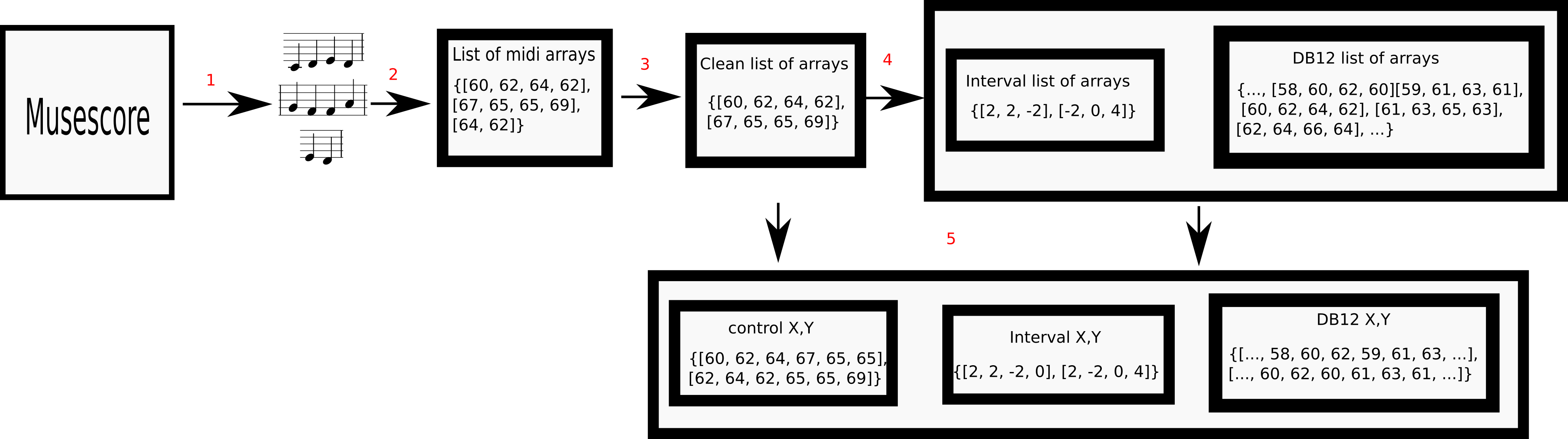

Automatic sequence generation has been widely explored in recent years. In particular, natural language processing and automatic music composition have gained importance thanks to recent advances in machine learning and in neural networks with intrinsic memory mechanisms, such as recurrent neural networks. This paper evaluates different types of memory mechanisms (memory cells) and analyses their performance in music composition. The proposed approach considers music theory concepts such as transposition, and uses data transformations (embeddings) to introduce semantic meaning and improve the quality of the generated melodies. A set of quantitative metrics is presented to evaluate the performance of the proposed architecture automatically by measuring the tonality of the musical compositions.
