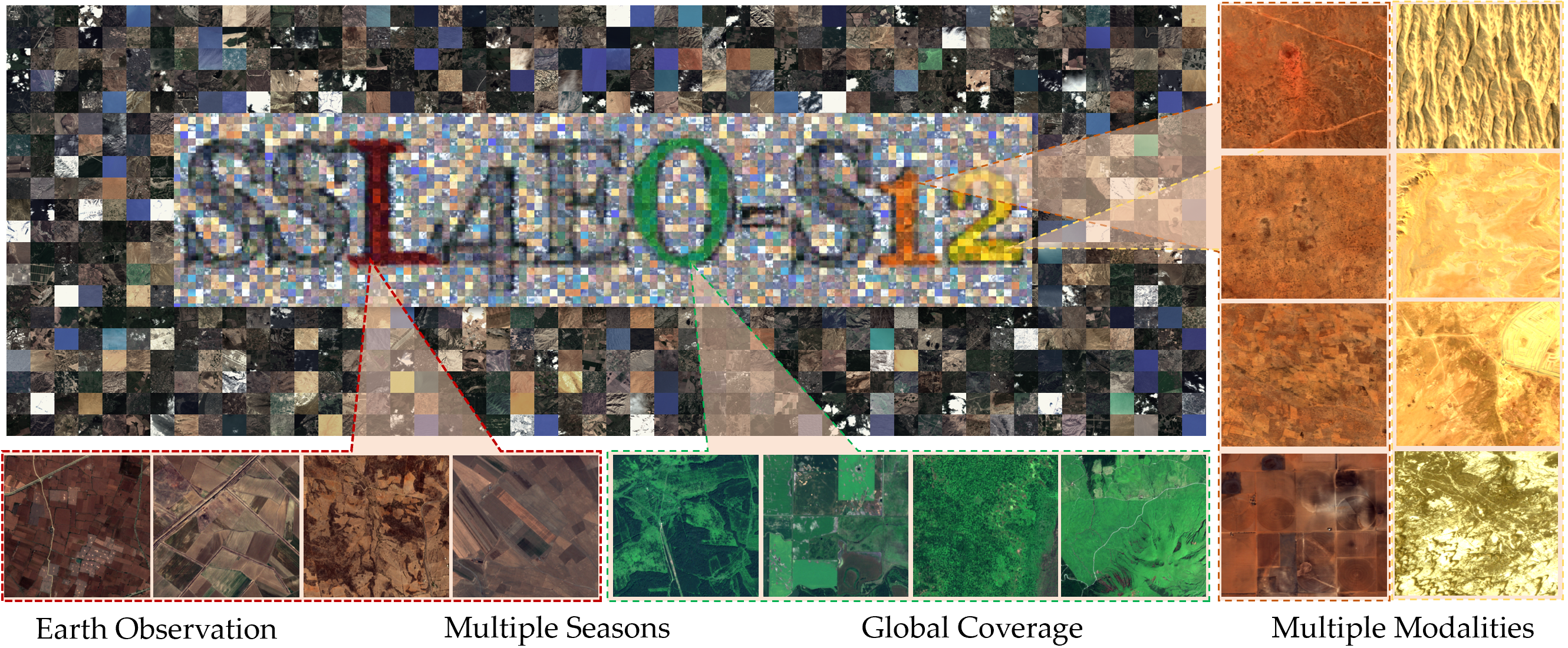

SSL4EO-S12: A Large-Scale Multi-Modal, Multi-Temporal Dataset for Self-Supervised Learning in Earth Observation

Self-supervised pre-training bears potential to generate expressive representations without human annotation. Most pre-training in Earth observation (EO) are based on ImageNet or medium-size, labeled remote sensing (RS) datasets. We share an unlabeled RS dataset SSL4EO-S12 (Self-Supervised Learning for Earth Observation - Sentinel-1/2) to assemble a large-scale, global, multimodal, and multi-seasonal corpus of satellite imagery from the ESA Sentinel-1 \& -2 satellite missions. For EO applications we demonstrate SSL4EO-S12 to succeed in self-supervised pre-training for a set of methods: MoCo-v2, DINO, MAE, and data2vec. Resulting models yield downstream performance close to, or surpassing accuracy measures of supervised learning. In addition, pre-training on SSL4EO-S12 excels compared to existing datasets. We make openly available the dataset, related source code, and pre-trained models at https://github.com/zhu-xlab/SSL4EO-S12.

PDF AbstractCode

Datasets

Results from the Paper

Ranked #1 on

Multi-Label Image Classification

on BigEarthNet (official test set)

(using extra training data)

Ranked #1 on

Multi-Label Image Classification

on BigEarthNet (official test set)

(using extra training data)

SSL4EO-S12

SSL4EO-S12