Stable Knowledge Editing in Large Language Models

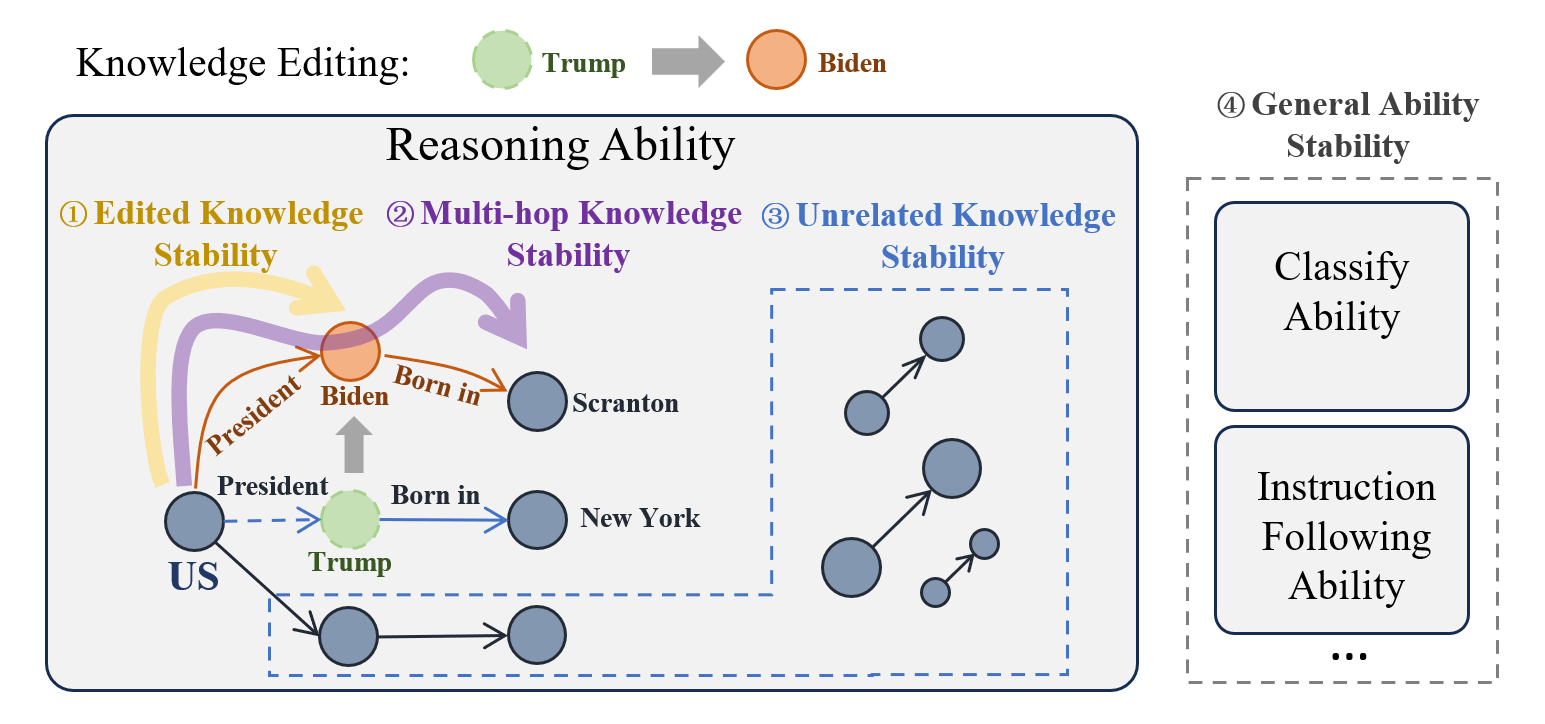

Efficient knowledge editing of large language models is crucial for replacing obsolete information or incorporating specialized knowledge on a large scale. However, previous methods implicitly assume that knowledge is localized and isolated within the model, an assumption that oversimplifies the interconnected nature of model knowledge. The localization assumption leads to incomplete knowledge editing, whereas the isolation assumption may impair both other knowledge and general abilities. Together, these assumptions make the performance of knowledge editing methods unstable. To transcend these assumptions, we introduce StableKE, a method that adopts a novel perspective based on knowledge augmentation rather than knowledge localization. To overcome the expense of human labeling, StableKE integrates two automated knowledge augmentation strategies: the Semantic Paraphrase Enhancement strategy, which diversifies knowledge descriptions to facilitate the teaching of new information to the model, and the Contextual Description Enrichment strategy, which expands the surrounding knowledge to prevent the forgetting of related information. StableKE surpasses other knowledge editing methods, demonstrating stability on both edited knowledge and multi-hop knowledge, while also preserving unrelated knowledge and general abilities. Moreover, StableKE can edit knowledge on ChatGPT.

MMLU
